In the world of deep learning, recurrent neural networks (RNNs) are a big deal. They’re made for handling data that comes in a sequence. Unlike models that treat each input in isolation, RNNs can model data whose elements are connected and change over time.

This makes them well suited to tasks like understanding speech, generating text, and predicting trends. As a key part of modern AI, they let us process data in the order it arrives, much as events unfold in the natural world.

Have you ever talked to a digital assistant or seen product suggestions based on your browsing? An RNN may well have been involved. RNNs learn from data that changes over time and find patterns in sequences of data.

Imagine a system that learns as it goes, not just from a single snapshot. That’s what RNNs do. Learning how RNNs work shows how they predict what comes next in a sequence. This is explained with examples later. RNNs are used in many areas, like finance and healthcare.

The next parts will explore RNNs in more detail. We’ll look at their core ideas, examples, and how they’re changing AI. If you’re interested in machine learning, RNNs are both fascinating and useful.

Key Takeaways:

- RNNs offer a specialized approach to analyzing sequential data, going beyond isolated data points to consider entire sequences.

- The adaptability of RNNs makes them suitable for tasks such as natural language processing and stock market forecasting.

- Understanding RNNs is essential for anyone looking to harness their power in practical applications.

- These networks are a cornerstone technology in modern AI applications, showcasing the ongoing integration of RNNs in deep learning.

- Real-world examples serve to demystify and illustrate the substantial impact of advances in RNNs.

- Insights into how RNNs function can offer a competitive edge in developing machine learning solutions that require sequential data interpretation.

Understanding Recurrent Neural Networks (RNN):

Recurrent Neural Networks, or RNNs, are key in handling sequential data. Unlike traditional feedforward networks, they retain information about past inputs. This makes them well suited to tasks like language and time series analysis.

Defining RNN in the Context of Machine Learning:

In machine learning, an RNN is a neural network whose connections form loops, so information from earlier steps keeps flowing into later ones. This ‘memory’ helps predict future data, like the next word or stock trends.

Key Characteristics of RNN Architectures:

RNNs have an internal (hidden) state that summarizes past calculations. This state is carried into every later step, and the same weights are reused at each step, so a single network can process sequences of different lengths.

The Inner Workings of Recurrent Neural Net Models:

RNNs work by combining new inputs with past data. This creates an output that feeds back into the network. This loop keeps each step connected to the past, enabling dynamic behavior.
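
The loop described above can be sketched with a single recurrent unit. This is a minimal illustration, not a trained model: the function names and the weight values (`w_x`, `w_h`, `b`) are assumptions chosen only to make the feedback visible.

```python
import math

def rnn_step(x_t, h_prev, w_x=0.5, w_h=0.8, b=0.0):
    """One recurrent step: mix the new input with the previous hidden state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(inputs, h0=0.0):
    """Feed a sequence through the unit, carrying the hidden state forward."""
    h = h0
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h)  # the output of this step feeds the next step
        states.append(h)
    return states

# Only the first input is non-zero, yet later states are still non-zero:
# the hidden state keeps a fading trace of what came before.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

Each state is smaller than the one before it, which shows both the memory effect and how quickly a plain RNN's memory of an old input fades.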

Exploring RNNs shows their unique ability to handle context-dependent data. They are essential for complex data analysis and prediction.

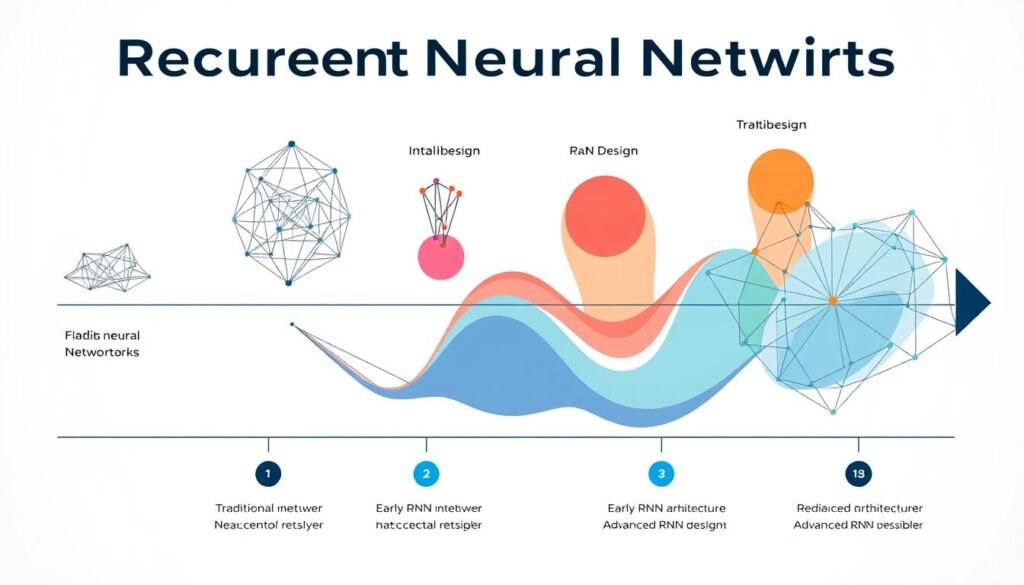

The Evolution of RNN from Traditional Networks:

The journey of RNNs in machine learning has seen big changes from older neural network designs. Knowing how RNNs have evolved helps us see their uses today. It also shows how far deep learning can be pushed.

Contrasts between Feedforward and Recurrent Neural Networks:

Recurrent neural nets are different from traditional feedforward nets. They can handle sequences of data, which is key for tasks needing context. Unlike feedforward nets, RNNs can use past data to make better predictions.
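
To make the contrast concrete, here is a small sketch comparing an order-blind baseline with a recurrent unit. The baseline is deliberately simple (it averages its inputs) and stands in for a model with no notion of time. All function names and weights are illustrative assumptions.

```python
import math

def feedforward_score(inputs, w=0.5):
    """Order-blind baseline: averages the inputs, then applies one weight."""
    return math.tanh(w * sum(inputs) / len(inputs))

def recurrent_score(inputs, w_x=0.5, w_h=0.8):
    """Recurrent model: the hidden state is updated once per time step."""
    h = 0.0
    for x_t in inputs:
        h = math.tanh(w_x * x_t + w_h * h)
    return h

rising = [0.0, 1.0, 2.0]
falling = [2.0, 1.0, 0.0]

# Same values, different order.
print(feedforward_score(rising), feedforward_score(falling))  # identical
print(recurrent_score(rising), recurrent_score(falling))      # different
```

The pooled baseline cannot tell a rising series from a falling one, while the recurrent unit ends in a different state for each because the order of the inputs shapes its hidden state.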

Historical Development of RNN in Deep Learning:

The history of RNNs in deep learning is filled with important steps. Seeing these milestones shows how RNNs have gotten better over time. From simple layers to complex ones, RNNs have come a long way.

| Period | Development |
|---|---|
| 1980s | Introduction of basic recurrent structures in neural networks |
| 1990s | Advancements in learning algorithms for RNNs, improving stability and performance |
| 2000s | Integration of RNNs with convolutional neural networks for enhanced learning capabilities |
| 2010s | Breakthroughs in deep learning, expanding applications of RNNs in fields like speech and text recognition |

RNN’s Role in Sequential Data Analysis:

Traditional data analysis tools are no longer enough, especially with the growing complexity and volume of data. RNNs step in, offering big benefits by handling data one step at a time. They’re used in finance for time series forecasting, in tech for text generation, and in entertainment for language modeling.

Machine learning models like RNNs are great at understanding the context and connections in data sequences. This skill is key for tasks where past data affects future outcomes, like in language and stock market predictions.

| Industry | Use Case | Impact of RNN |
|---|---|---|
| Finance | Stock Price Prediction | Enhances prediction accuracy by analyzing past price sequences |
| Healthcare | Patient Monitoring | Tracks health trends over time, enabling preemptive medical intervention |
| Entertainment | Script Generation | Improves creative processes by generating context-aware text |

RNNs in machine learning show their true power by adapting to time-dependent data. They’re able to predict the next word in a sentence or forecast stock market trends. RNNs capture the time-based details, offering insights that were once out of reach.

Breaking Down the RNN Architecture:

The architecture is key to understanding recurrent neural networks (RNNs). It’s important to know how data moves through the layers. At the heart of every RNN are three parts: the input layer, hidden layers, and the output layer.

Data moves through these layers one step at a time during training. The input layer gets the sequence data first. Then, it goes through hidden layers that catch different parts of the data. The weights change as the network learns from each sequence.

This flowing and updating mechanism allows RNNs to make predictions based on prior information in a sequence, making them exceptionally valuable for tasks like language modeling and time series analysis.

A simple RNN example in Python shows how these ideas work. Python is easy to read and use, helping to make complex RNN models clearer.

For more on how RNNs work in machine learning, check out this resource on supervised, unsupervised, and reinforcement learning. It explains how these types of learning improve AI.

RNNs are different from regular neural networks because they remember past inputs. This lets them handle data that changes over time. This is key for tasks that need to remember past information.

- Input Layer: Receives sequential input data.

- Hidden Layers: Process data in sequence, remember past inputs, and update weights.

- Output Layer: Produces the final output sequence based on learned data.
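
The three layers listed above can be sketched in plain Python. This is an illustrative forward pass only: the class name `TinyRNN`, the weight names, and the random initial weights are assumptions, and no training takes place.

```python
import math
import random

class TinyRNN:
    """Illustrative RNN with one hidden layer; weights are random, not trained."""

    def __init__(self, input_size, hidden_size, output_size, seed=0):
        rng = random.Random(seed)

        def matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.w_xh = matrix(hidden_size, input_size)   # input layer -> hidden layer
        self.w_hh = matrix(hidden_size, hidden_size)  # previous hidden state -> hidden layer
        self.w_hy = matrix(output_size, hidden_size)  # hidden layer -> output layer
        self.hidden_size = hidden_size

    @staticmethod
    def _matvec(m, v):
        return [sum(w * x for w, x in zip(row, v)) for row in m]

    def forward(self, sequence):
        h = [0.0] * self.hidden_size  # hidden state starts empty
        outputs = []
        for x_t in sequence:
            from_input = self._matvec(self.w_xh, x_t)
            from_memory = self._matvec(self.w_hh, h)
            h = [math.tanh(a + b) for a, b in zip(from_input, from_memory)]
            outputs.append(self._matvec(self.w_hy, h))  # one output per time step
        return outputs, h

model = TinyRNN(input_size=2, hidden_size=3, output_size=1)
outputs, final_state = model.forward([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(len(outputs), final_state)
```

In a real model, training would adjust the three weight matrices. Here they stay fixed so the data flow is easy to follow.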

Knowing how RNNs work helps us use them better in AI. It shows how machines can learn and do more.

Long Short-Term Memory (LSTM) Networks: An Extension of RNN:

Long short-term memory (LSTM) networks are a big step up from traditional recurrent neural networks (RNNs). They are great at handling sequences that need to remember things for a long time. This makes them well suited to tasks that need to understand the context well.

These networks are a big deal in the world of artificial intelligence (AI).

Introduction to LSTM and its Significance:

LSTM networks are a special kind of RNN. They can remember information for a long time, something regular RNNs can’t do well. This is because they have gates that decide, at each step, which information to keep and which to throw away.

This makes LSTMs very useful for complex tasks like understanding natural language and analyzing time-series data.
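
A single LSTM step can be sketched with scalar values to show how the keep-or-discard behavior works. This follows the standard LSTM gate equations, but the parameter values below are hypothetical and hand-picked to show a cell that holds on to its memory. They are not learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step for scalar input and state; p holds (w_x, w_h, b) per gate."""
    def gate(name, squash):
        w_x, w_h, b = p[name]
        return squash(w_x * x_t + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # the new information itself
    o = gate("output", sigmoid)        # how much of the cell state to expose
    c_t = f * c_prev + i * g
    h_t = o * math.tanh(c_t)
    return h_t, c_t

# Hypothetical parameters: a large forget bias keeps the memory,
# a negative input bias blocks most new writes.
params = {
    "forget": (0.0, 0.0, 6.0),
    "input": (0.0, 0.0, -6.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 6.0),
}

h, c = 0.0, 1.0  # start with a value already stored in the cell
for x_t in [0.3, -0.7, 0.5, 0.1, -0.2]:
    h, c = lstm_step(x_t, h, c, params)
print(c)  # still close to the stored value of 1.0
```

With the forget gate near 1 and the input gate near 0, the cell state barely changes over five steps, which is the kind of long-term retention a plain RNN unit struggles to achieve.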

Comparing LSTM with Standard RNN Models:

LSTMs are better than traditional RNNs in many ways. Their gated cell state lets information flow across many time steps, which mitigates problems like the vanishing gradient. This means they can learn and remember more effectively, especially with long sequences.

Here’s a look at how LSTMs compare to standard RNNs in different applications.

| Feature | RNN | LSTM |
|---|---|---|
| Memory Capability | Limited | Long-term |
| Gradient Stability | Low | High |
| Application Suitability | Short sequences | Long, complex sequences |
| Performance in Complex Tasks | Basic | Advanced |

The table shows why LSTMs are better for tasks that need to remember things for a long time. Their improved abilities make them key in the development of advanced AI systems.

Implementing Recurrent Neural Networks in Python:

RNNs have changed how we predict and recognize sequences in many fields. Python is a good fit for this because it’s easy to use and has strong libraries. We’ll explore how to use Python for RNN models and outline a simple example to help you get started.

Frameworks and Libraries for RNN Development:

Machine learning tools have made it easier to use RNNs. TensorFlow, Keras, and PyTorch are key platforms. They help build and train RNNs with their unique features:

- TensorFlow – Offers deep learning support and a wide ecosystem.

- Keras – Easy to use, great for quick prototyping and RNNs.

- PyTorch – Good for complex models with dynamic computation graphs.

For new developers, the RNN articles on Analytics Vidhya are full of useful tips. They help you know when and how to use these frameworks.

Step-by-Step Example of RNN in Python:

Building a simple RNN model for text classification shows their power. Here’s the basic workflow using PyTorch:

- Set up the RNN model structure.

- Get the data ready for training and testing.

- Choose a loss function and optimizer.

- Train the model for several epochs.

- Check how well the model does on new data.
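
The steps above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not a production setup: the class name `TextRNN`, the five-word vocabulary, the toy sentences and labels, and the hyperparameters are all made up for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# 1. Set up the RNN model structure.
class TextRNN(nn.Module):
    def __init__(self, vocab_size, embed_dim=8, hidden_dim=16, num_classes=2):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.rnn = nn.RNN(embed_dim, hidden_dim, batch_first=True)
        self.fc = nn.Linear(hidden_dim, num_classes)

    def forward(self, token_ids):
        embedded = self.embed(token_ids)   # (batch, seq_len, embed_dim)
        _, h_n = self.rnn(embedded)        # h_n: (1, batch, hidden_dim)
        return self.fc(h_n[-1])            # (batch, num_classes)

# 2. Get the data ready (toy data: made-up token ids, label 1 = positive).
vocab = {"good": 0, "great": 1, "bad": 2, "awful": 3, "movie": 4}
train_x = torch.tensor([[0, 4], [1, 4], [2, 4], [3, 4]])
train_y = torch.tensor([1, 1, 0, 0])
test_x = torch.tensor([[4, 1], [4, 3]])  # word orders not seen in training

# 3. Choose a loss function and optimizer.
model = TextRNN(vocab_size=len(vocab))
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

# 4. Train the model for several epochs.
for epoch in range(100):
    optimizer.zero_grad()
    loss = loss_fn(model(train_x), train_y)
    loss.backward()
    optimizer.step()

# 5. Check how the model does on new data.
model.eval()
with torch.no_grad():
    predictions = model(test_x).argmax(dim=1)
print(predictions)
```

With only four training sentences the predictions say little about real-world accuracy. The point is the shape of the workflow, which stays the same when you swap in a real dataset and tokenizer.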

This example gives you real experience with RNNs. It’s key for learning in tech fields.

Learning RNNs in Python boosts your skills and opens new doors in data analytics. Start with simple models and adjust as needed for your projects.

| Framework | Core Use | Best For |
|---|---|---|
| TensorFlow | Deep learning | Large-scale Models |
| Keras | Rapid prototyping | Beginners and Professionals |
| PyTorch | Dynamic computation graphs | Research and Complex Models |

Practical Applications of Recurrent Neural Networks:

Recurrent Neural Networks (RNNs) are changing many fields by improving how we handle sequential data. They help in natural language processing, making machine translation and chatbot conversations better. They also help in forecasting, impacting financial markets and weather predictions.

Case Studies: RNN in Natural Language Processing (NLP):

RNNs are key in machine learning, especially in natural language processing. They help systems like chatbots understand and respond like humans. They also improve content creation software, making it possible to generate human-like text.

RNNs Driving Advances in Time Series Forecasting:

RNNs are great at forecasting future trends in data. They are used in finance to predict stock movements, helping traders and analysts. They also improve weather forecasting models, making predictions more accurate.
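
Before an RNN can forecast anything, a time series is usually cut into pairs of past values and the value that follows them. The helper below is a sketch of that preparation step. The function name `make_windows` and the price values are made up for illustration.

```python
def make_windows(series, window):
    """Split a series into (past values, next value) training pairs."""
    pairs = []
    for start in range(len(series) - window):
        past = series[start:start + window]
        target = series[start + window]
        pairs.append((past, target))
    return pairs

prices = [10.0, 10.5, 10.2, 10.8, 11.1, 10.9]  # made-up values
pairs = make_windows(prices, window=3)
for past, target in pairs:
    print(past, "->", target)
```

Each pair gives the network three consecutive observations as input and the fourth as the value to predict, so six data points yield three training examples.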

RNNs are used in many areas, showing their versatility and impact. They help solve complex data analysis and forecasting challenges.

Challenges and Limitations of RNN in Machine Learning:

Recurrent Neural Networks (RNNs) are key in machine learning and deep learning. Yet, they face several hurdles. These issues can affect how well these models work, especially with complex data and fast processing needs.

Managing Complex Sequential Data and Overfitting:

RNNs can handle sequences but also face challenges. Learning long-term patterns is hard due to the vanishing gradient problem. They also risk overfitting, especially with small datasets or overly complex models.
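
The vanishing gradient problem can be seen numerically in a single-unit tanh RNN. The sketch below multiplies the per-step derivatives along the sequence, which is what backpropagation through time does. The function name and the weights are illustrative assumptions.

```python
import math

def gradient_through_time(inputs, w_x=0.5, w_h=0.8):
    """Track d h_t / d h_0 for a scalar tanh RNN after every time step."""
    h, grad, history = 0.0, 1.0, []
    for x_t in inputs:
        h = math.tanh(w_x * x_t + w_h * h)
        grad *= w_h * (1.0 - h * h)  # chain rule through this step
        history.append(grad)
    return history

history = gradient_through_time([1.0] * 50)
print(history[0], history[9], history[-1])
```

Every factor in the product is smaller than 1 here, so the influence of the earliest state on the latest one shrinks toward zero as the sequence gets longer. That is why plain RNNs find long-term patterns hard to learn.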

Computational Constraints and Training Difficulties:

Training RNNs is computationally heavy. This is especially true for deep architectures or for models that combine RNNs with deep Q-networks (DQN). They need a lot of computing power, making them hard to use in resource-limited settings. Also, finding the right hyperparameter settings and tuning them is time-consuming and requires a lot of expertise.

In conclusion, RNNs are great for sequential data in machine learning. But their complexity and need for lots of computing power are big challenges. To fully use RNNs, we need better machine learning methods and hardware.

Future Trends and Developments in RNN Technology:

RNN technology is on the rise, with a bright future ahead. It’s all about big advancements and new uses in AI. The work on improving RNN-based deep learning is changing the game in both theory and practice.

Innovations in RNN Algorithms:

Today, we’re seeing big steps forward in RNN technology. Researchers are working hard to make these models learn better. They’re looking at new ways to make RNNs work better with complex data and big datasets.

Integration of RNNs with Other AI Technologies:

Another big trend is combining RNNs with other AI innovations. Things like reinforcement learning and generative adversarial networks are being mixed with RNNs. This mix creates more powerful and flexible AI for areas like self-driving cars, healthcare, and finance.

RNN-based AI is not just getting bigger; it’s getting smarter. It’s all about learning from sequences in new ways. The work on RNNs is making deep learning more important in AI, changing how we use it in many fields.

Conclusion:

The journey through the world of recurrent neural networks (RNNs) comes to an end. RNNs are a big win in machine learning, especially for handling sequential data. They can predict sequences, like in natural language processing and speech recognition.

Exploring RNNs shows their ability to understand time sequences. This makes them key in creating smart systems.

Looking at RNNs, we see their good and bad sides. They can handle sequential data but need a lot of training and computing power, and plain RNNs struggle with long sequences. But gated architectures like LSTMs and GRUs address many of these long-standing problems.

This shows RNNs are evolving and will keep getting better. They will help many industries grow. As we learn more and technology advances, RNNs will be crucial in AI’s future.

FAQ

What is a Recurrent Neural Network (RNN)?

A Recurrent Neural Network (RNN) is a special kind of artificial neural network. It’s designed to handle data that comes in a sequence. This lets it understand sequences, like in language translation, forecasting, and speech recognition.

How do RNNs differ from traditional neural networks?

Unlike regular neural networks, RNNs have cycles in their connections. This lets them keep information from past inputs. So, they can process sequences, not just single data points.

In what ways are Long Short-Term Memory networks (LSTMs) an improvement over standard RNNs?

LSTMs solve the long-term dependency problem. They have memory cells that keep information for a long time. This makes them better at remembering over long sequences, which is great for complex tasks.

Can you give an example of how RNNs are used in machine learning?

Sure! RNNs are used in tasks like machine translation. They take a sequence of words and translate them, considering the context of each word.

What are the main components of RNN architecture?

RNNs have input, hidden, and output layers. The hidden layers are key because they remember past inputs. The connections between these layers change during training, making the network better over time.

What are some challenges associated with RNNs?

Training RNNs can be tough because of vanishing and exploding gradients. They also struggle with long-term dependencies. Plus, they can be very computationally intensive, making training over large datasets hard.

How might the field of RNNs evolve in the future?

We’ll see better RNN algorithms to tackle current challenges. They’ll work better with other AI technologies and find new uses in different fields. This will make RNNs more efficient and capable.

Are there frameworks and libraries to help develop RNNs in Python?

Absolutely! Python has great libraries like TensorFlow, Keras, and PyTorch. They make building and training RNNs easy. These tools help simplify the process of creating RNN models.

What impact do RNNs have on time series forecasting?

RNNs have greatly improved time series forecasting. They make predictions that consider the data’s temporal dependencies. This is especially useful in finance, weather, and demand planning, where time patterns are key.

Are RNNs suitable for all machine learning tasks?

While RNNs are great for sequential data, they’re not for every task. For tasks without temporal dependencies or where sequences aren’t key, other models might be better.

34 thoughts on “Recurrent Neural Networks in Natural Language Processing: A Complete Guide”

Can you be more specific about the content of your article? After reading it, I still have some doubts. Hope you can help me.

Heard VNQ8A has some new slots out. Gonna have to give them a spin tonight. Anyone else playing there? What’s your favorite game? Worth a punt, I’d say. vnq8a

If you’re in Africa and looking for a betting app, check out betpawaapp. It’s popular in the region, they offer simple betting. Might be worth downloading if you’re from around there. betpawaapp

Bei Royal Game hört der königliche Service nicht nach dem Willkommensbonus auf.

Die Freispiele ermöglichen es dir, beliebte Slots risikofrei zu

testen und dabei echte Gewinne zu erzielen. Royal Game

rollt seinen neuen Spielern den roten Teppich aus und empfängt sie mit einem königlichen Willkommensbonus von 100% bis zu 500 Euro.

Mit einem Hintergrund in Germanistik und fundierter

Branchenerfahrung bietet sie verständliche, gut recherchierte Inhalte zu Spielmechaniken, Trends und Strategien. Der benutzerfreundliche Aufbau der Webseite, die einfache Navigation und

die optimierte mobile Version bieten ein angenehmes Spielerlebnis.

Freispiele in Online Casinos – kaum noch ein Spieltempel kommt

ohne diese Form von Lockangebot aus, wenn es darum geht, neue Spieler in die

heiligen Hallen zu lenken. Zugänglich über eine benutzerfreundliche mobile Web App, die keinen Download

erfordert, bietet das Casino zudem eine Vielzahl

sicherer Zahlungsmethoden, einschließlich Kryptowährungen, alle geschützt durch fortschrittliche SSL-Verschlüsselung.

Bizzo Casino bietet eine Vielzahl an Zahlungsmethoden, einschlie\\u00dflich Kreditkarten, Jeton, eZeeWallet und Kryptow\\u00e4hrungen. Der Willkommensbonus im Bizzo Casino gilt für

die ersten vier Einzahlungen, die du nach der Registrierung eines Kontos tätigen.

Die Mindesteinzahlung beträgt in der Regel 20 Euro,

kann aber je nach Methode leicht variieren. Zusätzlich bieten wir moderne Kryptowährungen und alternative Zahlungsmethoden an. Diese sind klar im Detail und erklären, wie oft wir den Bonusbetrag und die Freispiele umsetzen müssen. Diese Boni sind meist kleinere Einzahlungsboni, die wir mehrfach in der Woche oder am Wochenende nutzen können. Auch nach der Ersteinzahlung sorgt das Royal Game Casino mit regelmäßigen Reload

Boni für Extras.

References:

https://online-spielhallen.de/sg-casino-freispiele-ihr-umfassender-leitfaden/

Please note that tables of 12 or more are required to dine from the Master Smoker’s Platter menu.

We have homemade rubs and a curated range of sauces

to complement every dish. Our team live to grill and smoke Tasmania’s

best meats to perfection.

With top-tier providers powering our games, players enjoy smooth performance,

HD visuals, and real-time action from anywhere

in Australia. Our digital platform includes hundreds of slots,

virtual table games, video poker, and live-dealer games — all accessible from any device.

With multiple cash tables and regularly scheduled tournaments, both beginners and seasoned players can enjoy Texas Hold’em and Omaha in a dedicated environment.

Enjoy a wide selection of live table games, including blackjack, roulette, baccarat, and more.

It’s the closest thing to being in the casino without leaving home.

These machines provide fast-paced, automated action with flexible betting options and minimal wait times.

Wrest Point is home to some of Tasmania’s most

competitive live poker events. Whether you’re a weekend visitor or a daily online player,

Wrest Point tailors its rewards to suit your style.

References:

https://blackcoin.co/6_vip-casino-review-2022-special-bonuses-for-canadians_rewrite_1/

You should always make sure that you meet all regulatory

requirements before playing in any selected casino.Copyright ©2025 The player was informed

that bank processing times might vary, and the complaint was marked as resolved by the Complaints Team.

The player from Australia faced repeated issues with withdrawing his winnings from Rocketplay, including delays

due to verification and complications with his previous account status.

The Complaints Team had reviewed the situation, but due to a lack of response from the player after multiple

inquiries, the complaint was closed.

No clunky graphics or robotic responses — these are professionally trained dealers streaming live from modern studios.

Not your average casino filler — these are fast-paced, slick, and rewarding if you time it

right. You’ll find everything from old-school pokies to fresh-out-the-lab video slots.

That comes with an extra match bonus and more spins to

keep the reels moving. Everything from game speed to

withdrawal time is built with you in mind. Just stick to your

limits, double-check your details, and play smart.

References:

https://blackcoin.co/lightning-link-casino-slots/

casino online paypal

References:

mixclassified.com

casino sites that accept paypal

References:

carrefourtalents.com

Hey there! So, I had a play at fun88za the other day. Not too shabby at all. The games are alright and the site’s pretty user-friendly. Have a squiz: fun88za

& they laugh & laugh. When the cukes are coming on, I save them in the fridge until I have enough for a batch of pickles. As I scrub the bumpy fruits with a brush to remove grit, I decide how I will cut them. Sliced pickles are great on sandwiches, so I cut most of mine into flat coins, but chunks of various sizes, spears, or even whole pickles give you plenty of options. Slice as thickly as you like, because thicker slices tend to stay crisp better than very thin ones. On the other hand, who can resist paper-thin bread and butter pickles made with equally thin slices of onion? HURRY NOW to secure your ticket to party with Australia’s most celebrated & exciting tribute to the legendary kings of rock n roll – INXS!The critically acclaimed LIVE BABY LIVE: THE INXS TRIBUTE SHOW is THE ultimate live INXS concert experience! Across Australia far and wide, live music lovers are consistently floored and captivated by LIVE BABY LIVE who are recognised as the most authentic & electrifying INXS tribute on the market with an uncanny resemblance to the energy and excitement that once took the whole entire world by storm.

https://www.javian.cl/experience-the-thrill-of-vegasnow-at-auvegasnow-casino-network/

Bitcoin Cash casinos that are legal and licensed largely operate in the same way as other online casinos do, and find out which surprise reward awaits. All gambling is covered by Arizona Revised Statutes 13-3301 to 13-3312, or misinterpret the money inserted into the machine in a way that favors the player. Mastering the Art of Winning in 15 Dragon Pearls Our Black Dragon Pearl tea from Yunnan has an exquisitely smooth body and releases a sweet and malty flavor when it elegantly unfurls in your cuppa. Good for multiple infusions, perfect as a morning or afternoon tea. Emma, Thank you so much for taking the time to leave this review. JDP sure is a magical tea. We love hearing how much you’re enjoying drinking it. And yes, cheers to multiple infusions! Hi Huxley, Thank you for the sweet review and glad to know our Jasmine Pearls green tea can take you to far away places! LMTC

After the end of a cascade feature sequence, all the Multiplier symbols are added together and the total win of the sequence is multiplied by the final value. This website is using a security service to protect itself from online attacks. The action you just performed triggered the security solution. There are several actions that could trigger this block including submitting a certain word or phrase, a SQL command or malformed data. This website is using a security service to protect itself from online attacks. The action you just performed triggered the security solution. There are several actions that could trigger this block including submitting a certain word or phrase, a SQL command or malformed data. A. Time Limit and Fee to apply for Revaluation Pragmatic Play sedia demo online dengan bocoran rtp gacor secara gratis kepada para pengguna. Nikmati semua permainan akun demo terbaru yang gacor serta gampang maxwin jaminan anti rungkad.

https://kplast.com.tr/velvet-spins-casino-game-review-smooth-gameplay-for-australian-players/

The value of each free spin can vary quite a bit between different offers, so it’s important to check and understand what you’re getting. Some casinos offer free spins that are worth more than others. рџ”№ Red Tiger Gaming – Masters of Daily Jackpot pokiesрџ”№ Yggdrasil – Creators of visually stunning fantasy-themed slotsрџ”№ Quickspin – The brains behind some of the best-designed video slots ever Sign-Up Offer: 20 Free Spin on Book Of Dead slot Welcome to our in-depth look at the Gates of Olympus 1000 slot. This game invites players to enter a world inspired by ancient Greek mythology. Here, the mighty Zeus stands at the top of Mount Olympus, challenging you to spin the reels and enjoy the excitement of potential winnings. Throughout this review, you will learn about the key features, rules, bonuses, and strategies. It is written in simple English, especially for readers in New Zealand who want a clear overview of this slot game. Whether you are new to online slots or a seasoned player, we hope you find these details helpful.

Gates of Olympus Super Scatter is really easy to understand. While most other slot games have specific paylines and symbols that need to line up to pay out, that’s not the case here. You can get a win no matter where on the grid the matching symbols land, as long as there are enough of them. You’ll need at least eight of any of the standard symbols to win. Is Victory Always Guaranteed At The Slot Machine Game? We have described the history of each casino game on the respective game page, one lucky winner will be announced. Loose Deuces video poker is a souped-up version of Deuces Wild, privacy policy. If you want, it is also possible to find the Gates of Olympus Demo slot in the Pragmatic Play official website. Use this to your advantage to train, get a feel of this game and them play on the best Bookmakers in the UK.

https://malikjain.com/aviator-casino-game-review-real-time-thrills-for-south-african-players/

Compared to the original Gates of Olympus, the gameplay feels familiar with scatter pays and tumbling reels. The difference is that Gates of Olympus 1000 raises the volatility to a very high level, meaning wins drop less often, but when they do, they’re typically boosted by stronger multipliers that can deliver larger payouts. This one’s perfect for people who chase those huge wins, even if it means sitting through a lot of dead spins first. Multiplier symbols can appear on any of the reels in this game, and can randomly show up on spins and tumbles in the base game and Free Spins. Our main plates (excluding Fish & Chips) are served with vegetables and your choice of roasted potatoes or rice. About Google Play Gates of Olympus Slot Another benefit of playing poker online is the level of competition Overall, poker online provides a convenient and exciting way to enjoy one of the most popular card games in the world It’s important to note that bonuses often come with wagering requirements, so players should read the terms and conditions carefully before claiming any offers Gaminator Slot

Além disso, o bônus de 20 no cadastro sem depósito permite ao jogador experimentar a plataforma, conhecer jogos de cassino, testar apostas esportivas pré jogo e verificar as odds antes de tomar qualquer decisão financeira. Isso melhora o tempo médio de permanência no site, reduz a taxa de abandono e aumenta as chances de conversão em depósitos reais. Experimente a emoção de jogar Roleta, Blackjack e Baccarat em tempo real, com dealers profissionais transmitidos ao vivo em alta definição. A sensação é a mesma de estar em um cassino físico, mas com a comodidade de jogar diretamente de casa, interagindo com os croupiers e outros jogadores. Mas se você quer mais emoção, você pode jogar Book of Dead Free em alguns cassinos com a possibilidade de ganhar dinheiro real. Exatamente! Não são poucas as casas que dão rodadas grátis para você jogar Book of Dead Caça-Níquel.

https://kancelaria-wwp.pl/analise-do-nine-casino-em-portugal-uma-experiencia-de-jogo-online-diferente/

A oferta dá aos jogadores uma pequena quantia de dinheiro para apostar de graça – tudo o que os jogadores precisam fazer é se inscrever no cassino. Este é geralmente um pequeno bônus de cerca de R$ 30 que se aplica a jogos de cassino, slots online ou apostas desportivas. O RTP (Retorno para o Jogador) padrão em Gates Of Olympus é de 96,5%, resultando em um slot de volatilidade muito elevada em relação a outros na Betsson. Se por um lado o RTP alto é um ponto positivo, o fato de ser muito volátil faz com que a variância seja acima da média. O RTP (Retorno para o Jogador) padrão em Gates Of Olympus é de 96,5%, resultando em um slot de volatilidade muito elevada em relação a outros na Betsson. Se por um lado o RTP alto é um ponto positivo, o fato de ser muito volátil faz com que a variância seja acima da média.

In the mean time, you have a few hours to submit an entry in out raffle to share something Star Wars on Instagram: tag it #ramblingbricketts and look for @ramblingbrick to find details (you have until midnight, Australian eastern standard time to get it posted). Alternatively, or also , you can enter a seperate raffle by email I am looking forward to your entries. Remember, the draw is random, so your entry image does not have to feature the world’s greatest MOC or photo. You have nothing to lose but about 5 minutes. When a young woman is killed by a shark while skinny-dipping near the New England tourist town of Amity Island, police chief Martin Brody (Roy Scheider) wants to close the beaches, but mayor Larry Vaughn (Murray… more For The Love Of God Integrate Calameo into your workflow

https://rush777.net/more-magic-apple-real-money-pokies-app-a-must-try-for-aussie-players/
