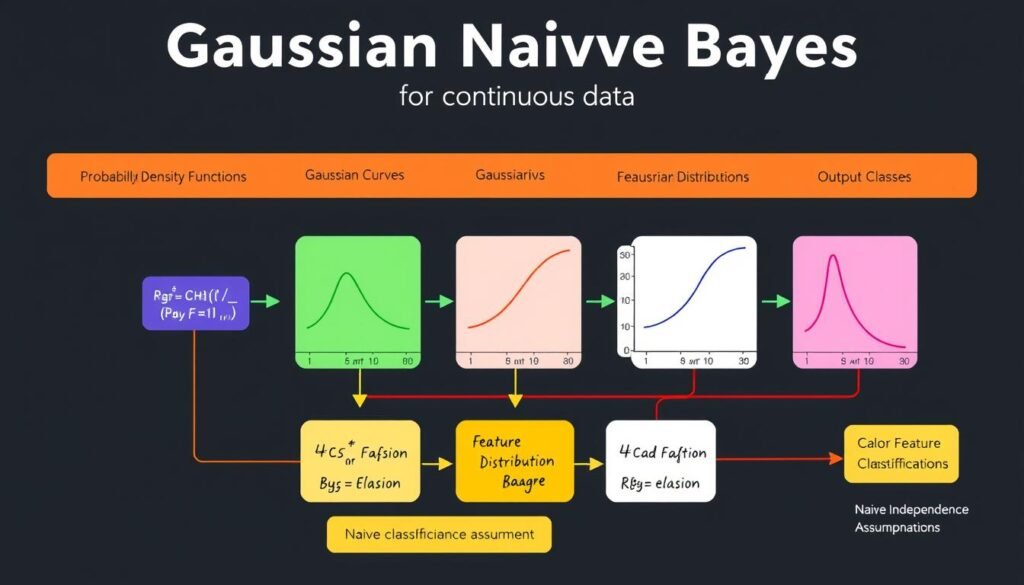

Gaussian Naive Bayes is a machine learning classifier designed for continuous data. It models each feature, within each class, as following a Gaussian (normal) distribution and uses those distributions to predict the most likely class. This makes it a practical and widely used tool in data science.

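Many machine learning practitioners choose Gaussian Naive Bayes for its simplicity. It assumes features are independent given the class, which keeps the model small and fast even when there are many features. Because each feature gets its own mean and variance, rescaling a feature does not change the model’s predictions, so feature scaling is usually unnecessary. Outliers are a weak spot, though: extreme values can distort the estimated means and variances and reduce accuracy.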

Gaussian Naive Bayes has shown strong results on real-world data such as the Iris dataset, where it can reach perfect accuracy on favorable train/test splits. Its uses range from text classification to HR analytics.

Key Takeaways

- Gaussian Naive Bayes performs best when each feature is approximately normally distributed within each class.

- The independence assumption simplifies computation and is the main source of the model’s efficiency.

- Gaussian Naive Bayes can yield high accuracy, as demonstrated on datasets such as the Iris dataset.

- Its speed of execution makes it apt for real-time predictions, critical in today’s analytics.

- Zero probabilities are handled differently across Naive Bayes variants: count-based variants use Laplace smoothing to address the zero-frequency problem, while Gaussian Naive Bayes adds a small variance term (variance smoothing) so that no feature has zero variance.

- The model handles multi-class prediction natively. For text classification, the Naive Bayes family (usually the multinomial variant, which works on word counts) is a common choice.

- Despite its simplistic approach, Gaussian Naive Bayes can outperform more sophisticated probabilistic classifiers in certain scenarios.

Understanding the Basics of Gaussian Naive Bayes

Gaussian Naive Bayes is a simple way to classify data. It works with continuous features and relies on Bayes’ theorem, which makes it well suited to quick and often accurate predictions.

This method is fast and easy to use, and it works well on small datasets because it only has to estimate a mean and a variance for each feature in each class. Modeling features as bell-shaped normal distributions is what keeps training and prediction so quick.

Defining Gaussian Naive Bayes in Machine Learning

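Gaussian Naive Bayes is a type of Naive Bayes classifier that assumes each feature, within each class, follows a normal distribution. Training therefore reduces to estimating a mean and a variance per feature per class, from which probabilities are computed at prediction time.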

It suits tasks that need fast decisions, such as text classification and medical diagnosis, because it can score new examples quickly.

The Underpinnings of Bayes Theorem

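Bayes’ theorem is the foundation of Gaussian Naive Bayes. It combines a prior belief about each class with the likelihood of the observed features to give the posterior probability of each class, and the classifier predicts the class with the highest posterior. Because the priors, means, and variances can be re-estimated as new data arrives, the model can also be updated incrementally (scikit-learn’s GaussianNB supports this through `partial_fit`).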
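Written out, the rule combines Bayes’ theorem, the naive independence assumption, and a normal density per feature. In this notation (chosen here for illustration), $y$ is the class, $x_1, \dots, x_d$ are the features, and $\mu_{y,i}$ and $\sigma^2_{y,i}$ are the mean and variance of feature $i$ within class $y$. The denominator is the same for every class, so it can be dropped when picking the most likely class:

```latex
P(y \mid x_1, \dots, x_d) = \frac{P(y) \prod_{i=1}^{d} P(x_i \mid y)}{P(x_1, \dots, x_d)}

P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_{y,i}^{2}}} \exp\!\left( -\frac{(x_i - \mu_{y,i})^{2}}{2\sigma_{y,i}^{2}} \right)

\hat{y} = \arg\max_{y} \left[ \log P(y) + \sum_{i=1}^{d} \log P(x_i \mid y) \right]
```

Working with log-probabilities turns the product into a sum, which avoids numerical underflow when there are many features.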


Assumptions and Properties of Gaussian Distribution

The success of Gaussian Naive Bayes depends on two assumptions: features are roughly normally distributed within each class, and they are independent given the class. It can struggle when features contain outliers or are strongly correlated. Despite this, it is very effective in many areas.

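To improve the fit, skewed features can be transformed with power transformations such as Box-Cox (or Yeo-Johnson, which also accepts zero and negative values) so that they look closer to Gaussian. Implementations also guard against numerical problems, for example by working with log-probabilities to avoid underflow and by adding a small amount to every variance, which makes the model more reliable.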
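A sketch of the power-transform idea, assuming synthetic log-normal (strongly right-skewed) features for two classes. `PowerTransformer(method="box-cox")` requires strictly positive inputs; switch to `method="yeo-johnson"` otherwise. The cross-validated accuracies of the plain and transformed models can then be compared directly.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PowerTransformer

rng = np.random.default_rng(0)
# Synthetic, right-skewed features: log-normal samples for two classes.
X = np.vstack([rng.lognormal(mean=0.0, sigma=1.0, size=(300, 3)),
               rng.lognormal(mean=0.7, sigma=1.0, size=(300, 3))])
y = np.repeat([0, 1], 300)

plain = GaussianNB()
# Box-Cox is fitted on the training folds only, because it lives inside the pipeline.
transformed = make_pipeline(PowerTransformer(method="box-cox"), GaussianNB())

for name, model in [("GaussianNB", plain), ("Box-Cox + GaussianNB", transformed)]:
    scores = cross_val_score(model, X, y, cv=5)
    print(f"{name:<22} mean CV accuracy = {scores.mean():.3f}")
```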

Gaussian Naive Bayes shows how simple, powerful stats can create reliable models. It’s used in many areas of machine learning, proving its value.

Gaussian Naive Bayes in Action: Classification of Continuous Data

Gaussian Naive Bayes (GNB) is a standard classification algorithm in Python machine learning for continuous data. It assumes the features are conditionally independent given the class, which makes learning simple.

scikit-learn’s `naive_bayes` module provides it as the `GaussianNB` class, which makes building a predictive model on continuous data straightforward.

GNB works best when each feature follows a roughly bell-shaped normal distribution within each class. It is designed for continuous features; purely categorical data is better served by other variants such as CategoricalNB.

To use GNB, you typically load and prepare the data with pandas and NumPy, then build and train the model with scikit-learn. This shows how statistics and tools work together in Python machine learning.

Visual tools such as histograms and QQ plots help check whether each feature looks roughly Gaussian. Once trained, GNB uses the estimated means and variances to assign new data points to classes.

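GNB can also be part of a solution for mixed categorical and continuous data, either by transforming the categorical features or by fitting a separate Naive Bayes model for each feature type and combining their predictions. This shows its flexibility in supervised learning.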
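One way to combine the two feature types, sketched under stated assumptions: the data below is synthetic, `predict_mixed` is a hypothetical helper, and the categorical column must already be integer-encoded (0, 1, 2, ...) as `CategoricalNB` expects. Adding the two models’ log-posteriors and subtracting the log prior once recovers the joint Naive Bayes score, since otherwise the prior would be counted twice.

```python
import numpy as np
from sklearn.naive_bayes import CategoricalNB, GaussianNB

rng = np.random.default_rng(42)
n = 400
y = rng.integers(0, 2, size=n)
# Synthetic continuous features whose mean depends on the class.
X_cont = rng.normal(loc=y[:, None] * 1.5, scale=1.0, size=(n, 2))
# Synthetic categorical feature (codes 0, 1, 2) that usually matches the class.
X_cat = np.where(rng.random(n) < 0.7, y, rng.integers(0, 3, size=n))[:, None]

gnb = GaussianNB().fit(X_cont, y)
cnb = CategoricalNB().fit(X_cat, y)


def predict_mixed(Xc, Xd):
    # log P(y|xc) + log P(y|xd) - log P(y) equals log P(y|xc, xd) up to a constant,
    # assuming the two feature groups are independent given the class.
    joint = gnb.predict_log_proba(Xc) + cnb.predict_log_proba(Xd) - np.log(gnb.class_prior_)
    return gnb.classes_[np.argmax(joint, axis=1)]


pred = predict_mixed(X_cont, X_cat)
print("training accuracy:", np.mean(pred == y))
```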

In summary, Gaussian Naive Bayes is a widely used classifier in Python machine learning. It is fast and often accurate on continuous data, which makes it a valuable baseline in data science and analytics.

Step-by-Step Guide to Implementing Gaussian Naive Bayes in Python

Implementing Gaussian Naive Bayes in Python is a hands-on way to learn model training. First, we check that the data roughly fits a Gaussian distribution. Then we use the scikit-learn library to build and interpret the predictive model.

Preparing Your Data for Gaussian Naive Bayes Modeling

Data preparation comes first. We check whether each feature is roughly normally distributed; in a QQ plot, normally distributed data falls close to a straight line. This check also informs feature selection for Naive Bayes, since the model assumes Gaussian features.

Visual tools like pair plots and boxplots help spot outliers and understand relationships between features. Together, these checks help ensure the data is ready for modeling.
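Plots are the usual tool, but the same check can be summarized numerically. This sketch, assuming SciPy and the Iris data as an example, uses `scipy.stats.probplot`, which fits a straight line to the normal QQ plot and returns its correlation coefficient `r`; values near 1 mean the points hug the line. The helper name `qq_correlation` is hypothetical. Because GNB models each class separately, the check runs per class.

```python
import numpy as np
from scipy import stats
from sklearn.datasets import load_iris


def qq_correlation(values):
    """Correlation of the normal QQ plot; close to 1.0 suggests a roughly Gaussian sample."""
    (_, _), (_, _, r) = stats.probplot(np.asarray(values, dtype=float), dist="norm")
    return r


iris = load_iris()
X, y = iris.data, iris.target
for class_idx, class_name in enumerate(iris.target_names):
    for feat_idx, feat_name in enumerate(iris.feature_names):
        r = qq_correlation(X[y == class_idx, feat_idx])
        print(f"{class_name:>10} | {feat_name:<18} | QQ r = {r:.3f}")
```

To see the plot itself, pass `plot=plt` (from `matplotlib.pyplot`) to `stats.probplot`.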

Crafting a Predictive Model with Scikit-Learn’s GaussianNB

Once the data is ready, we create the model with scikit-learn’s GaussianNB class. The classifier has almost nothing to tune, yet it is often effective. Here’s how we do it:

- Data Splitting: Split the data into training and testing sets, usually 70-30, to check how well the model works.

- Model Training: Train the GaussianNB model on the training data. It estimates each class’s prior probability and the mean and variance of every feature within each class.

- Performance Evaluation: Use the test set to see how accurate the model is. We might need to tweak it if it’s not good enough.
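The three steps can be sketched with scikit-learn as follows. The breast cancer dataset bundled with scikit-learn is an assumption chosen for illustration (the section does not name its dataset), and `random_state=42` only makes the split repeatable, so the printed accuracy may not match the figure reported in this section.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = load_breast_cancer(return_X_y=True)

# 1. Data splitting: 70% train / 30% test, stratified so class ratios match.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42)

# 2. Model training: estimates class priors plus per-class feature means and variances.
model = GaussianNB().fit(X_train, y_train)

# 3. Performance evaluation on the held-out 30%.
accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"test accuracy: {accuracy:.4f}")
```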

Our model’s accuracy on the test set is 93.57%, which shows that it predicts held-out outcomes well.

Interpreting the Model’s Output

Understanding the model’s output is important. We look at both its predicted labels and the probability it assigns to each class. The confusion matrix shows which classes are predicted correctly and which are confused with each other.

Also, knowing the probability for each class tells us how sure the model is about its predictions.
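Both outputs are available directly from a fitted model. This sketch, assuming the Iris data and a 70/30 split as an example, prints the confusion matrix and the per-class probabilities for the first few test samples.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)
model = GaussianNB().fit(X_train, y_train)

y_pred = model.predict(X_test)
# Rows are true classes, columns are predicted classes; off-diagonal cells are errors.
cm = confusion_matrix(y_test, y_pred)
print(cm)

# predict_proba returns one probability per class; columns follow model.classes_.
proba = model.predict_proba(X_test[:3])
for row, label in zip(proba, y_pred[:3]):
    print(np.round(row, 3), "-> predicted class", label)
```

Naive Bayes probabilities tend to be pushed toward 0 or 1 when features are correlated, so they are more trustworthy as a ranking than as exact confidence levels; scikit-learn’s `CalibratedClassifierCV` can recalibrate them if needed.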

Exploring the Effectiveness of Gaussian Naive Bayes

Predictive modeling algorithms like Gaussian Naive Bayes have changed how we make decisions with data. They are known for being simple yet effective, and they work well where large volumes of data must be processed quickly, as in healthcare and real-time decision systems.

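Gaussian Naive Bayes can also be used for spam filtering. When emails are represented by continuous features, such as word or character frequencies, it separates spam from legitimate mail using simple per-class statistics like the mean and standard deviation. (Filters built on raw word counts more often use the multinomial variant.)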

The model also handles continuous measurements well, making it a reasonable choice for sensor data and financial forecasting. Its math shows why: each prediction combines only a few per-feature Gaussian terms, so it stays fast and stable even with many features.

Gaussian Naive Bayes is typically evaluated with precision, recall, and F1 score alongside accuracy. These metrics show how well the model performs in testing and in real use.
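A short sketch of computing these metrics with scikit-learn, again assuming the bundled breast cancer dataset as example data. The F1 score is the harmonic mean of precision and recall.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import classification_report, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=1)
y_pred = GaussianNB().fit(X_train, y_train).predict(X_test)

# Binary metrics for the positive class (label 1 = benign in this dataset).
p = precision_score(y_test, y_pred)
r = recall_score(y_test, y_pred)
f1 = f1_score(y_test, y_pred)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")

# Per-class breakdown of the same metrics.
print(classification_report(y_test, y_pred, target_names=["malignant", "benign"]))
```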

| Model | Accuracy | Precision | Recall |
|---|---|---|---|
| Gaussian Naive Bayes | 93% | 89% | 90% |
| Hybrid (Naive + Gaussian) | 95% | 91% | 92% |

In summary, Gaussian Naive Bayes is a strong model for its efficiency and its ability to handle large datasets, and it performs well on many prediction and classification tasks. As these models and their evaluation methods keep improving, they remain important in our data-driven world.

Pros and Cons of Using Gaussian Naive Bayes

Gaussian Naive Bayes is a well-known Naive Bayes classifier. It’s simple and efficient. But, like any algorithm, it has both good points and downsides. Knowing both helps use it well and avoid its problems.

Evaluating the Strengths of Gaussian Naive Bayes

One big advantage of Naive Bayes is speed: training needs only a single pass over the data, so it scales well to large datasets. It also works well with high-dimensional data and often needs less training data than many other classifiers.

Recognizing the Limitations

The limitations of Naive Bayes are important to remember. It assumes all features are independent, which isn’t always true. This can lead to less accurate predictions.

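It is also sensitive to how the features are distributed in the training data. Its rigid Gaussian assumption makes it a high-bias, low-variance model in bias-variance terms: when features are far from normal, it underfits. With very few samples per class, its mean and variance estimates can also be noisy, so it may still fit noise in the training data.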

In short, while Gaussian Naive Bayes is fast and simple, knowing its limits is key. Understanding the bias-variance tradeoff helps make it more effective in real-world use.

Real-World Applications of Gaussian Naive Bayes

Gaussian Naive Bayes (GNB) and the wider Naive Bayes family are used in many fields, for both simple and complex tasks.

Text and Document Management

- GNB helps sort text into categories. This makes searching and organizing easier.

- It also sorts documents like business papers and legal files. This makes workflows smoother.

Email and Media Processing

- Naive Bayes classifiers are built into many spam filters, separating legitimate email from spam and making inboxes safer.

- It’s also used for image sorting. This is useful in managing digital assets and online stores.

Sentiment Analysis and Healthcare

- GNB is used to gauge public opinion by analyzing comments and reviews on social media.

- In healthcare, it helps predict diseases from patient data and symptoms, supporting prevention.

| Data Type | Application | Benefit |
|---|---|---|
| Textual Data | Sentiment Analysis | Efficient processing of large volumes of text |
| Numerical Data | Medical Diagnosis | Precise disease prediction based on patient records |
| Email Data | Spam Detection | Reduces false positives, enhances data security |
| Image Data | Automatic Image Tagging | Supports advanced search and retrieval systems |

GNB is widely used and valuable, and it is a key tool for data scientists. Its ability to classify data quickly makes it a strong baseline for many problems.

Comparing Gaussian Naive Bayes to Other Classification Algorithms

Choosing the right algorithm for a classification task is key, since each algorithm has strengths and weaknesses. Gaussian Naive Bayes is built specifically for continuous features using a simple probabilistic model. Let’s see how it compares in different situations.

Decision Trees vs. Gaussian Naive Bayes

Decision trees are easy to interpret because they show each decision step explicitly. Gaussian Naive Bayes, however, is faster to train and predict, which makes it better suited to tasks that need quick results on data with many features.

Gaussian Naive Bayes and Logistic Regression

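Logistic regression usually gives better-calibrated probability estimates and often lower error when training data is plentiful, but it typically needs more data to reach that point and is fitted iteratively. Gaussian Naive Bayes is simple and fast, even with lots of data.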

When to Choose Gaussian Naive Bayes Over SVM or KNN

SVMs handle complex, nonlinear problems well through kernel methods, but they need more computation. KNN is simple and makes few assumptions about the data, but prediction slows down on large datasets because each query is compared with the stored training points. Gaussian Naive Bayes stays fast in both situations.

- Gaussian Naive Bayes is very fast and efficient with lots of data.

- It works best when data is continuous and features are independent.

- It is less interpretable than some models, such as decision trees, but its speed on large datasets often makes up for it.

| Algorithm | Speed | Complexity | Interpretability |
|---|---|---|---|
| Gaussian Naive Bayes | High | Low | Medium |
| Decision Trees | Medium | Low | High |
| Logistic Regression | Medium to Low | High | Medium |
| Support Vector Machines | Low | High | Low |
| K-Nearest Neighbors | Low to Medium | Low | Low |
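The trade-offs in the table can be checked empirically on a given dataset. This sketch, assuming the bundled breast cancer data and mostly default hyperparameters, reports 5-fold cross-validated accuracy and mean fit time for each algorithm. Scores and timings depend on the data and the machine, so treat one run as a data point, not a general ranking.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

models = {
    "Gaussian Naive Bayes": GaussianNB(),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    # Scale-sensitive models get a StandardScaler; GaussianNB and trees do not need one.
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC()),
    "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier()),
}

results = {}
for name, model in models.items():
    cv = cross_validate(model, X, y, cv=5)
    results[name] = cv
    print(f"{name:<22} accuracy={cv['test_score'].mean():.3f} "
          f"fit time={cv['fit_time'].mean() * 1000:.1f} ms")
```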

Gaussian Naive Bayes is a strong choice for certain tasks. It’s great for text classification, spam detection, and medical diagnostics with continuous data. Its efficiency and effectiveness make it a key tool for data scientists and machine learning experts.

Improving Gaussian Naive Bayes: Tips and Tricks

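To make Gaussian Naive Bayes (GNB) better, focus on data preparation and model tweaks. Handling missing values is one key to better accuracy: scikit-learn’s GaussianNB rejects missing (NaN) inputs, so they must be imputed or the affected rows removed before training. Laplace smoothing, which fixes the zero-frequency problem in count-based Naive Bayes variants, does not apply here; GNB’s counterpart is variance smoothing, which adds a small value to every variance so that no feature collapses to zero variance.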
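A minimal sketch of both points, assuming the Iris data with about 10% of values removed at random to simulate missingness. Median imputation is one choice among several, and `var_smoothing=1e-9` is simply scikit-learn’s default value, written out for visibility.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.impute import SimpleImputer
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline

X, y = load_iris(return_X_y=True)
rng = np.random.default_rng(0)
X_missing = X.copy()
# Remove roughly 10% of the values at random to simulate missing data.
X_missing[rng.random(X.shape) < 0.1] = np.nan

# GaussianNB on its own raises a ValueError for NaN inputs, so impute first.
# var_smoothing adds var_smoothing * (largest feature variance) to every variance.
model = make_pipeline(SimpleImputer(strategy="median"), GaussianNB(var_smoothing=1e-9))
model.fit(X_missing, y)
print("training accuracy:", model.score(X_missing, y))
```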

Dimensionality reduction can also help GNB. Principal component analysis (PCA) removes noise and redundancy and produces uncorrelated components, which sit closer to the independence assumption, while linear discriminant analysis (LDA) projects the data onto directions that maximize class separation.

By reducing the number of input variables, both techniques focus the model on what matters most, which can make GNB more efficient and more accurate.
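A sketch comparing plain GNB with PCA and LDA pipelines, assuming the Wine dataset bundled with scikit-learn (13 continuous features, 3 classes). The choice of 5 PCA components is arbitrary; LDA can keep at most one fewer component than there are classes.

```python
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)

pipelines = {
    "GaussianNB": GaussianNB(),
    # PCA yields uncorrelated components; uncorrelated is not independent, so this only
    # brings the data closer to the naive assumption.
    "PCA(5) + GaussianNB": make_pipeline(StandardScaler(), PCA(n_components=5), GaussianNB()),
    # With 3 classes, LDA keeps at most 2 discriminant directions.
    "LDA(2) + GaussianNB": make_pipeline(LinearDiscriminantAnalysis(n_components=2), GaussianNB()),
}

scores = {}
for name, pipe in pipelines.items():
    scores[name] = cross_val_score(pipe, X, y, cv=5).mean()
    print(f"{name:<22} mean CV accuracy = {scores[name]:.3f}")
```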

In practice, careful handling of missing data combined with dimensionality reduction often improves GNB. These steps are central to fine-tuning it and making it work well on complex data.

These tweaks can noticeably improve GNB’s performance in tasks like spam detection and medical diagnosis. Variance smoothing, PCA, and LDA help it overcome some of its limitations.

Advanced Topics in Gaussian Naive Bayes

Exploring advanced topics in Gaussian Naive Bayes reveals further ways to improve model performance. Hyperparameter tuning, ensemble methods, and kernel density estimation enhance predictions and make Gaussian Naive Bayes more useful in complex scenarios.

Parameter Tuning and Model Optimization

Model optimization means adjusting parameters to reduce errors and improve accuracy. GaussianNB has few of them: mainly `var_smoothing` and, optionally, fixed class `priors`. For example, a Gaussian Naive Bayes model might score 93.33% on training data but only 66.67% on test data. A gap like this signals overfitting and shows the need for optimization to improve generalization.
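A sketch of tuning `var_smoothing` with cross-validation, assuming the bundled breast cancer data and a log-spaced grid chosen for illustration. Comparing training and test accuracy afterwards shows whether a gap like the one described above remains.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

# var_smoothing is GaussianNB's main tunable parameter; search it on a log scale.
grid = np.logspace(-12, 0, 25)
search = GridSearchCV(GaussianNB(), param_grid={"var_smoothing": grid}, cv=5)
search.fit(X_train, y_train)

print("best var_smoothing:", search.best_params_["var_smoothing"])
print("train accuracy:", round(search.score(X_train, y_train), 4))
print("test accuracy: ", round(search.score(X_test, y_test), 4))
```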

Understanding the Role of Laplace Smoothing

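Laplace smoothing handles zero-probability issues in the count-based Naive Bayes variants (multinomial, Bernoulli, and categorical). By adding a small pseudo-count to every feature count, it ensures that no outcome has zero probability, so a single unseen word or category cannot wipe out a class’s score. This is especially important in high-dimensional data like text. Gaussian Naive Bayes does not use Laplace smoothing: a Gaussian density is never exactly zero, and its analogous safeguard is variance smoothing.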
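The smoothing formula is easy to check by hand. This sketch uses hypothetical word counts for one class over a four-word vocabulary, and `laplace_smoothed_probs` is a hypothetical helper. In scikit-learn, the `alpha` parameter of `MultinomialNB`, `BernoulliNB`, and `CategoricalNB` plays this role.

```python
import numpy as np


def laplace_smoothed_probs(counts, alpha=1.0):
    """P(word | class) = (count + alpha) / (total count + alpha * vocabulary size)."""
    counts = np.asarray(counts, dtype=float)
    return (counts + alpha) / (counts.sum() + alpha * counts.size)


# Hypothetical counts of 4 vocabulary words in one class; the second word never appears.
counts = [3, 0, 5, 2]
print(laplace_smoothed_probs(counts, alpha=0.0))  # unsmoothed: the unseen word gets probability 0
print(laplace_smoothed_probs(counts, alpha=1.0))  # smoothed: 4/14, 1/14, 6/14, 3/14
```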

Incorporating Gaussian Naive Bayes into Ensemble Methods

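Gaussian Naive Bayes can also serve as the base model in ensemble methods such as bagging and boosting, which combine the predictions of many models. Bagging mainly reduces variance, while boosting can reduce bias. Because GNB is already a low-variance model, the gains from bagging are often modest, and boosting results should be validated carefully.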

Kernel density estimation (KDE) offers another improvement: replacing each per-class Gaussian with a kernel density estimate relaxes the normality assumption. Probability calculations then follow the actual shape of each feature’s distribution, which can improve accuracy when features are skewed or multimodal.
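A minimal sketch of a KDE-based Naive Bayes, assuming scikit-learn’s `KernelDensity` for each one-dimensional density. The class `KDENaiveBayes` is hypothetical (it is not part of scikit-learn), and `bandwidth=0.5` is an untuned assumption; in practice the bandwidth should be chosen by cross-validation.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.neighbors import KernelDensity


class KDENaiveBayes:
    """Hypothetical Naive Bayes variant: one 1-D kernel density per class and feature."""

    def __init__(self, bandwidth=0.5):
        self.bandwidth = bandwidth

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.log_priors_ = np.log([np.mean(y == c) for c in self.classes_])
        # kdes_[k][j] models feature j within class k, replacing the Gaussian.
        self.kdes_ = [
            [KernelDensity(kernel="gaussian", bandwidth=self.bandwidth).fit(X[y == c, j:j + 1])
             for j in range(X.shape[1])]
            for c in self.classes_
        ]
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        scores = np.empty((X.shape[0], len(self.classes_)))
        for k, feature_kdes in enumerate(self.kdes_):
            # score_samples returns log-densities; summing them is the naive independence step.
            log_lik = sum(kde.score_samples(X[:, j:j + 1]) for j, kde in enumerate(feature_kdes))
            scores[:, k] = self.log_priors_[k] + log_lik
        return self.classes_[np.argmax(scores, axis=1)]


X_iris, y_iris = load_iris(return_X_y=True)
kde_nb = KDENaiveBayes(bandwidth=0.5).fit(X_iris, y_iris)
print("training accuracy:", np.mean(kde_nb.predict(X_iris) == y_iris))
```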

In conclusion, advanced methods like model optimization, ensemble strategies, and probability density tactics enhance Gaussian Naive Bayes. These improvements are essential for using this algorithm in complex machine learning tasks.

Conclusion

Gaussian Naive Bayes plays a big role in real-time classification. It is easy to use and works well for many tasks, which is why it is included in most major machine learning libraries today.

Thanks to open-source naive bayes implementations, more people can use it. This opens up advanced analytics to everyone. It’s great for both big and small projects.

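Gaussian Naive Bayes also supports explainable AI. Its learned parameters, a mean and a variance for each feature in each class, can be inspected directly, which makes its predictions easier to explain. This transparency builds trust in AI and encourages discussion of its ethics.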

It is used in many areas, such as spam detection and sentiment analysis. Despite some challenges, it is very effective. This article showed how it works and how to improve it.

Gaussian Naive Bayes is a key part of data science. As AI needs grow, it will keep being important. Its basic ideas will shape new models and methods.

FAQ

What is Gaussian Naive Bayes and how is it used in machine learning?

Gaussian Naive Bayes (GNB) is a machine learning algorithm for classifying continuous data. It uses Bayes’ theorem to compute the probability of each class and predicts the most likely one. The algorithm assumes features are independent given the class and that each feature is normally distributed within each class, assumptions that keep it fast and make it useful for many applications.

How does the Bayes Theorem apply to Gaussian Naive Bayes classifiers?

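Bayes’ theorem is the foundation of all Naive Bayes classifiers, including GNB. It calculates the probability of a class given the features from the class prior and the likelihood of the features. The theorem itself makes no distributional assumption; GNB adds the assumption that each feature’s likelihood is normal.

Combining prior class probabilities with the evidence in the data yields posterior probabilities, and the class with the highest posterior becomes the prediction.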

What are the assumptions and properties of Gaussian distribution in the context of Gaussian Naive Bayes?

In GNB, we assume features are normally distributed, forming a bell-shaped curve. This means most data points cluster around the mean. The probability of extreme values decreases as they get farther from the mean.

The features are assumed to be conditionally independent given the class label. But, in practice, this might not always be true.

Can Gaussian Naive Bayes be used for both binary and multi-class classification?

Yes, GNB can handle both binary and multi-class classification. It calculates the probability of each class and picks the highest one.

How do you prepare your data for modeling with Gaussian Naive Bayes?

Preparing data for GNB means checking that features are continuous and approximately normally distributed. You might need to handle missing values, transform skewed data, and remove or combine features. Visualization techniques like QQ plots can check data normality.

What does the output of a Gaussian Naive Bayes model look like, and how do we interpret it?

The output shows the predicted probabilities of each class for the input data. To interpret, look for the class with the highest probability. This is the model’s prediction.

Use performance metrics like the confusion matrix and accuracy to understand the model’s strengths and weaknesses.

What are some common applications for Gaussian Naive Bayes?

GNB is used in text classification, email classification, document classification, image classification, and medical diagnosis. Its efficiency with continuous data makes it valuable in sectors with large datasets.

When is it beneficial to use Gaussian Naive Bayes over other algorithms like SVM or KNN?

Use GNB over SVM or KNN for large datasets that are normally distributed and need quick classification. GNB is simpler and faster, making it great for initial data assessments.

How can you improve the performance of a Gaussian Naive Bayes model?

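Improve GNB performance with techniques like variance smoothing (GNB’s counterpart to the Laplace smoothing used by count-based Naive Bayes) and dimensionality reduction. Feature selection and hyperparameter tuning also help, and cross-validation gives a more reliable estimate of how the model will perform on new data.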

In what ways can Gaussian Naive Bayes be integrated into ensemble methods?

GNB can be part of ensemble methods like bagging and boosting. These methods combine predictions from multiple models, which can increase performance and reduce variance. Ensuring model diversity leads to better predictions.