Reinforcement Learning Algorithms are at the core of many cutting-edge AI projects, driving advancements in robotics, gaming, finance, and beyond. Each algorithm offers unique strengths and is suited for specific types of problems. Understanding when and how to use them is essential for building intelligent, adaptive systems.

Key Takeaways

- Understanding the critical role of reinforcement learning algorithms in modern AI.

- Exploring diverse reinforcement learning applications in technology and research.

- Leveraging reinforcement learning with neural networks for advanced problem-solving.

- Recognizing the significant algorithms that revolutionize how machines learn and adapt.

- Prepping the stage for deeper dives into specific, innovative reinforcement learning techniques.

- Appreciating the blend of traditional and neural-based approaches in reinforcement learning.

Understanding Reinforcement Learning and Its Significance

Reinforcement learning is a key part of machine learning. It helps an agent make decisions by interacting with its environment. The agent uses rewards and penalties to improve its actions.

This field has grown a lot, thanks to model-free reinforcement learning and model-based reinforcement learning. It’s now used in robotics and finance, showing its wide range of uses.

There are two main types of reinforcement learning. Model-free reinforcement learning doesn’t use a model of the environment. It learns from rewards directly. On the other hand, model-based reinforcement learning creates a model to predict outcomes and make decisions.

For those wanting to try it out, reinforcement learning in Python offers tools and libraries. Python is easy to use and has a big community, making it great for learning reinforcement learning.

The OpenAI gym tutorials are key for learning reinforcement learning. They offer a place to practice and learn different algorithms. It’s a way to connect theory with practice.

| Feature | Model-Free RL | Model-Based RL |
|---|---|---|
| Dependency on Model | No | Yes |
| Sample Efficiency | Typically lower | Typically higher |
| Complexity | Lower | Higher |
| Example Algorithms | Q-learning, SARSA | Dyna-Q, AlphaGo |

By diving into reinforcement learning in Python and using OpenAI gym tutorials, you can improve your skills. This helps in solving problems in many real-world areas.

Foundations of Reinforcement Learning

We dive into key elements that set the stage for reinforcement learning success. We start with the balance between exploration and exploitation. Then, we explore Markov decision processes (MDPs) and the Bellman equations’ role.

Exploration vs. Exploitation

The exploration vs. exploitation dilemma is a core challenge in reinforcement learning. It’s about deciding whether to try new things or use what’s known to get rewards. Finding the right balance is key for the algorithm to perform well in different situations.
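One common way to strike this balance is an epsilon-greedy rule. The sketch below is a minimal illustration, not a prescribed implementation; the function name `epsilon_greedy` and its parameters are assumptions made for this example.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: any action, uniformly
    # exploit: index of the largest estimated value (first one on ties)
    return max(range(len(q_values)), key=lambda a: q_values[a])

estimates = [0.1, 0.5, 0.2]                            # hypothetical action-value estimates
greedy_action = epsilon_greedy(estimates, epsilon=0.0)  # epsilon 0 always exploits
```

A larger `epsilon` means more exploration. In practice it is often decayed over time so the agent explores early and exploits later.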

Markov Decision Process (MDP)

The Markov decision process (MDP) is essential for modeling decision-making scenarios. It outlines the environment with states, actions, rewards, and transitions. This framework is critical for applying value iteration and policy iteration to find the best policies.

Bellman Equation Fundamentals

The Bellman equation is central to solving MDPs. It breaks down the decision process into manageable parts. This equation is the foundation for value and policy iteration. These methods improve decision-making in reinforcement learning tasks.
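To make this concrete, here is a small sketch of value iteration, which applies the Bellman optimality backup repeatedly until the values stop changing. The two-state MDP, its rewards, and the name `value_iteration` are made up for illustration.

```python
def value_iteration(transitions, gamma=0.9, tol=1e-8):
    """transitions[state][action] is a list of (prob, next_state, reward)."""
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, actions in transitions.items():
            # Bellman optimality backup: best expected one-step return
            best = max(
                sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)
                for outcomes in actions.values()
            )
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values

# Hypothetical two-state MDP with deterministic transitions
mdp = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(1.0, "B", 1.0)]},
    "B": {"stay": [(1.0, "B", 2.0)], "go": [(1.0, "A", 0.0)]},
}
v = value_iteration(mdp)
```

In this toy problem, staying in B earns 2 per step forever, so its value approaches 2 / (1 - 0.9) = 20, and the value of A approaches 1 + 0.9 × 20 = 19.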

Reinforcement Learning Algorithms

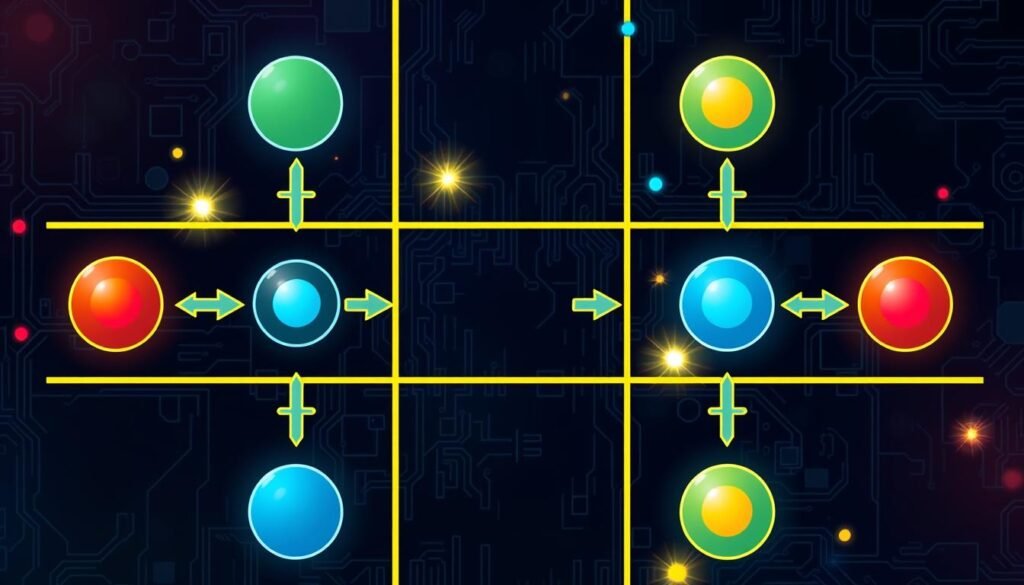

Exploring artificial intelligence, we find different ways to learn. We look at on-policy learning and off-policy learning. We also introduce key algorithms like the q-learning algorithm, policy gradient methods, and actor-critic algorithms.

On-Policy vs. Off-Policy Learning: On-policy learning evaluates and improves the same policy the agent uses to act. Off-policy learning improves a target policy using experience generated by a different behavior policy, which lets it reuse old or exploratory data.

- Q-Learning Algorithm: A key off-policy method. It learns the optimal action-value function regardless of which policy generated the experience.

- Policy Gradient Methods: These typically on-policy methods adjust the policy parameters along the gradient of expected return.

- Actor-Critic Algorithms: They mix policy and value function updates. The actor updates the policy, and the critic estimates the value of the actor's actions.
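As an illustration of the first item in the list, the tabular Q-learning update can be sketched as follows. The table layout (a dict of dicts), the state and action names, and the default hyperparameters are assumptions for this example.

```python
def q_learning_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """Move Q(state, action) toward reward + gamma * max over next actions."""
    best_next = max(q[next_state].values())  # greedy bootstrap: this is the off-policy part
    td_target = reward + gamma * best_next
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]

# Hypothetical two-state table
q_table = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 1.0, "right": 2.0},
}
new_value = q_learning_update(q_table, "s0", "right", reward=1.0, next_state="s1")
```

Here the target is 1.0 + 0.99 × 2.0 = 2.98, so the entry moves from 0 to 0.1 × 2.98 = 0.298. The update uses the best next action even if the agent goes on to take a different one.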

These algorithms are used in many areas. They help in automated trading and autonomous driving. They solve complex problems well.

| Algorithm Type | Learning Approach | Common Applications |
|---|---|---|
| Q-Learning | Off-Policy | Game AI, Navigation Systems |
| Policy Gradient | On-Policy | Robotics, Financial Trading |
| Actor-Critic | Hybrid | Healthcare, Advanced Robotics |

In summary, knowing how to use these algorithms is key. It helps create systems that work well and adapt to new situations.

Deep Q-Network (DQN) Explained

The Deep Q-Network (DQN) is a big step forward in combining classic reinforcement learning with deep learning. It uses a neural network to approximate action values, so Q-learning can scale to state spaces that are too large for a lookup table. This makes learning more effective and opens up new possibilities.

Experience Replay in DQN

Experience replay is a key part of DQNs. It stores the agent’s experiences in a buffer. Later, the DQN uses this data to learn better and avoid forgetting important information. This makes learning more stable and reliable.
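A replay buffer can be sketched with a bounded deque. This is a minimal illustration; the class name `ReplayBuffer`, its methods, and the transition layout are assumptions rather than any particular library's API.

```python
import random
from collections import deque

class ReplayBuffer:
    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop off first

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # uniform sampling without replacement from the stored transitions
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)

buffer = ReplayBuffer(capacity=3)
for step in range(5):                 # add 5 transitions to a buffer of size 3
    buffer.add(step, 0, 1.0, step + 1, False)
batch = buffer.sample(2)
```

After five additions only the three most recent transitions remain, and each sampled batch mixes transitions from different time steps.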

Target Networks for Stability

Target networks are another important part of DQNs. They are a static copy of the main network, updated periodically. This helps keep learning stable and prevents big swings in performance.
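The periodic update can be sketched as a conditional copy. In this toy version the "network" is just a dict of weights; the helper name `maybe_sync_target` and the interval of 100 steps are assumptions for illustration.

```python
import copy

def maybe_sync_target(online_params, target_params, step, sync_every=100):
    """Return a fresh copy of the online parameters every `sync_every` steps."""
    if step % sync_every == 0:
        return copy.deepcopy(online_params)
    return target_params  # otherwise keep the frozen copy

online = {"w": [0.0, 0.0]}
target = maybe_sync_target(online, None, step=0)      # initial copy
online["w"][0] = 5.0                                  # online network keeps training
target = maybe_sync_target(online, target, step=50)   # not a sync step: unchanged
```

Between syncs the target stays fixed, so the values the online network is trained toward do not shift with every gradient step.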

DQNs get even better with dueling network architectures. These models separate state values from action advantages. This makes learning more precise and efficient. Dueling networks help DQNs learn faster and better.
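The way a dueling head recombines its two streams can be sketched in a few lines. Subtracting the mean advantage is the commonly used aggregation; the function name and the numbers below are illustrative assumptions.

```python
def dueling_q_values(state_value, advantages):
    """Combine V(s) and A(s, a) into Q(s, a), centering the advantages."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]

q_values = dueling_q_values(2.0, [1.0, 0.0, -1.0])
```

Centering the advantages keeps the state value and the advantages identifiable: the Q-values average out to the state value.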

The Deep Q-Network (DQN) has changed the game in reinforcement learning. It shows how far we can go with function approximation. DQNs are not just a tool; they open doors to new possibilities and improve learning frameworks.

Policy Gradient Methods for Learning Policies

Policy gradient methods are a key part of reinforcement learning for control systems. They focus on improving the policy that guides the agent’s actions. This is great for complex environments with continuous or high-dimensional action spaces. Adding eligibility traces makes these methods even more effective.

Policy gradient methods change the policy smoothly, because each update nudges the policy parameters along an estimated gradient of expected return. Value-based methods, by contrast, can switch the greedy action abruptly after a small change in the value estimates. The trade-off is that policy gradient estimates tend to have high variance.
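A minimal sketch of one such update is the REINFORCE rule for a softmax policy over a handful of discrete actions. The function names, the learning rate, and the two-action setup are assumptions for this example.

```python
import math

def softmax(prefs):
    m = max(prefs)                              # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(prefs, action, ret, lr=0.1):
    """One gradient-ascent step on ret * log pi(action) for a softmax policy."""
    probs = softmax(prefs)
    # d log pi(action) / d prefs[i] = 1[i == action] - probs[i]
    return [
        p + lr * ret * ((1.0 if i == action else 0.0) - probs[i])
        for i, p in enumerate(prefs)
    ]

prefs = [0.0, 0.0]                              # start with a uniform policy
updated = reinforce_step(prefs, action=1, ret=1.0)
```

After a positive return for action 1, its preference rises and the other falls, so the policy shifts probability toward the rewarded action.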

Eligibility traces are vital in advanced reinforcement learning. They help track the agent’s path, adjusting credit for states and actions based on their distance from rewards. This leads to faster learning and better adaptation in dynamic environments.

- Direct optimization of policy

- Effectiveness in continuous action spaces

- Better convergence properties compared to value-based methods

- Improved learning speed through eligibility traces

Here’s a comparison of policy gradient methods and traditional value-based methods:

| Aspect | Policy Gradient Methods | Value-Based Methods |
|---|---|---|
| Focus | Directly optimizing the policy | Estimating value functions |
| Action Spaces | Efficient in continuous spaces | Commonly used in discrete spaces |
| Stability | Smooth policy changes, but high-variance gradient estimates | Can oscillate or diverge with function approximation |
| Implementation of Eligibility Traces | Used in actor-critic variants | Common in TD(λ) and SARSA(λ) |

Adding eligibility traces to policy gradient methods speeds up learning. It also helps handle the complexities of control systems. As these methods improve, they will play a bigger role in AI, making them essential for modern AI strategies.

Actor-Critic Algorithms: Best of Both Worlds

In the world of reinforcement learning, actor-critic algorithms are special. They mix policy-based and value-based methods. This blend helps solve tough problems in reinforcement learning for game AI.

Actor-critic methods have two parts: the actor and the critic. The actor decides what to do next based on a policy. The critic checks how good these actions are using a value function. This teamwork makes learning and adapting in changing environments better.

Understanding Actor and Critic Components

The actor in these algorithms works on finding the best policy. It aims to get the highest rewards in the future. The critic, on the other hand, looks at the actions taken. It uses the advantage function to see how good an action is compared to others.
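One simple advantage estimate is the one-step temporal difference error computed from the critic's value predictions. The sketch below assumes that choice; the function name `td_advantage` and the numbers are illustrative.

```python
def td_advantage(reward, value_s, value_next, gamma=0.99, done=False):
    """One-step advantage estimate: r + gamma * V(s') - V(s)."""
    bootstrap = 0.0 if done else gamma * value_next  # no bootstrap past a terminal state
    return reward + bootstrap - value_s

adv = td_advantage(reward=1.0, value_s=2.0, value_next=3.0, gamma=0.9)
```

A positive result means the action turned out better than the critic expected, so the actor makes it more likely. A negative result does the opposite.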

Advantages of Actor-Critic Algorithms

Actor-critic algorithms make learning faster and more effective. They are great at finding the right balance between trying new things and using what works. This is very useful for reinforcement learning for game AI, where things are complex and can change a lot.

| Feature | Impact on Learning | Relevance to Game AI |
|---|---|---|
| Continuous Learning | Adapts to changing environments | High |
| Policy Improvement | Refines decisions over time | Critical |
| Advantage Function | Enhances action evaluation | Essential |

Proximal Policy Optimization (PPO) and Its Effectiveness

In the world of reinforcement learning, Proximal Policy Optimization (PPO) is a big step forward. It helps improve policy models bit by bit. This makes training safer and more reliable, which is key for important tasks.

This section will explain how PPO works. We’ll compare it to Trust Region Policy Optimization (TRPO). We’ll also look at its role in safe reinforcement learning.

Safety in Policy Updates

Keeping policy updates safe is a big deal in reinforcement learning. PPO uses a method that limits how much the policy can change. This is similar to TRPO’s approach.

This limit helps avoid big drops in performance. It makes sure the learning process stays reliable, even when things change.

Clip Mechanism in PPO

The clip mechanism in PPO controls how much the policy can change. It keeps updates within a safe range. This prevents big changes that could mess up the learning process.
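For a single sample, the clipped surrogate objective can be sketched as below. Here `ratio` is the new policy's probability of the action divided by the old policy's, and `clip_eps=0.2` is a commonly used value assumed for this example.

```python
def ppo_clipped_objective(ratio, advantage, clip_eps=0.2):
    """Per-sample PPO surrogate: the minimum of the unclipped and clipped terms."""
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)

gain_capped = ppo_clipped_objective(ratio=1.5, advantage=1.0)    # capped at 1.2
loss_kept = ppo_clipped_objective(ratio=0.5, advantage=-1.0)     # stays at -0.8
```

Once the ratio leaves the interval [1 - clip_eps, 1 + clip_eps] in the direction that would increase the objective, the gradient vanishes, so there is no incentive to push the policy further from the old one.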

This mechanism helps keep performance steady. It also protects the algorithm from big risks that come with sudden policy changes.

| Feature | PPO | TRPO |
|---|---|---|
| Update Strategy | Clipping | Constraint Optimization |
| Stability Guarantee | Empirical (heuristic clipping) | Theoretical (approximate monotonic improvement) |
| Computational Cost | Lower | Higher |
| Implementation Complexity | Simpler | More Complex |

TRPO started the idea of safe policy updates. But PPO has made it better. It balances learning efficiency with ease of use.

PPO is great for many situations. It shows how important PPO is in safe reinforcement learning. It helps solve tough AI problems.

Temporal Difference Learning and SARSA Algorithm

Temporal difference learning is a key part of reinforcement learning. It learns directly from experience without needing a model of the environment. This method updates its estimates using other learned estimates, without waiting for a final outcome.

The SARSA algorithm, short for State-Action-Reward-State-Action, is an example of on-policy learning. It learns the value based on the policy it follows. This is different from off-policy methods like Q-Learning, where the learning policy can differ from the decision-making policy.

The SARSA algorithm updates the action-value function using the action that the current policy actually takes in the next state. An extension, SARSA(λ), adds eligibility traces to speed up learning. Traces keep recently visited state-action pairs eligible for updates for longer.
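The basic one-step SARSA update, without traces, can be sketched as follows. The table layout, the state and action names, and the default hyperparameters are assumptions for this example.

```python
def sarsa_update(q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.99):
    """Move Q(s, a) toward r + gamma * Q(s_next, a_next)."""
    td_target = r + gamma * q[s_next][a_next]  # uses the action actually chosen next
    q[s][a] += alpha * (td_target - q[s][a])
    return q[s][a]

# Hypothetical two-state table
q_table = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 1.0, "right": 2.0},
}
new_value = sarsa_update(q_table, "s0", "right", r=1.0, s_next="s1", a_next="left")
```

The target here is 1.0 + 0.99 × 1.0 = 1.99 because the policy chose "left" next. Q-learning would have bootstrapped from the larger value 2.0 instead, which is the practical difference between the on-policy and off-policy updates.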

In practical use, eligibility traces in SARSA are like reinforcing often-traveled pathways. This makes SARSA effective for policies that need to explore states over multiple steps. It enhances the learning process.

To understand the differences between SARSA and other temporal difference learning algorithms, check out this article on temporal difference learning algorithms. It provides a detailed look at both the theory and practical applications.

Temporal difference learning and the SARSA algorithm are key parts of machine learning strategies. For more information, see this overview at PulseDataHub. It’s great for professionals and enthusiasts looking to use advanced AI techniques.

In summary, using algorithms like SARSA with mechanisms like eligibility traces is beneficial. It helps create policies that balance exploration and exploitation well.

Monte Carlo Methods in Reinforcement Learning

Monte Carlo methods in reinforcement learning are key for better decision-making in uncertain environments. They use random sampling to guess outcomes. This way, decisions are based on real experiences, not just a model.

MC for Evaluating Policies

Monte Carlo policy evaluation estimates how good a policy is by averaging the returns observed over complete episodes. With importance sampling, it can also be used off-policy: you evaluate one policy using episodes generated by another, which lets you compare candidate policies without running each of them.
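A minimal on-policy sketch: compute the discounted return of each complete episode and average the returns from the start state. The reward sequences and function names are made up for illustration.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t for every step t of one complete episode."""
    g = 0.0
    returns = []
    for r in reversed(rewards):       # work backwards: G_t = r_t + gamma * G_{t+1}
        g = r + gamma * g
        returns.append(g)
    return returns[::-1]

def mc_value_estimate(episodes, gamma=0.9):
    """Average the start-state return over complete episodes."""
    starts = [discounted_returns(ep, gamma)[0] for ep in episodes]
    return sum(starts) / len(starts)

episodes = [[1.0, 0.0, 2.0], [0.0, 0.0, 1.0]]   # hypothetical reward sequences
estimate = mc_value_estimate(episodes, gamma=0.5)
```

With gamma = 0.5 the two start-state returns are 1.5 and 0.25, so the estimate is 0.875. No model of the environment is needed, only sampled episodes that run to termination.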

MC for Value Prediction

Monte Carlo methods also predict state and action values from sampled returns, without bootstrapping from other estimates. Those value estimates then inform the exploration vs. exploitation trade-off: the agent can weigh trying new actions against repeating the ones with the best observed returns.

Want to learn more about using these methods? Check out this guide on Monte Carlo methods in Python.

| Method | Application in RL | Benefits |
|---|---|---|
| Monte Carlo Simulation | Policy Evaluation | Does not require a model of the environment, useful in complex scenarios |
| Direct Sampling | Value Prediction | Balances exploration and exploitation, enhances learning accuracy |

Deep Deterministic Policy Gradient (DDPG) for Continuous Action Spaces

The world of reinforcement learning has grown thanks to deep deterministic policy gradient (DDPG). It’s great for dealing with continuous action spaces. This method combines policy gradient and Q-learning to improve policy and value function estimates in continuous domains.

DDPG is special because of its actor-critic design. It’s designed to handle the tough parts of continuous action spaces in reinforcement learning. Let’s look at what makes DDPG so effective.

Actor-Critic Structure of DDPG

DDPG uses two neural networks: the actor and the critic. The actor suggests actions based on the current state. The critic checks the action by calculating the value function. This setup makes learning in continuous action spaces more stable and efficient.

Smooth Policy Update Techniques

DDPG uses smart strategies to update policies. It uses a target network for stable learning. The soft updates of these networks make learning gradual and stable. This leads to a stronger reinforcement learning model.
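A soft update blends a small fraction of the online weights into the target weights at every step. In this sketch the weights are plain lists of floats, and the name `soft_update` and the value of `tau` are assumptions for illustration.

```python
def soft_update(target, online, tau=0.005):
    """Polyak averaging: target <- (1 - tau) * target + tau * online."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]

target_weights = [0.0, 1.0]
online_weights = [1.0, 1.0]
target_weights = soft_update(target_weights, online_weights, tau=0.1)
```

With a small `tau` the target network trails the online network slowly, which keeps the critic's learning targets from moving abruptly.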

With these advanced techniques, DDPG tackles the challenges of continuous action spaces. It’s become a key method in artificial intelligence.

Soft Actor-Critic (SAC) Algorithm for Optimal Policies

The Soft Actor-Critic (SAC) algorithm is a popular off-policy actor-critic method. It is built on maximum entropy reinforcement learning: it aims for high return while also maximizing the entropy of the policy, which encourages exploration. This makes policies robust and flexible across a variety of environments.

SAC is great at balancing exploration and exploitation. It keeps the policy uncertain, avoiding getting stuck in local optima. This allows for continuous exploration of new strategies. The algorithm works well with complex, real-world problems where actions are not just on or off.
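The idea of rewarding uncertainty can be sketched with an entropy bonus added to the reward. SAC itself is usually applied to continuous actions; the discrete distribution below, the function names, and the temperature `alpha=0.2` are simplifying assumptions for illustration.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def soft_reward(reward, probs, alpha=0.2):
    """Entropy-augmented reward: r + alpha * H(pi(. | s))."""
    return reward + alpha * entropy(probs)

uniform_bonus = soft_reward(1.0, [0.5, 0.5])   # uncertain policy earns a bonus
greedy_bonus = soft_reward(1.0, [1.0, 0.0])    # deterministic policy earns none
```

The temperature `alpha` sets how much the agent values keeping its options open relative to collecting reward.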

- Robust Decision-Making: SAC’s use of entropy makes decisions better under different conditions.

- Adaptability: It adapts well to complex environments, thanks to its exploration method.

- Efficiency: Because SAC is off-policy and reuses past experience, it is often more sample-efficient than on-policy methods.

SAC’s focus on entropy maximization boosts performance and makes policies more adaptable and efficient in complex, dynamic environments.

| Feature | Impact on Policy Optimization |
|---|---|
| Entropy Maximization | Boosts exploration, preventing early settling on suboptimal policies. |
| Continuous Action Space Support | Supports handling complex scenarios with finer control actions. |
| Sample Efficiency | Reduces data needed for optimal policies, saving time and resources. |

In summary, the Soft Actor-Critic algorithm is a key player in maximum entropy reinforcement learning and policy gradient methods. It creates optimal policies by balancing exploration and exploitation. This makes it a vital tool for advanced reinforcement learning models.

Conclusion

As we explore reinforcement learning, we see the power of Deep Q-Networks (DQN) and Soft Actor-Critic (SAC) algorithms. This field is full of possibilities for new ideas and uses. Whether you’re studying or working with TensorFlow reinforcement learning or PyTorch reinforcement learning, there’s much to discover.

The future of reinforcement learning is exciting, with new research and methods always coming up. For those interested, following benchmarks can show how algorithms perform and help improve them. This growth will lead to even more breakthroughs in the future.

The algorithms we’ve looked at are like guides in the complex world of reinforcement learning. They help us move forward, whether for learning or solving real problems. With tools like TensorFlow and PyTorch, we can make these ideas real. So, as we finish our journey through these techniques, we know there’s much more to explore in reinforcement learning.

FAQ

What are reinforcement learning algorithms and why are they important?

Reinforcement learning algorithms help machines learn from their actions. They get rewards or penalties for their choices. This is key for tasks like robotics and game AI.

They work with neural networks to solve complex problems. This makes them a big part of AI today.

What’s the difference between model-free and model-based reinforcement learning?

Model-free learning makes decisions based on rewards and actions. It doesn’t need to know the environment’s rules. On the other hand, model-based learning builds a model of the environment.

It then uses this model to plan and simulate outcomes. The choice between these depends on the task’s complexity and the information available.

How can I start practicing reinforcement learning in Python?

Start by learning Python libraries like TensorFlow or PyTorch. Also, get familiar with OpenAI Gym. It has environments for all skill levels.

What is the importance of exploration vs. exploitation in reinforcement learning?

Exploration is trying new strategies to see if they work. Exploitation uses known strategies for rewards. Finding the right balance is key.

A good agent explores enough but also gets short-term rewards. This balance is critical.

What is the Markov Decision Process (MDP) framework in reinforcement learning?

The MDP framework describes an environment in reinforcement learning. It outlines states, actions, rewards, and how states change. MDPs help frame the problem of learning to achieve a goal.

What are on-policy and off-policy learning in the context of reinforcement learning?

On-policy learning updates the policy it’s following, like SARSA. Off-policy learning learns from a different policy, like Q-learning. This affects how they update and gather data.

How does experience replay improve the efficiency of Deep Q-Networks (DQNs)?

Experience replay stores experiences in a buffer. It then randomly samples from this buffer to update the network. Random sampling breaks the correlation between consecutive experiences and lets each experience be reused several times, including rare ones.

It leads to more stable and efficient learning.

What makes actor-critic algorithms different from other reinforcement learning approaches?

Actor-critic algorithms combine policy-based and value-based methods. They have an ‘actor’ for choosing actions and a ‘critic’ for evaluating them. The critic’s feedback lowers the variance of the actor’s updates, which often makes learning faster and more stable than with a pure policy gradient method.

What is special about Proximal Policy Optimization (PPO) in reinforcement learning?

PPO is known for its effectiveness and simplicity. It limits policy updates to maintain stability. Its clip mechanism prevents large updates, ensuring consistent learning.

How does the SARSA algorithm operate and what makes it unique?

SARSA updates the action-value function based on current and next actions. It’s an on-policy method, unlike Q-learning. This makes it unique in how it updates policies.

In which scenarios are Monte Carlo methods suitable within reinforcement learning?

Monte Carlo methods are good when the environment’s model is unknown. They use averages of complete returns for policy evaluation. They’re great for episodes with terminal states.

Why is Deep Deterministic Policy Gradient (DDPG) relevant for continuous action spaces?

DDPG is for continuous action spaces. It uses a deterministic policy for precise actions. Smooth updates ensure steady learning without big jumps.

What advantage does the Soft Actor-Critic (SAC) algorithm have in reinforcement learning?

SAC optimizes a balance between return and entropy. This encourages exploration. It leads to more robust and diverse performance, making it great for complex tasks.

Where can I find resources and communities to further my understanding of reinforcement learning?

For more knowledge, check out research papers and benchmark websites. GitHub has many communities. TensorFlow and PyTorch have great tutorials.

Online courses and practical projects offer hands-on learning. They’re all valuable resources.
