Logistic regression is a core tool for predictive modeling when the outcome is binary, such as yes or no. Unlike linear regression, which predicts continuous values, logistic regression uses the logistic function to estimate the probability of each outcome.

Logistic regression is fundamentally a probability model. It estimates the chance that an event occurs given a set of predictors, which suits real-world problems where the answer is uncertain rather than fixed.

The logistic function is special because it turns numbers into chances between 0 and 1. This is different from linear regression, which predicts numbers and uses a simple line to fit the data.

Logistic regression is very useful and can be used in many ways. It’s used in health, finance, and more. It helps make clear decisions by turning complex data into simple yes or no answers.

Key Takeaways:

- Logistic regression is a statistical model tailored for binary classification problems, unlike linear regression, which targets continuous outcomes.

- Through the logistic function, it converts log-odds into probabilities between 0 and 1, making it well suited to probability modeling.

- The model relies on maximum likelihood estimation, which provides a robust framework for parameter estimation.

- Logistic regression is highly versatile and can be adapted for multinomial and ordinal dependent variables.

- The technique is rooted in the principle of maximum entropy, which aims to infer as much as possible while making the fewest unwarranted assumptions.

- Its real-world applications span from making diagnostic predictions in healthcare to forecasting financial risks and consumer behavior.

Understanding the Fundamentals of Logistic Regression:

Logistic regression is a key method in predictive analytics and machine learning. It is designed for tasks with two possible outcomes, and it uses the logistic function to estimate the probability of each one.

What is a Logistic Function?:

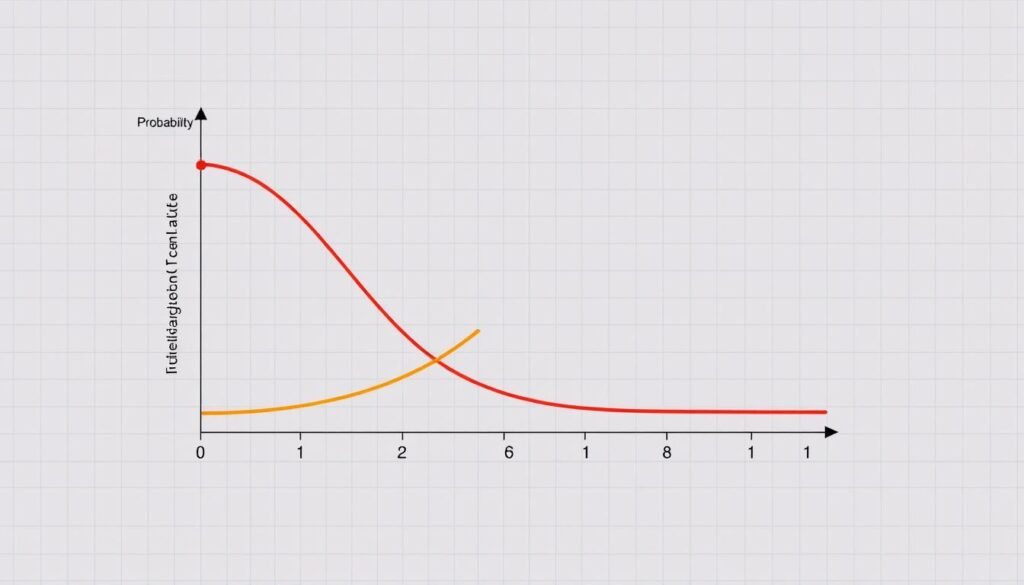

The logistic function is at the heart of logistic regression. It creates an S-shaped curve, also known as the sigmoid function. This curve turns any number into a value between 0 and 1. It’s perfect for tasks where you have only two choices, like “yes” or “no”. The function makes sure the output is always between 0 and 1. This fits well with binary choices.
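To make the S-shaped curve concrete, here is a minimal sketch of the logistic function in plain Python. The function name `sigmoid` and the sample inputs are illustrative choices, not something the article prescribes.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic (sigmoid) function: maps any real number to a value between 0 and 1."""
    # The two branches are algebraically identical; choosing by sign
    # keeps math.exp() from overflowing for large negative inputs.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)

# Large negative inputs approach 0, large positive inputs approach 1,
# and an input of 0 lands exactly in the middle at 0.5.
for z in (-4.0, 0.0, 4.0):
    print(f"sigmoid({z:+.1f}) = {sigmoid(z):.4f}")
```

An input of 0 maps to 0.5, which is why 0.5 is the usual default cutoff between the two classes.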

Key Components of Logistic Regression:

Logistic regression has several important parts. These include independent variables, the logistic function, odds and log-odds, coefficients, and maximum likelihood estimation. Together, they help the model make predictions and classify data into two groups.

Basic Mathematical Concepts:

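The math behind logistic regression is based on the logit transformation. The logit is the natural logarithm of the odds, where the odds are the probability of an event divided by the probability that it does not occur. The logit connects the probability of an event to its predictors through a linear equation.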
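Written out, the relationship looks like this. The symbols are notation assumed here for illustration: $p$ is the probability of the event, $x_1, \dots, x_k$ are the predictors, and $\beta_0, \dots, \beta_k$ are the coefficients.

$$
\operatorname{logit}(p) = \ln\!\left(\frac{p}{1-p}\right) = \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k
$$

Solving for $p$ gives the logistic function applied to the same linear combination:

$$
p = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \dots + \beta_k x_k)}}
$$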

Logistic regression is often used in supervised learning for binary data. The process starts with preparing the data. Then the model is trained to find the best parameters using maximum likelihood. Lastly, the model is tested on data it has not seen.

Logistic regression is strong for modeling binary outcomes. It’s used for predicting customer churn or medical diagnoses. It provides a solid way to make predictions based on probabilities.

Linear Regression vs. Logistic Regression: Key Differences:

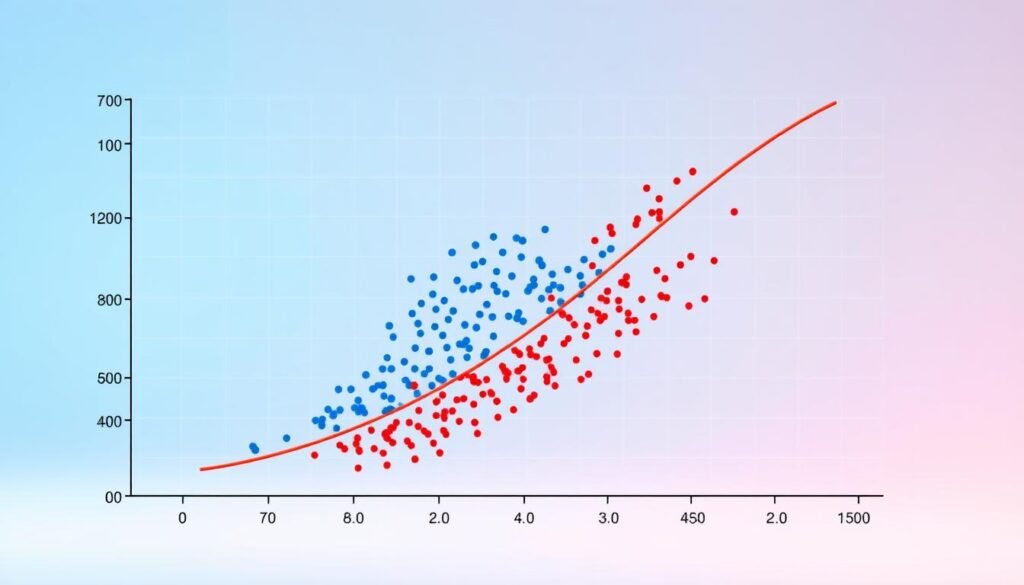

In the world of supervised machine learning, it’s important to know the difference between linear and logistic regression. Both are used for predictive modeling, but they work with different types of data. This leads to different results, depending on the nature of the outcome variable.

Continuous vs. Categorical Outcomes:

Linear regression is for continuous data. It predicts a continuous outcome from input variables. For example, it can forecast the temperature based on past weather data.

On the other hand, logistic regression is for categorical outcomes, like binary ones. It predicts if an email is spam or not. The output is a probability between 0 and 1, thanks to the logistic function.

Line of Best Fit vs. S-Curve:

Linear regression finds a straight line that best fits the data. It uses the least squares method, which minimizes the sum of squared errors. In contrast, logistic regression uses an S-curve to represent the probability of a binary outcome.

This S-curve is key in setting a decision boundary in classification algorithms.

Interpretation of Results:

The two models are interpreted differently. Linear regression directly predicts values, like house prices based on size and location. Logistic regression gives the probability of an event happening, like tumor malignancy based on medical inputs. Choosing between linear and logistic regression depends on understanding these differences.

The Mathematics Behind the Logit Model:

The core of logistic regression is the logit transformation. It maps probabilities to log-odds, and its inverse, the sigmoid function, maps log-odds back to probabilities. Understanding this pair is key to understanding how the model works in fields like medicine and marketing.

The sigmoid function is at the heart of this. It takes input values and turns them into outputs between 0 and 1. This is essential for predicting probabilities, like yes/no answers, in probability modeling.

Maximum likelihood estimation (MLE) is vital in the logit model. It finds the coefficients that maximize the likelihood of observing the data. Because logistic regression has no closed-form solution for these coefficients, they are found with an iterative numerical procedure.

MLE is a big help in logistic regression. It adjusts parameters to find the most likely outcomes. This makes it a strong tool for probability modeling.
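The quantity that MLE maximizes can be written explicitly. As a sketch, assume $n$ independent observations with labels $y_i \in \{0, 1\}$, predictor vectors $x_i$ that include a leading 1 for the intercept, and a coefficient vector $\beta$ (this notation is introduced here for illustration). The log-likelihood is then:

$$
\ell(\beta) = \sum_{i=1}^{n} \Big[ y_i \ln p_i + (1 - y_i) \ln (1 - p_i) \Big],
\qquad p_i = \frac{1}{1 + e^{-x_i^{\top}\beta}}
$$

Each term rewards the model for assigning a high probability to the outcome that actually occurred. Maximizing $\ell(\beta)$ is the same as minimizing the log loss (cross-entropy) commonly used in machine learning.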

Grasping the math behind logistic regression improves data analysis. It also makes predictions more accurate in many fields.

Types of Logistic Regression Models:

In supervised learning, logistic regression is a key tool for classification. It’s great for binary classification, multinomial classification, and predicting ordered outcomes. Let’s look at the different types of logistic regression models. They’re designed to handle different types of categorical responses well.

Binary Logistic Regression:

Binary logistic regression is the simplest type. It’s perfect for tasks with only two outcomes. This includes email spam detection and medical diagnosis, where the answer is yes or no, or true or false. The model finds the probability of each outcome and turns it into a binary result.
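The last step, turning a probability into a binary result, is a simple threshold. The sketch below assumes hypothetical spam probabilities and a function name `classify` chosen for illustration; 0.5 is a common default, not a requirement.

```python
def classify(probability: float, threshold: float = 0.5) -> int:
    """Return 1 when the predicted probability reaches the threshold, else 0."""
    return 1 if probability >= threshold else 0

# Hypothetical predicted probabilities that four emails are spam.
spam_probabilities = [0.05, 0.48, 0.50, 0.93]

default_labels = [classify(p) for p in spam_probabilities]
strict_labels = [classify(p, threshold=0.9) for p in spam_probabilities]

print(default_labels)  # [0, 0, 1, 1]
print(strict_labels)   # [0, 0, 0, 1]
```

Raising the threshold trades missed positives for fewer false alarms, so the right value depends on the cost of each kind of mistake.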

Multinomial Logistic Regression:

Multinomial logistic regression is for outcomes with more than two classes and no natural order. It suits tasks like predicting crop diseases or classifying documents. This model estimates the log-odds of each class relative to a reference class and converts them into one probability per class.
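One common way to turn per-class scores into probabilities is the softmax function. The article does not name it, so treat the following as an assumed formulation; the scores and the function name are hypothetical.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn one linear score per class into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max keeps math.exp() from overflowing
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three unordered classes, e.g. three document topics.
probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))  # index of the most probable class

print([round(p, 3) for p in probs], predicted_class)
```

With only two classes, softmax reduces to the ordinary logistic function, which is why binary logistic regression is a special case of the multinomial model.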

Ordinal Logistic Regression:

Ordinal logistic regression is for categories that have a natural order but whose gaps are not assumed to be equal. It's used in educational grading, service satisfaction, or disease stages. The model takes the ordering of the levels into account, which affects the predictions.

| Type of Model | Predictive Outcome | Common Use Case |
|---|---|---|
| Binary Logistic Regression | Binary (0 or 1) | Fraud detection |
| Multinomial Logistic Regression | Multiple classes (unordered) | Document categorization |
| Ordinal Logistic Regression | Ordered classes | Rating customer satisfaction |

Logistic regression models are key in supervised learning. They’re tailored for specific types of categorical data and needs. This shows their importance in various fields.

Understanding Probability and Odds Ratios:

Logistic analysis relies on probability modeling and odds ratios. Both are key to interpreting a logistic regression precisely. They help us understand data by focusing on how likely an event is.

Converting Probabilities to Odds:

It’s important to know how to switch from probabilities to odds. Probabilities range from 0 to 1, showing how likely something is to happen. Odds, on the other hand, compare the chance of an event happening to it not happening.

For example, a 0.75 probability means the odds are 3:1. This shows the event is three times more likely to happen than not.
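The conversion in both directions is a one-line formula. This small sketch uses function names chosen for illustration and reproduces the 0.75 example from the text.

```python
def probability_to_odds(p: float) -> float:
    """Odds = chance the event happens divided by chance it does not."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1); odds are undefined at p = 1")
    return p / (1.0 - p)

def odds_to_probability(odds: float) -> float:
    """Inverse conversion: probability = odds / (1 + odds)."""
    if odds < 0.0:
        raise ValueError("odds must be non-negative")
    return odds / (1.0 + odds)

print(probability_to_odds(0.75))  # 3.0, i.e. odds of 3:1
print(odds_to_probability(3.0))   # 0.75
```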

Interpreting Odds Ratios:

Odds ratios are a big deal in logistic analysis. They compare the odds of an event happening under different conditions. This is really useful for binary outcomes in logistic regression.

Consider an odds ratio of 3.71 comparing a standard treatment with a new one. It means the odds of a bad outcome with the standard treatment are 3.71 times the odds with the new treatment. This gives a clear measure of how the treatments compare.
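As a sketch of where such a number comes from: an odds ratio can be computed directly from two probabilities, or by exponentiating a fitted logistic regression coefficient. The probabilities below are hypothetical and are not the data behind the 3.71 figure.

```python
import math

def odds(p: float) -> float:
    """Odds of an event with probability p (assumes 0 <= p < 1)."""
    return p / (1.0 - p)

def odds_ratio(p_group_a: float, p_group_b: float) -> float:
    """Odds of the event in group A divided by the odds in group B."""
    return odds(p_group_a) / odds(p_group_b)

def coefficient_to_odds_ratio(beta: float) -> float:
    """A logistic regression coefficient is a log odds ratio, so exp() recovers it."""
    return math.exp(beta)

# Hypothetical: 40% bad outcomes under treatment A versus 20% under treatment B.
print(round(odds_ratio(0.40, 0.20), 3))

# A coefficient equal to ln(3.71) corresponds to an odds ratio of 3.71.
print(round(coefficient_to_odds_ratio(math.log(3.71)), 2))
```

An odds ratio above 1 means the event is more likely in the first group, below 1 means less likely, and exactly 1 means no difference in odds.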

Maximum Likelihood Estimation:

Maximum likelihood estimation (MLE) is essential for fitting logistic regression models. It picks the best parameter values (coefficients) for the model. This makes sure the model fits the data well.

Logistic regression is great for complex data with categorical outcomes. It uses MLE and odds ratios for better insights and predictions. This makes it a powerful tool for researchers and statisticians.

Model Evaluation and Goodness of Fit:

In logistic regression, a strong goodness of fit is key for reliable models. A good fit means the predicted probabilities agree with the observed outcomes. The Hosmer-Lemeshow test is a common way to check this, but it can be misleading with large samples, where even trivial deviations become statistically significant.

A modified version of the Hosmer-Lemeshow test has been proposed to behave more consistently across sample sizes. Instead of letting the test's power grow with the sample, it standardizes the power so that the result reflects lack of fit rather than sample size. Graphical methods and subsampling are also useful for large datasets, because they show how well the model fits.

Logistic regression is used in many areas, such as predicting cataract risk or assessing mortality in critical care. In applications like these, goodness of fit matters because the predicted probabilities inform real decisions.

A commonly suggested approach is to combine the modified Hosmer-Lemeshow test with graphical calibration checks. Together they cope better with large datasets and show how well the model is calibrated in practice.

| Method | Goodness of Fit Evaluation | Applicability by Sample Size |
|---|---|---|
| Traditional Hosmer-Lemeshow Test | May mislead in large samples | Less effective as sample size increases |
| Modified Hosmer-Lemeshow | Standardizes power, reducing false positives | Effective across varying sample sizes |
| Graphical Methods & Subsampling | Provides visual and statistical calibration | Highly effective in large datasets |

Using these model evaluation methods can improve logistic regression models. They make it easier to detect poor calibration, so predictions become more reliable.

Real-World Applications and Use Cases:

Logistic regression is very useful in many areas. It helps make predictions for binary classification. This is important for making decisions in different fields. Here are some examples:

Medical Diagnosis:

In medicine, logistic regression is key for predicting diseases. It looks at patient data to guess if someone might have a disease. This helps doctors plan early treatments.

Financial Risk Assessment:

Logistic regression helps banks predict whether someone will pay back a loan. This makes lending safer and more informed, and it supports the management of financial risk.

Marketing and Customer Behavior:

Marketers use logistic regression to predict what customers will do, such as whether they will buy a product. This helps them design better ads and products.

Social Sciences Research:

In social sciences, logistic regression is used to study things like voting and policy acceptance. It helps researchers understand broad trends and gives insights into how groups of people behave.

Here’s a table showing how logistic regression is used in each of these fields:

| Field | Use Case | Example |
|---|---|---|
| Medical | Disease Prediction | Predicting heart disease occurrence from patient biometrics. |
| Finance | Credit Scoring | Assessing creditworthiness to make loan approval decisions. |
| Marketing | Customer Segmentation | Predicting which users are likely to purchase a new product. |
| Social Science | Policy Analysis | Evaluating the acceptance rate of new government policies. |

Across these fields, logistic regression supports better decisions by grounding them in data, which makes processes more efficient and effective.

Common Challenges and Limitations:

Logistic regression is widely used but faces several challenges. These include overfitting, sample size, and multicollinearity. Knowing these issues helps in using logistic regression more effectively.

Overfitting Issues:

Overfitting happens when a model fits the training data too well but fails with new data. This often occurs with complex models and small datasets. To fix this, regularization like Lasso (L1) and Ridge (L2) is used. These methods reduce large coefficients in the model.

Sample Size Requirements:

The size of the sample is key for logistic regression to work well. A small sample can lead to unstable estimates and poor predictions, especially when the event of interest is rare or there are many predictors. Logistic regression has few parameters compared with more complex models, so it can still be usable on modest datasets, but having enough data remains essential for reliable predictions.

Multicollinearity:

Multicollinearity occurs when predictor variables are highly correlated with each other. This makes the coefficient estimates unstable and harder to interpret. To spot it, variance inflation factors (VIF) are used. It can be addressed by removing variables or using regularization.
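For predictor j, the VIF is 1 / (1 - R²), where R² comes from regressing that predictor on all the others. The sketch below covers only the special case of two predictors, where R² equals the squared Pearson correlation; the function names and data are hypothetical, and it assumes neither predictor is constant.

```python
import math

def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def vif_two_predictors(xs: list[float], ys: list[float]) -> float:
    """VIF when the model has exactly two predictors: 1 / (1 - r^2)."""
    r_squared = pearson_r(xs, ys) ** 2
    if r_squared >= 1.0:
        return math.inf  # perfectly collinear predictors
    return 1.0 / (1.0 - r_squared)

# Hypothetical predictors with a correlation of 0.8.
feature_a = [1.0, 2.0, 3.0, 4.0, 5.0]
feature_b = [2.0, 1.0, 4.0, 3.0, 5.0]
print(round(vif_two_predictors(feature_a, feature_b), 2))  # 2.78
```

A VIF of 1 means no correlation with the other predictor. Values above roughly 5 to 10 are often treated as a warning sign, though that cutoff is a convention rather than a strict rule.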

Here’s a table showing the challenges and how to solve them:

| Challenge | Impact | Mitigation Strategy |
|---|---|---|
| Overfitting | Model fails to generalize | Implement regularization (L1, L2) |
| Sample Size | Inaccurate estimates | Increase sample size, use robust techniques |
| Multicollinearity | Distorted estimates | Variable removal, regularization |

Implementing Logistic Regression in Machine Learning:

In supervised machine learning, logistic regression is key for solving binary problems. We’ll explore how to train the model, pick the right features, and use regularization techniques. These steps improve the logistic regression implementation.

Model Training Process:

The training process starts with splitting data into training and test sets. Numeric features are often scaled, and categorical features must be encoded as numbers before the model can use them. Then gradient descent comes into play. It repeatedly adjusts the model’s parameters in the direction that reduces the gap between predicted probabilities and actual labels, until the loss stops improving.
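The gradient descent step can be sketched in a few lines of plain Python. This is a minimal illustration for a single feature, not a production implementation; the toy data, learning rate, and epoch count are assumptions made for the example.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)

def train_logistic_regression(xs, ys, learning_rate=0.1, epochs=2000):
    """Fit weight w and bias b for one feature by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += error * x
            grad_b += error
        # Step against the average gradient of the log loss.
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b

# Hypothetical toy data: hours studied -> passed (1) or failed (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
passed = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic_regression(hours, passed)
print(f"P(pass | 1.0 h) = {sigmoid(w * 1.0 + b):.2f}")
print(f"P(pass | 3.5 h) = {sigmoid(w * 3.5 + b):.2f}")
```

In practice, libraries use faster optimizers than this plain loop, and a held-out test set is used to check the fitted model.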

Feature Selection:

Picking the right features is critical for logistic regression. It’s about choosing the most important variables that affect the outcome. This makes the model simpler, faster, and more reliable, avoiding overfitting problems.

Regularization Techniques:

To fight overfitting, regularization techniques like L1 and L2 are used. They add a penalty on the size of the model’s coefficients to the training objective, which discourages overly complex fits. This simplifies the model and often improves its accuracy on new data.
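To show what "adding a penalty" means, here is a sketch of a log loss with optional L1 and L2 terms. The parameter names `l1` and `l2` and the sample data are hypothetical, and leaving the bias unpenalized is a common convention assumed here.

```python
import math

def penalized_log_loss(weights, bias, rows, labels, l1=0.0, l2=0.0):
    """Mean log loss plus optional L1 (Lasso) and L2 (Ridge) penalties on the weights."""
    total = 0.0
    for features, y in zip(rows, labels):
        z = bias + sum(w * x for w, x in zip(weights, features))
        # Log loss for a label y in {0, 1}, written in terms of the score z:
        # log(1 + exp(z)) - y * z, with log(1 + exp(z)) computed stably.
        total += math.log1p(math.exp(-abs(z))) + max(z, 0.0) - y * z
    data_loss = total / len(rows)
    l1_penalty = l1 * sum(abs(w) for w in weights)  # pushes weights toward exactly zero
    l2_penalty = l2 * sum(w * w for w in weights)   # shrinks large weights smoothly
    return data_loss + l1_penalty + l2_penalty

# Hypothetical two-feature data and weights.
sample_rows = [[1.0, 2.0], [0.5, -1.0]]
sample_labels = [1, 0]
sample_weights = [2.0, -1.0]

print(penalized_log_loss(sample_weights, 0.0, sample_rows, sample_labels))
print(penalized_log_loss(sample_weights, 0.0, sample_rows, sample_labels, l2=0.1))
```

Because the penalty grows with the coefficients, an optimizer minimizing this objective prefers smaller weights unless larger ones clearly reduce the data loss.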

By following these steps, from gradient descent to feature selection and regularization techniques, we create a strong logistic regression implementation. It’s designed to predict outcomes with great accuracy.

Conclusion:

In the world of supervised learning, logistic regression is a key classification algorithm. It’s known for its important role in predictive modeling. It’s used for both binary and multinomial classification tasks.

Logistic regression uses a special function to model probabilities. This ensures the outputs are always between 0 and 1. We’ve seen how it differs from linear regression, focusing on categorical outcomes and having a unique S-curve.

Logistic regression is valued for its simplicity and wide use in fields like healthcare and finance. It’s well suited to binary classification. Its coefficients can be converted into odds ratios, which makes results easier to interpret.

But, it relies on certain assumptions and needs good data preparation. It’s also important to use the right metrics to check how well the model works. This includes the confusion matrix, ROC-AUC curve, and F1-score.

Using logistic regression involves careful steps. You need to check its assumptions, prepare your data well, and evaluate its performance. When done right, it’s a powerful tool for making smart decisions.

Even with its limitations, logistic regression is useful. It can handle both continuous and categorical inputs. It also gives clear probabilities as outputs. This makes it a reliable choice in data analysis.

FAQ

What exactly is logistic regression?

Logistic regression is a method used in machine learning for binary classification. It predicts the chance of an event happening based on several factors. It uses the logistic function to turn the log-odds into a probability, making it great for predicting outcomes.

How is logistic regression different from linear regression?

Logistic regression is for predicting yes or no answers, unlike linear regression which predicts numbers. It uses an S-shaped curve to model probabilities, perfect for classifying things.

What is a logistic function in logistic regression?

The logistic function, or sigmoid function, is an S-shaped curve. It changes the log-odds into probabilities between 0 and 1. This curve is key in logistic regression for classifying outcomes.

What are the key components of logistic regression?

Key parts of logistic regression include the logit (log-odds), odds ratio, and maximum likelihood estimation. These are important for understanding how predictors affect a binary outcome in supervised learning.

What are odds ratios, and how are they interpreted?

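Odds compare how likely an event is to happen with how likely it is not to happen. An odds ratio compares the odds of the event under two conditions, for example with and without a treatment. It tells us the strength and direction of the relationship between a predictor and the outcome, and it’s a simple way to express effect size in logistic regression.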

Can logistic regression handle more than two outcomes?

Yes, logistic regression can handle more than two outcomes. Extensions like multinomial and ordinal logistic regression allow for multiple categories. This shows logistic regression’s flexibility in different scenarios.

What is the role of maximum likelihood estimation in logistic regression?

Maximum likelihood estimation (MLE) is used to fit the logistic regression model. It chooses the coefficient values that make the observed data most probable under the model.

How can the performance of a logistic regression model be evaluated?

You can check a logistic regression model’s performance with metrics like accuracy and the area under the ROC curve. The Hosmer–Lemeshow test also helps assess whether the predicted probabilities fit the data well.

What is sample size’s effect on logistic regression models?

Sample size is very important in logistic regression. A small sample can lead to unreliable estimates and a model that doesn’t generalize well. Having enough data is essential for a good model.

How is logistic regression applied in the real world?

Logistic regression is used in many areas. It helps predict patient risks in medicine, creditworthiness in finance, customer behavior in marketing, and demographic effects in social sciences.

Can logistic regression be used for multi-class classification tasks?

Absolutely, logistic regression can be used for tasks with more than two categories. This makes it useful in many fields.

What techniques can be used to avoid overfitting in logistic regression?

To avoid overfitting, you can use L1 (Lasso) and L2 (Ridge) regularization. These methods reduce the model’s complexity and help it perform well on new data.

Why is feature selection important in implementing logistic regression?

Feature selection is important because it picks the most relevant predictors. Including only significant features makes the model simpler and less prone to overfitting.

What is a confusion matrix?

A confusion matrix is a table that shows how well a classification model is doing. It displays true positives, true negatives, false positives, and false negatives. It helps see how accurate the model is and how to improve it.
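As a small illustration, the four counts and the metrics derived from them can be computed as follows. The labels are hypothetical and the function names are chosen for this example.

```python
def confusion_counts(actual, predicted):
    """Count true positives, true negatives, false positives, false negatives."""
    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if a == 1 and p == 1:
            tp += 1
        elif a == 0 and p == 0:
            tn += 1
        elif a == 0 and p == 1:
            fp += 1
        else:  # actual is 1 but predicted 0
            fn += 1
    return tp, tn, fp, fn

def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1-score, returning 0.0 where a ratio is undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical true labels and model predictions for eight cases.
actual    = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]

tp, tn, fp, fn = confusion_counts(actual, predicted)
accuracy = (tp + tn) / len(actual)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
print(tp, tn, fp, fn)                   # 3 3 1 1
print(accuracy, precision, recall, f1)  # 0.75 0.75 0.75 0.75
```

Precision and recall matter most when the classes are imbalanced, where accuracy alone can look good even if the model rarely finds the positive class.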

What role does gradient descent play in logistic regression?

Gradient descent is an optimization algorithm that finds good parameters for the logistic regression model. It repeatedly adjusts the parameters in the direction that reduces the log loss, guiding the model’s training.
