K-Means Clustering is a key part of unsupervised learning in data science. It’s known for finding hidden patterns in data without labels. This guide will show you how it works, its uses, and the math behind it.

Key Takeaways:

- K-Means Clustering is a core technique in unsupervised machine learning for grouping data.

- The algorithm partitions data points into clusters by minimizing the distance between each point and its cluster's centroid.

- Essential for data science innovation, K-Means Clustering illuminates patterns in unlabeled datasets.

- It refines clusters by repositioning centroids, aiming for tight clusters that are well separated from one another.

- Results are most useful when points within a cluster are similar and points in different clusters are clearly different.

- K-Means is versatile, aiding diverse applications such as customer profiling and image segmentation.

- Familiarity with K-Means Clustering’s properties is pivotal for effective and insightful data partitioning.

Introduction to Clustering in Machine Learning:

Clustering in machine learning is a key part of unsupervised learning. It groups objects so that those in the same group are more alike than others. This method is used in many areas, like market analysis and document grouping, making it very useful.

The heart of clustering is the clustering algorithm. The K-Means algorithm is the most common. It finds and groups data points based on their similarities. You need to tell the algorithm how many clusters to look for, which affects the results.

K-Means scales well to big datasets because each iteration is simple. It starts by placing centroids randomly and then moves them until the assignments stabilize. Each step reduces the distance between the points in a cluster and that cluster's centroid.

Finding the right number of clusters is crucial. There are ways to estimate it, like the Elbow Method, which plots the total squared distance from points to their cluster centroids against the number of clusters and looks for the point where adding clusters stops helping much.

The table below shows some key things about K-Means clustering:

| Attribute | Description | Typical Values |
|---|---|---|
| Number of Clusters | Must be specified up front | Varies, often determined experimentally |
| Algorithm Type | Family the algorithm belongs to | Partitioning (centroid-based) |
| Distance Metric | How distance between points is measured | Euclidean in standard K-Means; other metrics lead to variants such as k-medians (Manhattan) |
| Applications | Domains where it is used | Data analysis, market segmentation, etc. |

In summary, using K-Means in machine learning shows the power of unsupervised machine learning. It helps find patterns in data, making it useful for many tasks in data analysis.

Defining K-Means Clustering:

The k-means clustering algorithm is a key centroid-based clustering method in data science. It divides data into k groups, each with a centroid. The centroid is the average of points in that cluster.

The Concept of Centroid-Based Clustering:

In centroid-based clustering, the k-means algorithm groups data into k clusters. Each cluster is centered around its mean, or centroid. This approach aims to reduce the distance between each point and its centroid, showing how well the points fit together.

Unsupervised Learning and Data Partitioning:

The k-means clustering algorithm is a prime example of unsupervised learning. It finds patterns without labels. By grouping similar data points, k-means helps uncover hidden structures in data.

This method of data partitioning is vital for handling large datasets. It offers deep insights for analysis and decision-making in many fields.

In summary, k-means clustering is central to machine learning. It highlights the need for effective data handling. It’s crucial for finding meaningful connections in complex data.

Exploring the K-Means Algorithm in Machine Learning:

The k-means algorithm in machine learning is key for unsupervised learning. It helps find patterns and group data without labels. It’s used in market segmentation and data sorting. Understanding k-means means knowing its iterative process. This process helps form clusters. The algorithm starts with K random centroids. Then, it assigns data points to the closest centroid. This creates the first clusters.

Next, the algorithm updates the centroids. It does this by finding the mean of points in each cluster. This keeps going until the centroids don’t change much anymore.

The k-means algorithm has its challenges. You need to choose the number of clusters (K) before you start. Also, the initial positions of the centroids can affect the results.

To reduce the effect of initialization, you can run the algorithm several times with different starting centroids and keep the run with the lowest within-cluster sum of squares. To choose K, you can use the Elbow Method.

| Challenge in K-Means Clustering | Potential Solutions |
|---|---|
| Predetermined number of clusters (K) | Use the Elbow Method or Silhouette Analysis to select K. |
| Sensitivity to initial centroid placement | Run several times with different initial centroids and keep the run with the lowest WCSS. |
| Locating the elbow | Plot within-cluster sum of squares (WCSS) against K and pick the 'elbow point' where the benefit of additional clusters diminishes. |
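As a rough illustration of the Elbow Method, the sketch below fits scikit-learn's `KMeans` for several values of K and records the WCSS, which scikit-learn exposes as `inertia_`. The synthetic dataset, the range of K, and the variable names are assumptions made for this example only.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic data with three well-separated groups (illustrative only).
X, _ = make_blobs(
    n_samples=300,
    centers=[[0, 0], [10, 10], [-10, 10]],
    cluster_std=1.0,
    random_state=0,
)

# Fit K-Means for K = 1..6 and record the within-cluster sum of squares.
wcss = {}
for k in range(1, 7):
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    wcss[k] = model.inertia_

# Improvement gained by going from K-1 to K clusters.
drops = {k: wcss[k - 1] - wcss[k] for k in range(2, 7)}

for k in range(1, 7):
    print(f"K={k}: WCSS={wcss[k]:.1f}")
```

On data like this, the improvement should shrink sharply after K=3, which is where the elbow sits. On real data the bend is often less obvious, so it helps to cross-check with a second criterion.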

Using the k-means algorithm helps data scientists find hidden structures in data. It’s a powerful tool for unsupervised learning. Its flexibility makes it very useful in machine learning.

K-Means Clustering and Its Applications:

K-means clustering is a key unsupervised learning method in machine learning. It plays a big role in data analysis and is used in many industries. This article looks at how K-means clustering is used in customer segmentation, image processing, and finding anomalies.

Customer Segmentation:

Customer segmentation is a well-known use of K-means. It helps businesses sort customers into groups based on attributes such as purchase history and behavior. This makes marketing more effective by targeting each group’s specific needs.

Companies use these segments to tailor their services, which can improve customer satisfaction and loyalty.

Image Segmentation and Classification:

In image processing, K-means is widely used for image segmentation. It groups pixels by color, which simplifies the image and makes it easier to separate objects from the background.

K-means can also support image classification by extracting features, such as dominant colors, for a downstream classifier. This is useful in fields like medical imaging and quality control.
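Grouping pixels by color can be sketched as follows. This is a minimal example on a tiny synthetic image, assuming scikit-learn's `KMeans`; the image contents, the choice of two clusters, and all variable names are made up for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)

# Build a tiny synthetic RGB image: left half reddish, right half bluish.
height, width = 20, 20
image = np.zeros((height, width, 3))
image[:, : width // 2] = [0.9, 0.1, 0.1]
image[:, width // 2 :] = [0.1, 0.1, 0.9]
image = np.clip(image + rng.normal(0, 0.03, image.shape), 0.0, 1.0)

# Treat every pixel as a 3-D point (R, G, B) and cluster the colours.
pixels = image.reshape(-1, 3)
model = KMeans(n_clusters=2, n_init=10, random_state=0).fit(pixels)

# Segment map: one cluster label per pixel.
segments = model.labels_.reshape(height, width)

# Simplified image: each pixel replaced by its cluster's mean colour.
quantized = model.cluster_centers_[model.labels_].reshape(image.shape)
```

Here `segments` is the segmentation mask and `quantized` is the simplified image with only two colors. A real photograph would usually need more clusters, and possibly pixel coordinates as extra features.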

Anomaly Detection in Data Analysis:

Anomaly detection is another major use of K-means clustering. Points that lie far from every centroid do not fit the usual structure of the data and can be flagged as unusual. This is very useful in finance and healthcare.

It helps make better decisions and improve security. K-means clustering is used in many areas, showing its value in finding important insights in complex data.
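One simple way to use K-means for anomaly detection is to score each point by its distance to the nearest centroid. The sketch below assumes scikit-learn's `KMeans`; the synthetic data, the three injected anomalies, and the 98th-percentile cutoff are arbitrary choices for illustration, not a recommended setting.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)

# Two dense groups of normal points plus three injected anomalies (made up).
normal = np.vstack([
    rng.normal(loc=[0, 0], scale=0.5, size=(100, 2)),
    rng.normal(loc=[8, 8], scale=0.5, size=(100, 2)),
])
anomalies = np.array([[4.0, -6.0], [-6.0, 4.0], [14.0, 2.0]])
X = np.vstack([normal, anomalies])

model = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)

# transform() returns the distance from each point to every centroid;
# the row minimum is the distance to the nearest centroid.
dist_to_centroid = model.transform(X).min(axis=1)

# Flag points whose distance exceeds the 98th percentile (arbitrary cutoff).
threshold = np.percentile(dist_to_centroid, 98)
flagged = np.flatnonzero(dist_to_centroid > threshold)
```

A percentile cutoff always flags a fixed share of the data, so in practice the threshold is usually tuned against known incidents or reviewed by a domain expert.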

The Mathematics Behind K-Means Algorithm:

The k-means clustering algorithm makes complex data analysis simpler. It focuses on reducing within-cluster variance. This is done by minimizing the sum of squared distances between points and their cluster centroids.

This basic idea helps us use and improve the algorithm. It’s key to understanding how it works.
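Written out, the quantity being minimized is the within-cluster sum of squares. The symbols below are notation introduced here for illustration: $K$ is the number of clusters, $C_j$ is the set of points assigned to cluster $j$, $x_i$ is a data point, and $\mu_j$ is the centroid of cluster $j$.

$$
J = \sum_{j=1}^{K} \sum_{x_i \in C_j} \lVert x_i - \mu_j \rVert^2,
\qquad
\mu_j = \frac{1}{|C_j|} \sum_{x_i \in C_j} x_i
$$

The second expression says that each centroid is the mean of its assigned points, which is the choice of $\mu_j$ that minimizes $J$ for a fixed assignment.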

K-means uses an iterative method to refine clusters. It starts with random centroids and assigns data points to the closest cluster. Then, it updates the centroids until they barely change. At that point the algorithm has converged, although only to a local optimum that depends on the starting centroids.

The power of k-means lies in this iterative approach: each assignment and update step can only lower, or leave unchanged, the total squared distance of data points from their respective centroids.

Here’s how the k-means clustering algorithm works in detail:

1. Start by picking K centroids randomly.

2. Then, assign each data point to the nearest cluster based on distance.

3. Update the centroids by averaging all points in each cluster.

4. Keep repeating steps 2 and 3 until the centroids stop changing much.
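The steps above can be sketched in a few lines of NumPy. This is a minimal teaching version, not a production implementation: the function name `kmeans`, its parameters, the stopping tolerance, and the demo data are all assumptions made for this example.

```python
import numpy as np


def kmeans(X, k, max_iter=100, tol=1e-6, seed=0):
    """Plain K-Means; returns (centroids, labels, wcss)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)

    def squared_distances(centroids):
        # Squared Euclidean distance from every point to every centroid.
        return ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)

    # Pick k distinct data points as the initial centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]

    for _ in range(max_iter):
        # Assign each point to its nearest centroid.
        labels = squared_distances(centroids).argmin(axis=1)
        # Move each centroid to the mean of its points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        shift = np.linalg.norm(new_centroids - centroids)
        centroids = new_centroids
        # Stop once the centroids have essentially stopped moving.
        if shift < tol:
            break

    # Final assignment and within-cluster sum of squares.
    dists = squared_distances(centroids)
    labels = dists.argmin(axis=1)
    wcss = dists[np.arange(len(X)), labels].sum()
    return centroids, labels, wcss


# Demo on two synthetic groups of 50 points each (illustrative data).
rng = np.random.default_rng(42)
X_demo = np.vstack([rng.normal(0, 0.5, (50, 2)), rng.normal(5, 0.5, (50, 2))])
centroids, labels, wcss = kmeans(X_demo, k=2)
```

Picking the initial centroids from the data points themselves is one common form of random initialization. Library implementations add refinements such as smarter seeding and multiple restarts.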

This process is more than just following steps. It shows how data can be efficiently analyzed. K-means is great for clustering real-world data, from images to detecting anomalies.

| Parameter | Description | Impact on Model |
|---|---|---|
| Number of Clusters (K) | Primary determination for the initial setup, impacting the granular division of data | Crucial for determining model accuracy and efficiency |
| Centroids initialization | Can be random or calculated through the k-means++ algorithm for better outcomes | Significantly affects the convergence speed and quality of the solution |
| Distance Metric | Mostly Euclidean, though other metrics can be used depending on the data | Influences clustering performance especially in high-dimensional spaces |

Understanding the derivation of k means algorithm improves its use. It also shows its value in different data analysis scenarios.

Preparing Data for K-Means Clustering:

Getting your data ready is key for k-means clustering success. Data preparation is often estimated to take 60–80% of a machine learning engineer's time, because it is both complex and important. You need to prepare your data well for the k-means algorithm to work well.

Feature Selection and Data Cleansing:

Choosing the right features is crucial. It means picking the most important ones for clustering. This avoids using data that’s not needed or useful.

Data cleansing improves data quality. It might include removing duplicates and handling missing values. This is important for k means to work well. It also helps deal with outliers and noisy data.

Normalization and Scaling of Data:

Normalizing and scaling data is essential. It makes sure all features are treated equally. This is because k means uses distances between points.

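Techniques like min-max scaling or Z-score standardization are used. Min-max scaling maps each feature to a fixed range such as 0.0 to 1.0, while Z-score standardization rescales each feature to zero mean and unit variance. Neither technique changes the shape of a feature's distribution; both only put features on comparable scales.

Scaling makes sure each feature has a similar influence on the distance calculation. K-means also works best when clusters are roughly spherical and of similar size, so clusters that violate these assumptions may be split or merged incorrectly.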
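The two scaling techniques can be sketched directly in NumPy. The helper names `min_max_scale` and `z_score` and the small age/income table are hypothetical and only serve to show the arithmetic.

```python
import numpy as np


def min_max_scale(X):
    """Rescale each column to the range [0, 1]."""
    X = np.asarray(X, dtype=float)
    lo, hi = X.min(axis=0), X.max(axis=0)
    # Avoid division by zero for constant columns.
    span = np.where(hi > lo, hi - lo, 1.0)
    return (X - lo) / span


def z_score(X):
    """Rescale each column to zero mean and unit variance."""
    X = np.asarray(X, dtype=float)
    mu, sigma = X.mean(axis=0), X.std(axis=0)
    # Avoid division by zero for constant columns.
    sigma = np.where(sigma > 0, sigma, 1.0)
    return (X - mu) / sigma


# Made-up rows of (age, yearly income): income would dominate raw distances.
X = np.array([[25, 30_000], [40, 90_000], [55, 60_000]])
X_minmax = min_max_scale(X)
X_standard = z_score(X)
```

Without scaling, the income column would contribute almost all of the Euclidean distance between two rows simply because its values are thousands of times larger.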

| Data Preprocessing Step | Description | Impact on K-Means Clustering |
|---|---|---|
| Feature Selection | Reduction of dimensions, selection of pertinent features | Enhances clustering efficiency by focusing on relevant features |
| Data Cleansing | Removal of duplicates, errors, and outliers | Reduces noise and improves data quality, leading to more distinct clusters |
| Normalization | Min-max rescaling of each feature to a uniform range (0.0 to 1.0) | Prevents feature dominance in distance calculations and improves cluster accuracy |
| Standardization | Z-score rescaling of each feature to zero mean and unit variance | Ensures equal weighting of features, crucial for distance-based algorithms like K-means |

Step-by-Step Implementation of K-Means Clustering in Python:

Exploring machine learning algorithms can be fascinating, and implementing k-means clustering in Python is a great starting point. The algorithm is known for its simplicity, yet it has subtleties that you’ll discover as you work on it.

The first step is getting the right libraries. Scikit-learn provides a ready-made, efficient implementation, while Pandas and NumPy handle loading and manipulating the data. Knowing your data well is also crucial. You might need to normalize it so that all features are on the same scale.

Next, you need to pick the number of clusters, ‘k’. This choice is key for the whole process. After that, the algorithm repeats two steps. First, it assigns data points to the closest cluster. Then, it updates the cluster centers based on the data points in each cluster.

| Step | Description | Tools/Functions Used |
|---|---|---|
| 1 | Import data and libraries | Pandas, NumPy |
| 2 | Select number of clusters (k) | Scikit-learn |
| 3 | Normalize data | StandardScaler |
| 4 | Initialize centroids | Random selection |
| 5 | Assign clusters | Euclidean distance calculation |
| 6 | Update centroids | Compute new means |
| 7 | Repeat until convergence | Iterative refinement |
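With scikit-learn, most of the steps in the table collapse into a short pipeline. The sketch below is one possible arrangement, assuming `StandardScaler` and `KMeans` from scikit-learn; the customer-style data (spend and visits) is invented for the example, and `init="random"` is set only to mirror the table, since scikit-learn's default is `"k-means++"`.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Hypothetical customer data: yearly spend (large scale) and visits per month.
spend = np.concatenate([rng.normal(500, 50, 60), rng.normal(5000, 300, 60)])
visits = np.concatenate([rng.normal(2, 0.5, 60), rng.normal(12, 1.0, 60)])
X = np.column_stack([spend, visits])

# Choose k, standardize, then let KMeans initialize, assign,
# update, and repeat until convergence.
pipeline = make_pipeline(
    StandardScaler(),
    KMeans(n_clusters=2, init="random", n_init=10, random_state=0),
)
labels = pipeline.fit_predict(X)

# Centroids live in standardized units; convert them back for interpretation.
centers_scaled = pipeline.named_steps["kmeans"].cluster_centers_
centers = pipeline.named_steps["standardscaler"].inverse_transform(centers_scaled)
```

Keeping the scaler and the model in one pipeline means new data passed to `pipeline.predict` is scaled with the same statistics that were learned during fitting.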

Working through k-means hands-on is a great way to start with data analysis. It teaches important concepts such as distance measures, iteration, and convergence, and it shows how machine learning works on real data.

In conclusion, k-means is great for handling big datasets and making complex data easier to understand. This process improves your Python skills and helps you understand how to group data strategically. It’s a step towards more complex analytical tasks.

Advancements and Variations in K-Means Clustering Techniques:

The field of clustering techniques has grown a lot. Refinements like K-means++ have emerged, and implementations are available in languages such as Python and R. These changes show how the k-means clustering algorithm keeps improving to tackle different data challenges.

K-Means++ for Improved Centroid Initialization:

K-means++ is an improved version of the standard k means clustering algorithm. It focuses on choosing the right starting points for the centroids. This makes the algorithm less dependent on random starts, reducing the chance of bad clustering.

This improvement in k means clustering makes the process more reliable and efficient. It’s a big step forward in data mining.
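The seeding idea behind K-means++ can be sketched as follows: the first centroid is a random data point, and each later centroid is drawn with probability proportional to its squared distance from the nearest centroid chosen so far. The function name `kmeans_pp_init` and its parameters are hypothetical, and the sketch assumes the data contains at least `k` distinct points.

```python
import numpy as np


def kmeans_pp_init(X, k, seed=0):
    """Choose k initial centroids from the rows of X, K-means++ style."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(X)

    # First centroid: a data point chosen uniformly at random.
    first = rng.integers(n)
    chosen = [first]

    # Squared distance from every point to its nearest chosen centroid.
    d2 = ((X - X[first]) ** 2).sum(axis=1)

    for _ in range(1, k):
        # Far-away points are more likely to become the next centroid;
        # already chosen points have distance 0 and cannot be picked again.
        idx = rng.choice(n, p=d2 / d2.sum())
        chosen.append(idx)
        d2 = np.minimum(d2, ((X - X[idx]) ** 2).sum(axis=1))

    return X[chosen]


# Demo: three distant groups (illustrative data).
rng = np.random.default_rng(1)
X_demo = np.vstack([rng.normal(c, 1.0, (30, 2)) for c in (0, 50, 100)])
seeds = kmeans_pp_init(X_demo, k=3)
```

The returned seeds replace the purely random starting centroids; the usual assign-and-update loop then runs unchanged.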

Implementing K-Means Clustering in R:

Using R for k-means clustering is attractive because R is flexible for statistics and graphics, which makes it well suited to exploring complex data. Thanks to platforms like GitHub, it’s easy to share and reuse Python and R implementations of k-means++ and other algorithms.

Improvements in k-means clustering also come from combining it with other methods. Better initialization such as K-means++ is especially helpful with big datasets, where rerunning the algorithm many times is expensive. This matters as data volumes keep growing; a commonly quoted estimate is over 2.5 quintillion bytes of data created every day.

Standard k-means uses Euclidean distance. Related variants work with other distances, such as k-medians with Manhattan distance, which adds flexibility for different kinds of analysis.

| Clustering Technique | How Clusters Are Seeded or Built | Common Applications |
|---|---|---|
| K-Means | Random or K-Means++ centroid initialization | Customer segmentation, Image compression |
| DBSCAN | Core point identification (no centroids) | Noise reduction, Anomaly detection |
| Hierarchical | Agglomerative merging (no centroids) | Genetic clustering, Species classification |

These advancements in k means clustering make it even more useful. They also help us get better insights from big, complex datasets.

Challenges and Solutions in K-Means Clustering:

One big challenge k-means clustering faces is its sensitivity to the starting centroids. This can greatly change the results. To fix this, the K-Means++ algorithm is used. It helps by spreading out the starting points before the usual algorithm kicks in.

Another problem is choosing how many clusters to have, known as k. It’s not always easy and can really affect the results. To figure out the best k, methods like the elbow method and silhouette score are used. They help look at the data in different ways to find the best number of clusters.

K-means clustering also struggles with outliers, because a few extreme points can pull a centroid away from the bulk of its cluster. To tackle this, you can detect and remove outliers before clustering. Projecting the data with techniques like PCA or t-SNE can make such points easier to spot. Alternatively, algorithms like DBSCAN or K-medoids are less affected by noise and outliers.

Here’s a quick summary of these challenges and ways to address them:

| Aspect | Challenge | Solution |
|---|---|---|
| Centroid Initialization | Random placement can lead to poor clustering | Use K-Means++ for better initial placements |
| Number of Clusters (k) | Requires a priori specification | Use the Elbow method or Silhouette Score for determination |
| Outliers | Sensitive and can skew results | Remove outliers beforehand, or use more robust algorithms like K-medoids or DBSCAN |
| Data Requirement | Needs continuous (numeric) variables | Encode or transform features during preprocessing |

Despite these challenges, K-means has many solutions that make it useful in unsupervised machine learning. It’s flexible and easy to understand, which is why it’s so popular among data scientists worldwide.

Optimizing K-Means Clustering Performance:

In data science, k means clustering is key for grouping data into useful clusters. We’ll look at how to pick the right number of clusters and check how well they work. This involves using clustering metrics to measure their quality.

Selecting the Optimal Number of Clusters:

Finding the right number of clusters is crucial. It makes sure the results are useful and meaningful. Methods like the elbow method or silhouette analysis help find the best ‘k’. This ‘k’ makes clusters that are clear and useful.

Evaluating Clustering Effectiveness with Metrics:

K means clustering can be judged with clustering metrics. These metrics give numbers to show how good the clusters are. Metrics like the silhouette coefficient and Calinski-Harabasz index help see how well clusters are formed.
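These metrics are available in scikit-learn as `silhouette_score`, `calinski_harabasz_score`, and `davies_bouldin_score`. The sketch below compares several values of k on synthetic data; the dataset, the range of k, and the choice to pick the best k by silhouette alone are assumptions for illustration.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import (
    calinski_harabasz_score,
    davies_bouldin_score,
    silhouette_score,
)

# Synthetic data with three well-separated groups (illustrative only).
X, _ = make_blobs(
    n_samples=300,
    centers=[[0, 0], [10, 10], [-10, 10]],
    cluster_std=1.0,
    random_state=0,
)

scores = {}
for k in range(2, 6):
    labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X)
    scores[k] = {
        # In [-1, 1]; higher means tighter, better separated clusters.
        "silhouette": silhouette_score(X, labels),
        # Ratio of between- to within-cluster dispersion; higher is better.
        "calinski_harabasz": calinski_harabasz_score(X, labels),
        # Average similarity of each cluster to its closest one; lower is better.
        "davies_bouldin": davies_bouldin_score(X, labels),
    }

# Pick the k with the highest silhouette coefficient.
best_k = max(scores, key=lambda k: scores[k]["silhouette"])
```

The three metrics do not always agree on real data, so it is worth looking at all of them rather than trusting a single number.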

Careful tuning, such as a well-chosen k and better centroid initialization, typically changes a clustering as follows:

| Feature | Before Optimization | After Optimization |
|---|---|---|
| Cluster Cohesion | Low | High |
| Cluster Separation | Poor | Excellent |
| Total Within-Cluster Variation | High | Significantly reduced |
| Iterations to Convergence | Many | Fewer |

By using these methods carefully, data becomes easier to understand. This leads to better decisions based on data. Every step in improving k means clustering helps us see data better, leading to better results.

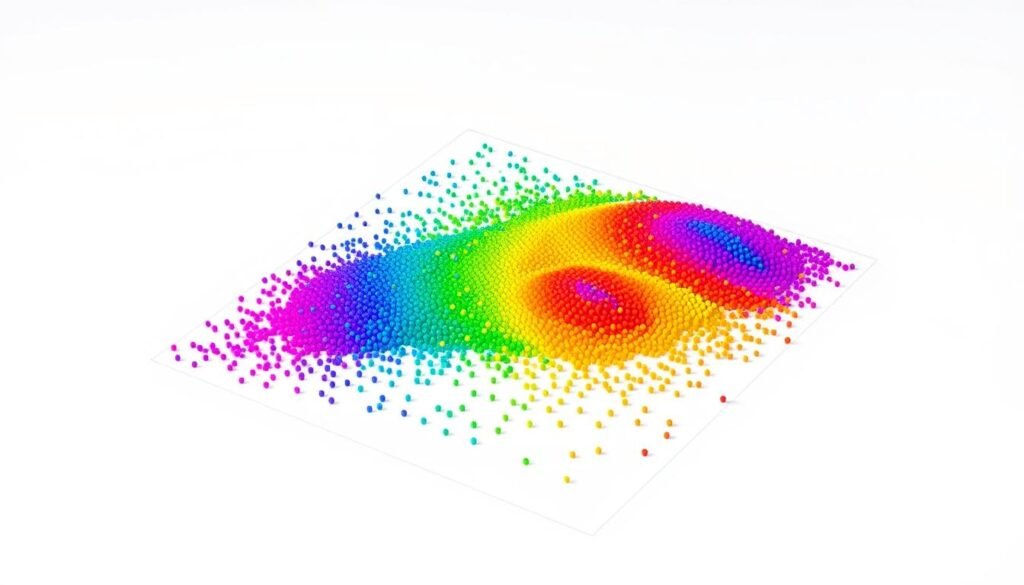

Visualizing Clusters in K-Means Machine Learning:

Understanding clusters is key to grasping machine learning’s power, especially in k-means clustering. This method helps in recognizing patterns and segmenting customers. It turns complex data into clear visuals, aiding in strategic decisions.

K-means clustering groups similar data points into clusters. This method is crucial for recognizing patterns and improving customer segmentation. It helps in creating targeted marketing strategies based on customer behavior and preferences.

Here’s how the visualization process unfolds:

- Initialization: The algorithm starts by picking a number of clusters (K). This step is vital as it sets the structure of the visualization.

- Assignment: Data points are assigned to the nearest cluster based on distance. Visual tools show these assignments, revealing initial patterns.

- Optimization: Centroids adjust based on the mean of points in their cluster. This refines the visual representation with each iteration.

- Convergence: The process repeats until the centroids are optimally positioned. This is then shown in detailed visualization charts.

Tools like PCA are used to simplify complex data. This makes the clusters clearer and easier to understand. These visuals are key to spotting patterns that might not be obvious from the raw data.
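As a sketch of how PCA supports visualization, the example below clusters five-dimensional synthetic data and projects it to two dimensions for plotting. It assumes scikit-learn's `PCA`, `KMeans`, and `StandardScaler`, plus matplotlib for the optional plot; the helper `plot_clusters` and the output file name are hypothetical.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Five-dimensional synthetic data that cannot be plotted directly.
X, _ = make_blobs(n_samples=200, n_features=5, centers=3, random_state=0)
X_scaled = StandardScaler().fit_transform(X)

labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X_scaled)

# Project onto the two directions of greatest variance for plotting.
pca = PCA(n_components=2)
coords = pca.fit_transform(X_scaled)
explained = pca.explained_variance_ratio_.sum()


def plot_clusters(coords, labels, path="clusters.png"):
    """Save a scatter plot of the 2-D projection, coloured by cluster."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.scatter(coords[:, 0], coords[:, 1], c=labels, s=15)
    ax.set_xlabel("PC 1")
    ax.set_ylabel("PC 2")
    fig.savefig(path)
    plt.close(fig)
```

The value of `explained` shows how much of the total variance the two plotted components retain. If it is low, clusters that overlap in the plot may still be well separated in the original space.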

Visualization also helps various stakeholders understand data without needing to know the technical details of machine learning.

K-Means Clustering in Hierarchical and Model-Based Methods:

K-Means Clustering is a top choice for its simple and effective way of grouping data. It works well with hierarchical and model-based methods. This mix helps uncover deep insights in unsupervised learning. In machine learning education, K-Means is a key method, making it easy to learn and apply.

Understanding how these methods work together can greatly improve data analysis. This knowledge helps in getting better results from data.

Comparison with Hierarchical Clustering:

Hierarchical and K-means clustering together offer a detailed view of data. K-Means starts with a set number of clusters, making it fast for big data. On the other hand, hierarchical clustering doesn’t need a set number of clusters. It creates a dendrogram that shows how groups are nested.

This method is great when you don’t know how many clusters there are. It’s also useful for seeing the detailed structure of clusters.

Integrating K-Means with Other Unsupervised Learning Methods:

Using K-Means with density-based methods like DBSCAN helps address its limitations. Hybrid methods, which combine K-Means with other techniques, handle different cluster sizes and densities better. This can lead to more accurate and clearer results.

By using different methods together, analysts can better understand their data. This approach gives a deeper look into the data’s patterns.

The Iris dataset is a common example of using K-Means and hierarchical methods together. K-Means partitions the flowers into groups based on their sepal and petal measurements. Hierarchical clustering shows the data’s nested structure through its dendrogram.

These examples from real-world data highlight the advantages of using these advanced techniques together.
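The Iris comparison can be reproduced roughly as follows. This sketch assumes scikit-learn's bundled Iris data and SciPy's `linkage` and `fcluster`; the choice of Ward linkage, three clusters, and standardized features are assumptions made for this example.

```python
from scipy.cluster.hierarchy import fcluster, linkage
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.metrics import adjusted_rand_score
from sklearn.preprocessing import StandardScaler

# 150 flowers, four measurements each, standardized before clustering.
X = StandardScaler().fit_transform(load_iris().data)

# K-Means needs the number of clusters up front.
kmeans_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)

# Ward linkage builds the full merge tree (the data behind a dendrogram).
Z = linkage(X, method="ward")

# Cut the tree afterwards to obtain at most three flat clusters.
hier_labels = fcluster(Z, t=3, criterion="maxclust")

# Agreement between the two partitions (1.0 means identical groupings).
agreement = adjusted_rand_score(kmeans_labels, hier_labels)
```

The merge tree `Z` can be drawn with `scipy.cluster.hierarchy.dendrogram(Z)`. Because the tree is built once, it can be cut at other heights to explore different numbers of clusters without refitting.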

| Method | Clusters Predefined | Visualization | Best Use Case |
|---|---|---|---|
| K-Means Clustering | Yes | Scatter plots | Large datasets with clear cluster separation |
| Hierarchical Clustering | No | Dendrogram | Data sets where the number of clusters is unknown |

Conclusion:

In this guide, we explored k-means clustering in depth. It’s a key part of unsupervised learning and data analysis. The k-means algorithm helps break down big datasets into smaller groups. This makes it easier to find patterns and get useful information.

Choosing good starting points and checking how well clusters fit are tough tasks. Techniques like K-Means++ initialization, Mini-Batch KMeans for large datasets, and PCA for dimensionality reduction help improve results. These steps make the algorithm more effective and flexible for different uses.

K-means clustering is used in many areas, like market research and image processing. It helps in finding new ways to understand data. As technology grows, so does the use of k-means clustering. It’s a powerful tool for finding patterns and improving how we work with data.

FAQ:

What is K-Means Clustering in Unsupervised Learning?

K-Means Clustering is a way to group data into K clusters without labels. It finds patterns in data by grouping similar points together. This helps machines understand data without knowing what it is.

How does the K-Means algorithm work?

The K-Means algorithm starts with K random points called centroids. It then groups data points with the closest centroid. After that, it updates the centroids based on the mean of each group. This keeps happening until the centroids don’t change much.

What are typical applications of K-Means Clustering?

K-Means Clustering is used in many ways. It helps in marketing by grouping customers. It’s also used in computer vision and to find unusual data patterns in different fields.

Why is data preparation important for K-Means Clustering?

Good data preparation is key for K-Means Clustering. It makes sure all data is useful and correct. This helps the algorithm to group data well and find meaningful insights.

What is the significance of normalization in K-Means Clustering?

Normalizing data is important. It makes sure all data points are treated equally. This prevents some data from being too dominant, leading to more accurate clusters.

What are some methods to determine the optimal number of clusters?

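To find the right number of clusters, you can use the elbow method or silhouette analysis. The elbow method plots the within-cluster sum of squares against the number of clusters and looks for the point where the curve flattens. Silhouette analysis checks how well each data point fits its own cluster compared with the nearest other cluster.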

What are the challenges of K-Means Clustering and how can they be addressed?

K-Means Clustering faces challenges like being sensitive to initial settings and needing to choose the number of clusters in advance. You can use K-Means++ for better starting points, and run the algorithm multiple times to avoid a poor local optimum. The elbow method or silhouette analysis helps choose the number of clusters. Outlier detection can also help.

How can the effectiveness of a K-Means Clustering model be evaluated?

You can check how well a K-Means model works with metrics like the silhouette coefficient. It measures how tight and separate clusters are. Other metrics like the Calinski-Harabasz and Davies-Bouldin indexes also help evaluate cluster quality.

Can K-Means Clustering be integrated with other machine learning techniques?

Yes, K-Means Clustering can work with other techniques. For example, you can use it with DBSCAN for different data densities. Dimensionality reduction, like PCA, can also help in understanding clusters better.

How does K-Means Clustering compare to hierarchical clustering?

K-Means Clustering divides data into a fixed number of clusters, which is good for big datasets. Hierarchical clustering doesn’t need a set number of clusters and shows data organization in a tree-like structure. It’s better for smaller datasets and those needing detailed relationships.

25 thoughts on “K-Means Clustering for Data Science Projects: A Practical Guide”

Looking for some chill time? em777casino ain’t bad. Try it out and see if it your lucky place! See if it your type here: em777casino

Yo, Filbetcasino, is legit! Been hitting some nice wins there lately. Smooth gameplay and quick payouts. Definitely worth checking out – filbetcasino

Normalerweise erhältst Du ein paar Spins

kostenlos und in einigen Fällen sogar echtes Geld, um nach der Registrierung zu spielen. Einer der häufigsten Boni, die

von Online Casinos angeboten werden, ist der Bonus ohne Einzahlung.

Du kannst zahlreiche Bonusangebote ohne Einzahlung entdecken und dich

auf eine stetige Bewegung des Marktes verlassen. Ein Bonus ohne Einzahlung ist besonders attraktiv für

mobile Nutzer, die gerne unterwegs spielen. Diese Casinos sind oft

weniger bekannt, können aber attraktive Bonusangebote bereitstellen,

um neue Kunden anzulocken.

Viele Spieler bevorzugen in diesem Zusammenhang die Freispiele als Angebot ohne Einzahlung.

Wie hoch die Chancen auf diese stehen, richtet sich vor allem nach

den geltenden Bonusbedingungen. Dies funktioniert so, dass

die Online Casinos Dich dazu auffordern, einen bestimmten Wert des Bonus ohne Einzahlung

zu setzen, um ihn zu erhalten. Wie bereits erwähnt, zahlst Du im Voraus kein eigenes Geld ein, um Zugang

zu einem Bonus im Online Casino zu erhalten.

References:

https://online-spielhallen.de/jet-casino-mobile-app-dein-spielvergnugen-unterwegs/

Mit rotem Lippenstift, Smokey Eyes und Co. darf ruhig großzügig

aufgetragen werden. Zudem könnt ihr eine lange Halskette oder eine geschickt platzierte

Brosche tragen. Mit einem schicken Kostüm, einer schönen Bluse oder einem zeitlosen Hosenanzug machen Ladies nichts falsch.

In vielen Spielbanken können Sneakers getragen werden,

aber es gibt auch Casinos, bei denen Turnschuhträger als zu lässig angesehen und daher abgewiesen werden.

Bei der Farbwahl ist es ratsam, auffällige oder grelle Töne

(kein Hawaii-Hemd) zu vermeiden. In diesem Fall sind Herren mit einem adretten Kleidungsstil und Damen in einem schicken Kleid bestens gekleidet.

Die Auswahl reicht von eleganten Cocktailkleidern bis hin zu stilvollen Abendkleidern, je nach Stil des Casinos.

Herren tragen Smoking und Fliege, Damen lange Abendkleider oder elegante Cocktailkleider.

Es gibt verschiedene casino dresscodes, die je nach Spielstätte und Anlass variieren. Casinos sind Orte, an denen Stil

auf Tradition trifft, und der casino dresscode

ist ein wichtiger Bestandteil dieses Charmes. Onlinespielcasino.de unterstützt den verantwortungsvollen Umgang

mit Glücksspiel. Eine ordentliche, nicht zu sportliche Alltagskleidung ist völlig ausreichend.

References:

https://online-spielhallen.de/alles-uber-lemon-casino-auszahlungen/

Mit ihren starken Wurzeln in der Tradition und der Umarmung

von Innovation bietet das Casino ein unvergleichliches Spielerlebnis für Besucher aus ganz Deutschland.

Aus dem Interesse an Casino Spielen und Poker entstand

ein Startup, das heute ein erfolgreiches Unternehmen im Glücksspiel-Bereich ist.

Den Bonus gewinnt man, wenn alle 52 Felder im Hauptspiel erreicht wurden; Gewinne der auf dem

Weg dahin liegenden Felder werden aufsummiert.

Diese Casinos unterliegen anderen Regulierungen und können ihre Dienste in der EU frei anbieten. Das nationale Glücksspielrecht lässt sich im Internet nur eingeschränkt

durchsetzen, wodurch Anbieter mit ausländischer Lizenz legale Alternativen schaffen. Ein gutes Casino ohne OASIS bietet eine breite Auswahl an Zahlungsmethoden, darunter Kreditkarten, Banküberweisungen und moderne E-Wallets.

References:

https://online-spielhallen.de/kings-casino-rozvadov-spiele-events-e1m/

Eligible only if at least one deposit is made on Monday.

Clubhouse Casino turns every day into a winning opportunity, giving punters fresh ways to boost their bankroll or enjoy

extra spins. The crypto version of the welcome bonus

is also fully available via the Clubhouse Casino app download via browser.

Don’t miss out on this limited-time offer —Join Clubhouse Casino

today and grab your welcome bonus! Whether you’re using an Android phone or an iPhone,

the entire site is fully optimized for smooth, fast, and fun gameplay.

So grab a cold one, sit back, and let’s take a proper look at what

makes Clubhouse the online casino worth checking out. From pokies that pop with colour to fast withdrawals and a generous welcome package — this

is where the good times begin. If you’re looking for a trusted online casino in Australia that actually feels exciting, fun,

and rewarding – Clubhouse Casino might just be your new favourite spot.

Scroll down and click “Help” to open the detailed FAQ section, covering everything from payments to bonuses.

References:

https://blackcoin.co/understanding-online-gambling-platforms/

It offers a comprehensive list of online casinos that cater specifically to cryptocurrency

users, ensuring a seamless and enjoyable gaming experience.

That said, all the international online casinos recommended on this

page offer hundreds of legitimate electronic slots that pay out real

money, and players only have to be 18 years

old to sign up. All the sites listed here are considered the best online casinos in FL, and each one

is reputable, time-tested, and has hundreds of thousands of

members from the state. Just remember, the legal landscape of online gambling is evolving across America,

so make sure you are aware of your rights and the regulations regarding online casinos in Minnesota.

What’s more, the new tower and lobby offer visitors a gracious entrance on the first floor and welcoming spaces on all patient floors.

Get comprehensive information about your care team, safety, comfort, and the hospital discharge process.

We are also home to the Comprehensive Stroke

Center and Ronald O. Perelman Center for Emergency Services,

where our emergency medicine specialists provide advanced care for adults and children. At NYU

Langone’s Tisch Hospital, our doctors, nurses, and other medical professionals use a team approach to provide

the highest level of care to people from New York City and around the world.

Meta-aggregation is a unique mechanism DexGuru uses to optimize trades and offer you the best

rates.

There are 36 gaming tables and 500 slot machines here at Christchurch casino.

The casino is open 7 days a week from 11am until 2am – dress code is smart.

Don’t forget to secure a room in the casino hotel.

Get ready to plan your casino holiday and be prepared to experience the high life.

We have a few friends in the online bingo industry that are clearly worth mentioning, especially for the United Kingdom

and the United States players. This was in part to help land

based gamblers easily qualify for major poker tournaments online

and from home, a trend that is gaining popularity

every day.

References:

https://blackcoin.co/stay-casino-australia-review-and-bonuses-2025/
