TensorFlow gives developers and researchers a practical way to build multi-layered neural networks, one of the most powerful techniques in modern machine learning. These networks, loosely inspired by the structure of the human brain, enable machines to identify patterns, interpret complex data, and make predictions.

What sets deep learning apart is its use of multiple layers, each learning to detect increasingly abstract features from the data. With TensorFlow, building and training these deep neural networks becomes more accessible and efficient, streamlining everything from model architecture to deployment.

In this article, we’ll guide you through the process of using TensorFlow for deep learning, covering foundational concepts and practical steps to help you construct and train your own neural network from scratch.

Key Takeaways

- Deep learning is a subset of machine learning focused on multi-layered neural networks.

- Neural networks are loosely inspired by the human brain and learn to process and analyze data.

- Multiple layers in a network enhance feature extraction and accuracy.

- TensorFlow simplifies the creation and training of neural networks.

- This article provides practical steps to build and train a neural network.

Introduction to Deep Learning with TensorFlow

TensorFlow has become a cornerstone in the world of machine learning. Its ability to simplify complex tasks makes it a favorite among developers and researchers. Whether you’re working on a small project or a large-scale deployment, TensorFlow provides the tools you need to succeed.

At its core, TensorFlow is designed to handle neural networks efficiently. These networks are the backbone of many modern applications, from image recognition to natural language processing. Models are expressed as layers of tensor operations, which TensorFlow can execute efficiently on CPUs, GPUs, and TPUs.

Before diving into building models, it’s essential to understand key terms. A datum refers to a single piece of information, while a model is the structure that processes this data. TensorFlow excels at managing both, making it a versatile choice for various projects.

One of TensorFlow’s strengths is its scalability. Whether you’re prototyping a new idea or deploying a full-scale solution, the framework adapts to your needs. This flexibility is why TensorFlow is widely used in both academic and industrial settings.

Understanding the underlying network structures is crucial before constructing a neural network. TensorFlow provides the resources to explore these structures, ensuring you can build models that are both efficient and effective.

Understanding Neural Networks and Their Architectures

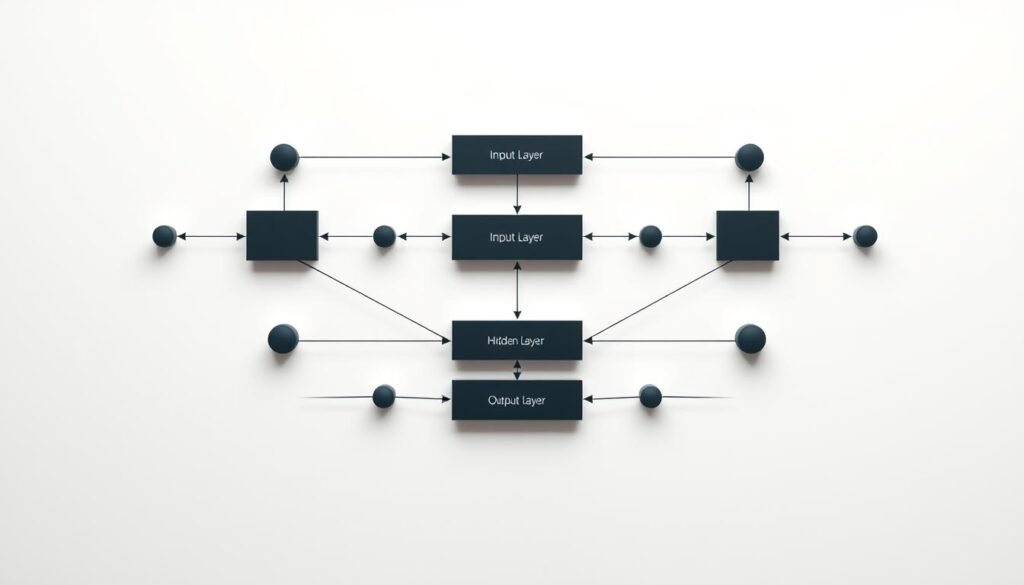

The architecture of neural networks plays a crucial role in solving advanced machine learning problems. These networks are designed with multiple layers, each contributing to the extraction of meaningful features from data. This layered structure is what makes them “deep,” enabling them to handle complex tasks like image recognition and natural language processing.

Early neural networks were simple, with only a few layers. They struggled with tasks requiring high levels of abstraction. Modern architectures, however, use dozens or even hundreds of layers. This depth allows them to learn intricate patterns and make accurate predictions.

A typical feedforward neural network consists of three main components: input, hidden, and output layers. The input layer receives raw data, while the hidden layers transform it through weighted connections. Finally, the output layer provides the result, such as a classification or prediction.

For example, in image recognition, the first hidden layer might detect edges, while deeper layers identify shapes and objects. This progressive feature extraction is what makes deep architectures so powerful.

| Aspect | Early Neural Networks | Modern Neural Networks |
|---|---|---|
| Number of Layers | Few (1-3) | Many (dozens to hundreds) |
| Feature Extraction | Basic patterns | Complex patterns |
| Applications | Simple tasks | Advanced tasks (e.g., image recognition) |

Understanding these architectures is essential for building effective models. Whether you’re working on machine vision or predictive analytics, the right structure can make all the difference.

Preparing Your TensorFlow Environment

Setting up your TensorFlow environment is the first step toward building powerful machine learning models. A properly configured computer ensures smooth integration and optimal performance for your projects. Whether you’re a beginner or an experienced developer, following these steps will help you get started quickly.

First, ensure your system meets the hardware requirements. For deep learning tasks, a GPU is highly recommended because it accelerates the matrix computations involved in training complex models. If you don’t have a GPU, TensorFlow still runs on a CPU, but training will be slower.

Next, install TensorFlow with pip, the package manager for Python, which is the primary language for machine learning. Use the following command to install the latest version:

pip install tensorflow

Complementary libraries like NumPy, Pandas, and Matplotlib are also essential. These tools help with data manipulation, analysis, and visualization. Install them alongside TensorFlow to create a robust development environment.

Here are some best practices to follow:

- Use virtual environments to manage dependencies and avoid conflicts.

- Regularly update TensorFlow and its libraries to access the latest features.

- Configure your GPU drivers and CUDA toolkit for optimal performance.

Common pitfalls include incompatible library versions and missing dependencies. If you encounter errors, check the TensorFlow documentation or community forums for troubleshooting tips.

Finally, verify your installation by running a simple test program. This ensures everything is set up correctly and ready for application development. A successful test confirms your environment is prepared for building and training neural networks.
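A simple test program of this kind might look like the sketch below. It only assumes that TensorFlow 2.x was installed with the pip command above; the variable names are illustrative.

```python
import tensorflow as tf

# Print the installed version to confirm the import works.
print("TensorFlow version:", tf.__version__)

# List the GPUs TensorFlow can see; an empty list means CPU-only execution.
gpus = tf.config.list_physical_devices("GPU")
print("GPUs detected:", len(gpus))

# Run a tiny computation end to end.
result = tf.reduce_sum(tf.constant([1.0, 2.0, 3.0]))
print("Sum:", float(result))
```

If the script prints a version number and a sum without raising an error, the core installation is working. A GPU count of zero is not a failure; it just means training will run on the CPU.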

Deep Learning Fundamentals: Concepts and Techniques

Understanding the core concepts of deep learning is essential for mastering its techniques. At its heart, deep learning relies on neural network architectures loosely inspired by the human brain. These architectures process data through multiple layers, extracting meaningful patterns and features.

One of the key components in deep learning is the activation function. It applies a non-linear transformation to a neuron’s weighted input, which is what lets the network learn complex relationships. Common activation functions include ReLU, Sigmoid, and Tanh, each suited for specific tasks.
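To make the three functions concrete, here is a minimal plain-Python sketch of each one applied to a single number. It is for illustration only; in practice TensorFlow provides optimized versions that work on whole tensors.

```python
import math

def relu(x: float) -> float:
    """Pass positive inputs through unchanged; clamp negatives to zero."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Squash any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x: float) -> float:
    """Squash any real input into the range (-1, 1)."""
    return math.tanh(x)

for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.3f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):.3f}")
```

ReLU is a common default for hidden layers, while Sigmoid is often used where an output should be read as a probability.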

Another critical concept is loss computation. The loss measures how well the model performs by comparing its predictions to the actual data. Loss functions like Mean Squared Error (MSE), typically used for regression, and Cross-Entropy Loss, typically used for classification, are widely used to optimize models.
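The two loss functions can be written out in a few lines of plain Python. This is a sketch for intuition, not a replacement for the built-in Keras losses. The cross-entropy shown is the binary form, and the `eps` clipping value is an assumption added to avoid taking the logarithm of zero.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Average negative log-likelihood for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep p strictly inside (0, 1)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

print(mean_squared_error([3.0, 5.0], [2.0, 7.0]))  # (1 + 4) / 2 = 2.5
print(binary_cross_entropy([1, 0], [0.9, 0.1]))    # small loss: confident and correct
print(binary_cross_entropy([1, 0], [0.1, 0.9]))    # large loss: confident and wrong
```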

Backpropagation is the backbone of training neural networks. It computes the gradient of the loss function with respect to each weight, and an optimizer then uses those gradients to adjust the weights. Repeating this process over many iterations improves the model’s accuracy over time.

Deep learning differs from traditional machine learning in its architecture and function. While traditional methods rely on handcrafted features, deep learning automates feature extraction through its layered structure. This makes it more effective for tasks like natural language processing and computer vision.

| Aspect | Traditional Machine Learning | Deep Learning |
|---|---|---|
| Feature Extraction | Manual | Automatic |
| Data Processing | Limited to structured data | Handles unstructured data (e.g., images, text) |
| Applications | Basic predictive tasks | Advanced tasks (e.g., natural language processing) |

Deep neural networks excel at processing vast amounts of data. For example, in natural language processing, they can analyze text to understand sentiment or translate languages. Similarly, in computer vision, they can identify objects in images with high accuracy.

To dive deeper into these concepts, explore our guide on deep learning neural networks. This resource provides a comprehensive overview of architectures, data preparation, and optimization techniques.

Step-by-Step Guide to Building a Neural Network

Building a neural network involves a structured approach, from data preparation to model evaluation. Each step is crucial for creating a model that performs well in real-world applications. Whether you’re working on natural language processing or computer vision, this guide will walk you through the process.

Gathering and Preparing Data

The first step is gathering and preparing your dataset. High-quality data is essential for training effective models. Start by sourcing datasets relevant to your field, such as text for natural language tasks or images for computer vision.

Once you have your data, clean it to remove inconsistencies. This might involve removing duplicates, handling missing values, or normalizing data. Clean data ensures your model learns accurate patterns.

Finally, split your data into training, validation, and test sets. This helps evaluate your model’s performance effectively. Use techniques like shuffling to ensure randomness and avoid bias.
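One way to sketch the shuffle-and-split step in plain Python is shown below. The 70/15/15 proportions, the fixed seed, and the function name are assumptions chosen for illustration; pick fractions that suit the size of your dataset.

```python
import random

def train_val_test_split(samples, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle the samples, then carve off validation and test subsets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible

    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)

    test_set = shuffled[:n_test]
    val_set = shuffled[n_test:n_test + n_val]
    train_set = shuffled[n_test + n_val:]
    return train_set, val_set, test_set

train_set, val_set, test_set = train_val_test_split(range(100))
print(len(train_set), len(val_set), len(test_set))
```

Shuffling before splitting matters when the source data is ordered, for example by class or by date, because an unshuffled split could leave an entire class out of the training set.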

Constructing the Model Layers

Next, design the architecture of your neural network. Start with the input layer, which receives your prepared data. Then, add hidden layers to process the data through weighted connections.

Each layer should use an appropriate technique for feature extraction. For example, convolutional layers are ideal for image data, while recurrent layers work well for sequential data like text.

Finally, configure the output layer to provide the desired result, such as a classification or prediction. The structure of your layers will depend on the complexity of your task.
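As a rough sketch, the input, hidden, and output layers described above could be assembled with the Keras `Sequential` API as follows. The task is an assumption made for illustration (classifying 28x28 grayscale images into 10 classes), and the layer sizes are arbitrary starting points rather than recommendations.

```python
import tensorflow as tf

# Assumed task: 28x28 grayscale images, 10 output classes.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),                             # input layer
    tf.keras.layers.Conv2D(16, kernel_size=3, activation="relu"),  # convolutional feature extractor
    tf.keras.layers.MaxPooling2D(pool_size=2),                     # downsample feature maps
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(32, activation="relu"),                  # fully connected hidden layer
    tf.keras.layers.Dense(10, activation="softmax"),               # output: one probability per class
])

model.summary()
```

For sequential data such as text, the convolutional layers would typically be replaced with recurrent or attention-based layers, while the overall input, hidden, output pattern stays the same.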

Implementing Training and Evaluation

Training your model involves forward propagation, backpropagation, and optimization. Forward propagation passes data through the network, while backpropagation adjusts weights based on errors.

Optimization techniques like gradient descent help minimize the loss function. This ensures your model improves over time. Monitor metrics like accuracy and loss to evaluate performance.
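In Keras, forward propagation, backpropagation, and the optimizer step are all handled inside `fit`. The sketch below trains a tiny model on synthetic data so that it runs anywhere. The dataset, layer sizes, and learning rate are assumptions made for illustration only.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in data: 200 samples, 4 features, binary labels.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 4)).astype("float32")
y = (x.sum(axis=1) > 0).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# Gradient descent minimizes the loss; accuracy is tracked as a metric.
model.compile(
    optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
    loss="binary_crossentropy",
    metrics=["accuracy"],
)

# Hold out 20% of the data for validation during training.
history = model.fit(x, y, epochs=5, batch_size=32, validation_split=0.2, verbose=0)

print("Training loss per epoch:", history.history["loss"])
print("Validation accuracy per epoch:", history.history["val_accuracy"])
```

The `history` object records the monitored metrics for every epoch, which makes it easy to plot loss and accuracy curves afterwards.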

Here’s a comparison of data preparation techniques:

| Technique | Purpose | Example |
|---|---|---|
| Normalization | Scale data to a standard range | Rescaling pixel values to 0-1 |
| Tokenization | Break text into smaller units | Splitting sentences into words |
| Augmentation | Increase dataset size | Flipping images horizontally |
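Each example in the table can be sketched in a few lines of plain Python. These helper functions are hypothetical and deliberately simplistic. Real pipelines usually rely on library utilities, and whitespace splitting is only the most basic form of tokenization.

```python
def normalize_pixels(image):
    """Rescale 8-bit pixel values (0-255) to the range 0-1."""
    return [[value / 255.0 for value in row] for row in image]

def tokenize(sentence):
    """Split a sentence into lowercase words on whitespace."""
    return sentence.lower().split()

def flip_horizontal(image):
    """Mirror an image left to right to create an extra training example."""
    return [list(reversed(row)) for row in image]

image = [[0, 128, 255],
         [255, 128, 0]]

print(normalize_pixels(image)[0])
print(tokenize("Deep learning with TensorFlow"))
print(flip_horizontal(image))
```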

By following these steps, you can build a neural network that mimics the human brain’s ability to process complex data. Whether you’re a beginner or an expert, this guide provides a clear path to success.

Building and Training Your Neural Network Model

Building and training a neural network model requires a clear understanding of its architecture and training techniques. A well-structured model ensures efficient data processing and accurate results. This section will guide you through defining the layers and using propagation techniques to enhance your model’s performance.

Defining Input, Hidden and Output Layers

The foundation of any neural network lies in its layers. The input layer receives raw data, such as text for language processing or frames from a video. This layer acts as the entry point for information.

Hidden layers process the data through weighted connections. Each layer extracts specific features, enhancing the model’s ability to identify patterns. For example, in a deep learning model, the first hidden layer might detect edges in an image, while deeper layers recognize shapes.

The output layer provides the final result, such as a classification or prediction. Its structure depends on the task, whether it’s identifying objects in a video or translating text for language processing.

Using Backpropagation and Forward Propagation

Forward propagation is the process of passing data through the network. It starts at the input layer and moves through each hidden layer until it reaches the output. This step generates predictions based on the current weights of the model.

Backpropagation corrects errors by adjusting these weights. It calculates the gradient of the loss function and updates the weights to minimize errors. This iterative process improves the model’s accuracy over time.
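The interplay of the two passes is easiest to see on the smallest possible model: a single neuron with one weight and one bias. The plain-Python sketch below is an illustration under assumed values (squared-error loss, a learning rate of 0.05, and data generated from y = 2x + 1), not how TensorFlow implements training internally.

```python
def forward(w, b, x):
    """Forward propagation: compute the prediction from the current weights."""
    return w * x + b

def train(xs, ys, learning_rate=0.05, epochs=1000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = forward(w, b, x) - y   # forward pass, then compare to the target
            grad_w += 2 * error * x / n    # gradient of the mean squared error w.r.t. w
            grad_b += 2 * error / n        # gradient of the mean squared error w.r.t. b
        # Weight update: step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [2 * x + 1 for x in xs]  # targets generated from y = 2x + 1

w, b = train(xs, ys)
print(round(w, 3), round(b, 3))
```

After training, `w` and `b` should end up close to 2 and 1, the values used to generate the data. In a deep network the same idea applies, with the chain rule carrying gradients backwards through every layer.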

Here’s a comparison of these techniques:

| Aspect | Forward Propagation | Backpropagation |
|---|---|---|
| Purpose | Generate predictions | Correct errors |
| Direction | Input to output | Output to input |
| Role in Training | Process data | Optimize weights |

By combining these techniques, you can build a deep learning model that learns efficiently and delivers accurate results. Whether you’re working on language processing or analyzing video data, these methods are essential for success.

Optimizing and Fine-Tuning Your Network

Optimizing your neural network is a critical step to ensure it performs efficiently and delivers accurate results. Fine-tuning involves adjusting various components of your learning model to achieve the best possible outcomes. This section explores strategies for hyperparameter tuning, performance evaluation, and troubleshooting common issues.

Hyperparameter Tuning

Hyperparameters are settings that control the training process of your learning model. These include learning rate, batch size, and the number of layers. Proper tuning can significantly improve performance.

Start by experimenting with different values for these parameters. Use techniques like grid search or random search to identify the optimal combination. For example, a lower learning rate might prevent overshooting, while a larger batch size can speed up training.
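A grid search is just a loop over every combination of candidate values. In the sketch below, `validation_score` is a hypothetical stand-in for "train a model with these settings and return its validation accuracy", so the numbers it produces are made up; only the search loop itself is the point.

```python
from itertools import product

def validation_score(learning_rate, batch_size):
    """Hypothetical stand-in for training a model and measuring validation accuracy."""
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(batch_size - 64) / 1000

search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [32, 64, 128],
}

best_score, best_params = float("-inf"), None
for lr, bs in product(search_space["learning_rate"], search_space["batch_size"]):
    score = validation_score(lr, bs)
    if score > best_score:
        best_score, best_params = score, {"learning_rate": lr, "batch_size": bs}

print(best_params)
```

Random search follows the same pattern but samples a fixed number of combinations instead of trying them all, which scales better as the number of hyperparameters grows.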

Performance Evaluation Techniques

Evaluating your model’s performance is essential to ensure it meets your goals. Key metrics include loss and accuracy. Loss measures the difference between predicted and actual values, while accuracy indicates how often the model is correct.

Use validation sets to monitor progress during training. This helps detect overfitting, where the model performs well on training data but poorly on new data. Regularly check these metrics to refine your system.
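One simple heuristic for spotting overfitting is to find the epoch after which validation loss stops improving. The helper below is a hypothetical plain-Python sketch of that idea, and the `patience` value is an assumption. Keras offers a similar mechanism through its `EarlyStopping` callback.

```python
def best_epoch_before_overfitting(val_losses, patience=2):
    """Return the 0-based epoch with the lowest validation loss once the loss
    has failed to improve for `patience` consecutive epochs, else None."""
    best_epoch = 0
    for epoch, loss in enumerate(val_losses):
        if loss < val_losses[best_epoch]:
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            return best_epoch
    return None

# Validation loss falls, then climbs again: a typical overfitting pattern.
print(best_epoch_before_overfitting([0.9, 0.7, 0.6, 0.65, 0.7, 0.8]))  # -> 2
# Validation loss keeps falling: no sign of overfitting yet.
print(best_epoch_before_overfitting([0.9, 0.8, 0.7, 0.6]))             # -> None
```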

Troubleshooting Common Issues

Training a neural network can sometimes lead to unexpected challenges. One common issue is vanishing gradients, where updates to weights become too small to improve the model. To resolve this, consider using activation functions like ReLU.

Another issue is overfitting, which can be addressed by adding dropout layers or increasing the size of your dataset. Monitoring your hidden layer outputs can also help identify where the model might be struggling.
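To see what a dropout layer actually does, the sketch below applies the Keras `Dropout` layer to a tensor of ones. The rate of 0.5 and the tensor size are assumptions chosen to make the effect easy to read.

```python
import tensorflow as tf

dropout = tf.keras.layers.Dropout(rate=0.5)
activations = tf.ones((1, 1000))

# During training, roughly half the values are zeroed and the survivors are
# scaled up by 1 / (1 - rate) so the expected sum stays the same.
training_output = dropout(activations, training=True)

# At inference time, dropout is a no-op and the input passes through unchanged.
inference_output = dropout(activations, training=False)

zeroed_in_training = int(tf.reduce_sum(tf.cast(training_output == 0.0, tf.int32)))
zeroed_at_inference = int(tf.reduce_sum(tf.cast(inference_output == 0.0, tf.int32)))

print("Zeroed during training:", zeroed_in_training)
print("Zeroed at inference:", zeroed_at_inference)
```

When a model is trained with `fit`, Keras sets the `training` flag automatically, so dropout is active during training and disabled during evaluation and prediction.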

Here’s a comparison of common issues and solutions:

| Issue | Solution |
|---|---|
| Vanishing Gradients | Use ReLU activation |
| Overfitting | Add dropout layers |
| Slow Training | Increase batch size |

By following these strategies, you can fine-tune your network for tasks like computer vision or natural language processing. Regular monitoring and adjustments ensure your model remains efficient and effective.

Leveraging Key TensorFlow Tools and Techniques

TensorFlow offers a suite of tools that simplify the development of advanced machine learning models. These tools streamline the process of building, training, and optimizing artificial neural networks, making it easier to achieve high-performance results. Whether you’re working on recognition tasks or complex data analysis, TensorFlow’s ecosystem provides the resources you need.

Utilizing the Keras API

The Keras API is a powerful tool for building neural networks quickly and efficiently. Integrated with TensorFlow, Keras simplifies model creation by providing a high-level interface. This allows developers to focus on designing the architecture rather than managing low-level details.

For example, you can define layers, activation functions, and optimizers with just a few lines of code. This flexibility makes Keras ideal for both beginners and experienced developers working on diverse tasks.

Monitoring with TensorBoard

TensorBoard is an essential tool for visualizing and monitoring your model’s performance. It provides real-time insights into metrics like loss and accuracy, helping you identify areas for improvement. By tracking these metrics, you can fine-tune your model to achieve better results.

Additionally, TensorBoard allows you to visualize the model’s computation graph, showing how data flows through the network. This helps you understand how each layer processes information, making it easier to debug and optimize your model.

Here’s a comparison of TensorFlow tools:

| Tool | Purpose |
|---|---|
| Keras API | Simplifies model creation |
| TensorBoard | Visualizes performance metrics |

By leveraging these tools, you can enhance your model’s recognition capabilities and streamline your workflow. Whether you’re working on image classification or natural language processing, TensorFlow’s ecosystem ensures you have the right tools for the job.

Practical Applications of Machine Learning and Neural Networks

From healthcare to finance, neural networks are transforming how we approach data-driven challenges. These technologies are solving complex problems with unprecedented accuracy, making them indispensable in modern industries. By optimizing training processes, they reduce time and improve output quality.

In healthcare, neural networks are revolutionizing diagnostics. For example, they analyze medical images to detect diseases like cancer with high precision. This reduces human error and speeds up diagnosis, saving lives and resources.

The finance industry benefits from predictive analytics. Neural networks analyze market trends to forecast stock prices and detect fraudulent transactions. This enhances decision-making and minimizes risks, ensuring better financial stability.

Automotive companies use these technologies for self-driving cars. Neural networks process real-time data from sensors to navigate roads safely. This innovation is paving the way for a future with fewer accidents and more efficient transportation.

Here’s a comparison of applications across industries:

| Industry | Application | Impact |
|---|---|---|
| Healthcare | Medical image analysis | Faster, more accurate diagnoses |
| Finance | Fraud detection | Reduced financial risks |
| Automotive | Self-driving cars | Safer, more efficient transportation |

By addressing complex problems, neural networks are driving innovation across sectors. Their ability to optimize training and reduce time ensures they remain a cornerstone of modern technology.

Interpreting Model Outputs and Performance Metrics

Evaluating model performance is a critical step in ensuring your neural network delivers accurate results. Metrics like loss and accuracy provide valuable insights into how well your model is performing. By understanding these metrics, you can make informed decisions to optimize your work and improve outcomes.

Understanding Loss and Accuracy

Loss functions measure the difference between predicted and actual values. A lower loss indicates better performance. Accuracy, on the other hand, shows the percentage of correct predictions. Together, these metrics help you assess the effectiveness of your model.
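Accuracy is simple enough to compute by hand. The plain-Python sketch below takes the class with the highest predicted probability for each sample and counts how often it matches the true label. The labels and probabilities are made-up illustration values.

```python
def accuracy(true_labels, predicted_probs):
    """Fraction of samples whose highest-probability class matches the label."""
    correct = 0
    for label, probs in zip(true_labels, predicted_probs):
        predicted = max(range(len(probs)), key=probs.__getitem__)  # index of the largest value
        if predicted == label:
            correct += 1
    return correct / len(true_labels)

labels = [0, 2, 1, 1]
probs = [
    [0.7, 0.2, 0.1],  # predicts class 0: correct
    [0.1, 0.3, 0.6],  # predicts class 2: correct
    [0.2, 0.5, 0.3],  # predicts class 1: correct
    [0.6, 0.3, 0.1],  # predicts class 0: wrong, the label is 1
]

print(accuracy(labels, probs))  # 3 of 4 correct -> 0.75
```

Accuracy ignores how confident each prediction was, which is why it is usually read alongside the loss rather than on its own.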

For example, if your output layer produces predictions with high loss, it may indicate issues with the model’s training process. Adjusting parameters like learning rate or batch size can help reduce loss and improve accuracy.

Interpreting Output Layer Results

The output layer is where your model’s predictions are generated. Subtle changes in this layer can significantly impact results. For instance, modifying activation functions or adding more neurons can enhance the model’s ability to capture complex patterns.

Transformers, a neural network architecture built around attention mechanisms, are particularly effective in tasks like natural language processing. Attention lets the model focus on the most relevant parts of the input data, which improves its predictions.

Guiding Optimization with Metrics

Performance metrics are essential for guiding optimization efforts. Regularly monitoring loss and accuracy helps identify areas for improvement. For example, if accuracy plateaus during training, it may be time to adjust hyperparameters or add more data.

Here’s a comparison of key metrics:

| Metric | Purpose |
|---|---|
| Loss | Measures prediction errors |
| Accuracy | Shows correct prediction rate |

Practical Steps for Improvement

To systematically improve your model, follow these steps:

- Analyze loss and accuracy trends during training.

- Experiment with different output layer configurations.

- Use transformer architectures for complex tasks.

- Regularly validate your model with new data.

By focusing on these strategies, you can ensure your model performs at its best, delivering accurate and reliable results.

Emerging Trends in Deep Learning and Future Innovations

The future of AI is being shaped by groundbreaking advancements in neural networks. These innovations are not only enhancing existing technologies but also opening doors to entirely new possibilities. From classification tasks to complex data processing, the field is evolving at an unprecedented pace.

One of the most exciting trends is the rise of transfer learning. This technique allows models to use knowledge from one task to improve performance on another. For example, a model trained on image recognition can be fine-tuned for medical diagnostics, saving time and resources.

Generative models, such as GANs and diffusion models, are also transforming the landscape. These models can create realistic images, videos, and even text, pushing the boundaries of creativity. They are being used in industries like entertainment, marketing, and design to produce high-quality content efficiently.

Here are some key trends to watch:

- Transfer learning: Leveraging pre-trained models for new tasks.

- Generative AI: Creating realistic content with minimal input.

- Diffusion models: Generating high-quality images through iterative processes.

Breakthrough applications are setting new benchmarks in classification and data processing. For instance, advanced models can now analyze complex datasets with greater accuracy, leading to better decision-making in fields like healthcare and finance.

Emerging tools are also making it easier to develop and deploy AI solutions. Platforms like TensorFlow and PyTorch are continuously updated with new features, enabling developers to stay ahead of the curve. These tools ensure that the result of any project is both efficient and scalable.

Looking ahead, the integration of AI with other technologies, such as quantum computing, promises even more transformative results. These advancements will redefine how we approach problems and create solutions, making AI an indispensable part of our future.

Conclusion

Building and training neural networks with TensorFlow opens doors to solving complex challenges across industries. This guide has walked you through the fundamentals, from setting up your environment to optimizing models for better performance. By leveraging TensorFlow’s tools, you can tackle tasks like image recognition and predictive analytics with confidence.

Neural networks build on traditional machine learning techniques and add advanced capabilities for processing large amounts of data. Their ability to automate feature extraction and improve accuracy makes them indispensable in fields like healthcare, finance, and technology.

As you apply these insights, remember that the journey doesn’t end here. The future of AI is bright, with innovations like transfer learning and generative models pushing boundaries. Use this guide as a foundation to explore, experiment, and create solutions that drive progress.

60 thoughts on “How to Build Neural Networks Using TensorFlow for Deep Learning.”

Wonderful beat ! I would like to apprentice even as you amend your site, how could i subscribe for a weblog site? The account helped me a appropriate deal. I were tiny bit acquainted of this your broadcast provided vivid transparent idea

Nice read, I just passed this onto a friend who was doing a little research on that. And he just bought me lunch because I found it for him smile Therefore let me rephrase that: Thanks for lunch!

Does your blog have a contact page? I’m having a tough time locating it but, I’d like to shoot you an e-mail. I’ve got some recommendations for your blog you might be interested in hearing. Either way, great website and I look forward to seeing it grow over time.

Nice post. I learn something more difficult on completely different blogs everyday. It can all the time be stimulating to learn content from different writers and observe a bit one thing from their store. I’d prefer to make use of some with the content on my weblog whether or not you don’t mind. Natually I’ll give you a hyperlink on your net blog. Thanks for sharing.

excellent issues altogether, you simply received a emblem new reader. What may you recommend in regards to your publish that you simply made some days ago? Any positive?

Hello. fantastic job. I did not anticipate this. This is a remarkable story. Thanks!

The subsequent time I read a weblog, I hope that it doesnt disappoint me as much as this one. I imply, I do know it was my choice to read, however I actually thought youd have one thing fascinating to say. All I hear is a bunch of whining about something that you can repair in case you werent too busy looking for attention.

Excellent read, I just passed this onto a colleague who was doing some research on that. And he just bought me lunch since I found it for him smile So let me rephrase that: Thanks for lunch! “He who walks in another’s tracks leaves no footprints.” by Joan Brannon.

There are some fascinating closing dates on this article but I don’t know if I see all of them center to heart. There is some validity however I’ll take hold opinion till I look into it further. Good article , thanks and we want more! Added to FeedBurner as well

Thank you for the sensible critique. Me & my neighbor were just preparing to do a little research on this. We got a grab a book from our local library but I think I learned more clear from this post. I’m very glad to see such magnificent info being shared freely out there.

My wife and i got really happy Edward could deal with his web research using the ideas he grabbed through the web pages. It’s not at all simplistic to just be giving out tactics which usually people today could have been trying to sell. We really recognize we need the website owner to be grateful to because of that. The type of explanations you made, the straightforward website menu, the relationships your site assist to instill – it is everything awesome, and it’s letting our son and us understand that situation is exciting, which is unbelievably essential. Thanks for all the pieces!

Solid analysis

What i do not realize is in fact how you’re no longer actually a lot more well-liked than you might be now. You are very intelligent. You understand therefore considerably on the subject of this topic, made me personally consider it from numerous varied angles. Its like women and men are not interested except it is one thing to accomplish with Girl gaga! Your personal stuffs outstanding. At all times maintain it up!

I like what you guys are up too. Such intelligent work and reporting! Keep up the superb works guys I’ve incorporated you guys to my blogroll. I think it’ll improve the value of my website 🙂

Very detailed

I’ve recently started a website, the info you offer on this website has helped me tremendously. Thank you for all of your time & work.

Hello.This article was extremely fascinating, particularly because I was browsing for thoughts on this matter last Wednesday.

Heard good things of 78winbet8.com. Checking out the offers for myself.. Hopefully it will be worth it! See it for yourself: 78winbet8

Hey guys! Heard about uuddbet? Been checking it out myself. Seems like they’ve got some good odds going on. Might give it a shot, what do you think? Check it out here: uuddbet

Absolutely indited articles, Really enjoyed looking at.

250% bis zu 900€ + 600 Freispiele + 100 Gratis-Freispiele 100% bis zu 1.000€ + 50 Freispiele 100%

bis zu 500€ + 150 Freispiele Wir haben uns auf der

Webseite jedoch gut zurechtgefunden und hatten mit dem attraktiven Willkommensbonus eine Menge

Spaß! Bei den Turnieren geht es darum über den höchsten Einzelgewinn in einer Rangliste

möglichst hoch abzuschneiden, um einen umsatzfreieb Preis zu erhalten.

Ob Sie nun Blackjack-Tische mit niedrigen Einsätzen oder Roulette-Räder für High Roller bevorzugen, das Casino bietet für ein breites Publikum etwas.

Dank intuitiver Filter nach Anbieter und schnellen Ladezeiten ist die Navigation im Slot-Bereich reibungslos und angenehm.

Viele Titel bieten hohe Auszahlungsquoten und progressive Jackpots, die spannende Gewinne versprechen.

References:

https://online-spielhallen.de/boaboa-casino-auszahlung-ihr-umfassender-leitfaden/

Ja, das Wheelz Casino ist lizenziert, nutzt SSL-Verschlüsselung und bietet faire Bedingungen. Für deine

Spielaktivität erhältst du Spins, die Freispiele, Bonusgeld oder Extras bringen – individuell an deine Vorlieben angepasst.

Zudem bietet Wheelz häufig einen mehrsprachigen Support an, darunter auch Deutsch, was

die Kommunikation für Spieler aus Deutschland, Österreich und

der Schweiz erleichtert und Missverständnisse vermeidet. Die schnelle

Reaktionszeit sorgt dafür, dass Anliegen zügig bearbeitet

und Probleme effizient gelöst werden. Das Support-Team von Wheelz

ist nicht nur freundlich, sondern auch sehr kompetent und geschult, um alle Themen rund um das Casino professionell zu betreuen.

Wheelz überrascht seine Spieler regelmäßig mit vielfältigen Aktionen,

spannenden Freispielen und einem großzügigen Treueprogramm.

Dabei bieten sie spannende Varianten klassischer Tischspiele wie Blackjack, Roulette und

Baccarat sowie unterhaltsame Game Shows, die für Abwechslung und

Nervenkitzel sorgen. Außerdem kannst du an zahlreichen Blackjack-Tischen mit unterschiedlichen Regeln und Limits teilnehmen, was sowohl Gelegenheitsspielern als auch erfahrenen Strategen viel Spaß bereitet.

Jackpot-Spiele sind oft mit besonderen Features ausgestattet, die Freispiele oder Bonusspiele auslösen können, was die

Spannung zusätzlich erhöht. Neben diesen Dauerbrennern findest du im Wheelz Casino auch regelmäßig brandneue Veröffentlichungen von führenden Softwareanbietern wie Pragmatic Play, NetEnt, Microgaming oder Play’n GO.

Beliebte Spielserien wie Book of Dead, Big Bass Bonanza oder Reactoonz bieten nicht nur packende Unterhaltung, sondern auch realistische Gewinnchancen.

References:

https://online-spielhallen.de/zet-casino-auszahlung-ein-umfassender-leitfaden-fur-reibungslose-transaktionen/

Just wanna comment that you have a very decent website , I enjoy the pattern it really stands out.
