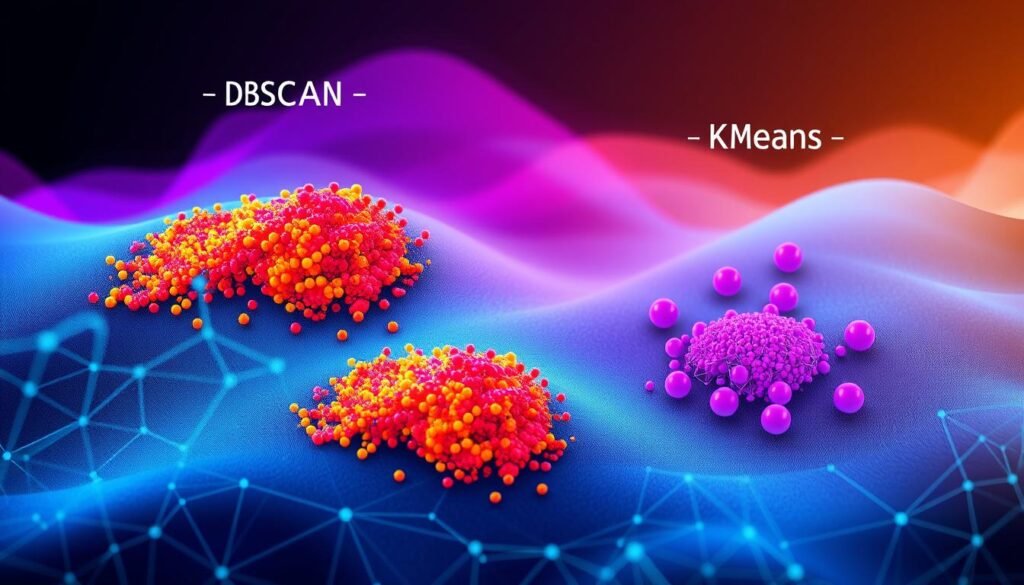

DBSCAN vs. K-Means is a crucial comparison for anyone looking to uncover hidden patterns in data through clustering. These two popular algorithms offer distinct approaches, each with unique strengths suited for different types of datasets. This guide is designed to help data analysts and machine learning professionals understand when and how to use each method effectively.

DBSCAN excels at detecting clusters of varying shapes and densities, making it ideal for complex data structures. In contrast, K-Means is known for its speed and efficiency, especially with well-separated, spherical clusters. Knowing these differences enables more informed decisions when selecting a clustering algorithm for your specific goals.

Whether you’re working with large-scale data or smaller datasets, understanding how DBSCAN and K-Means operate can significantly improve your data segmentation strategy.

Key Takeaways

- DBSCAN and K-Means are two widely used clustering algorithms for finding patterns in data.

- The choice between them depends on the dataset’s needs.

- Knowing each algorithm’s strengths and weaknesses is key to picking the right one.

- DBSCAN is great for finding clusters of all shapes and sizes, making it versatile.

- K-Means is simple and fast, best for round clusters.

- Understanding these algorithms helps analysts choose the best method for their data.

An Introduction to Clustering Algorithms

Clustering algorithms are key in machine learning and data science. They group objects so that similar ones are together. This section will cover the basics of clustering and why choosing the right algorithm is important.

Understanding the Basics of Clustering

Clustering means finding groups of similar data points. K-Means and DBSCAN are two of the most common algorithms for doing this. K-Means divides the data into a predefined number of groups, K. DBSCAN, on the other hand, finds clusters without needing to know in advance how many there are.

The Importance of Selecting an Appropriate Clustering Algorithm

Choosing the right algorithm, such as DBSCAN or K-Means, is essential for good data analysis. Both the nature of the dataset and the scale of the project matter. The right algorithm uncovers the patterns that are actually present in the data; the wrong one can impose structure that isn't there.

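The table below summarizes when to use K-Means or DBSCAN:

| Criterion | K-Means | DBSCAN |
|---|---|---|
| Large datasets | Efficient: each iteration scales linearly with the number of points | Slower on very large data, though spatial indexing helps |
| Outliers | Sensitive: outliers pull centroids toward them | Better at identifying outliers, which it labels as noise |
| Cluster shape | Assumes spherical clusters | Handles arbitrarily shaped clusters |
| Number of clusters must be predefined | Yes | No |
| Use case scenarios | Market segmentation | Anomaly detection |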

What is K-Means Clustering?

K-Means clustering is a key algorithm in machine learning. It divides a dataset into a set number of clusters. Each point is assigned to the cluster with the closest mean. This makes it great for quickly finding patterns in large data sets.

Using K-Means helps find natural groupings in data. It is used in market segmentation, document clustering, and image compression (grouping similar pixel colors so that each pixel can be replaced by its cluster's centroid color).

Exploring the K-Means Algorithm

The process starts with picking K initial centroids. Then, it goes through two main steps. First, it assigns each data point to the nearest centroid. Next, it updates the centroid of each cluster.

This cycle repeats until the centroids stop changing (or a maximum number of iterations is reached). The result is K groups in which each point is closer to its own cluster's centroid than to any other centroid.
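The assignment/update loop described above can be sketched from scratch in NumPy. This is a minimal illustration of the standard (Lloyd's) iteration and assumes plain random initialization from the data points. The function name `kmeans` and its arguments are our own and are not a library API. In practice you would use scikit-learn's `KMeans`, which adds smarter initialization and multiple restarts.

```python
import numpy as np

def kmeans(X, k, max_iter=100, seed=0):
    """Minimal Lloyd's-style K-Means: assign points, then update centroids."""
    rng = np.random.default_rng(seed)
    # Plain random initialization: pick k distinct data points as centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for every point.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its assigned points
        # (keep the old centroid if a cluster ends up empty).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Stop once the centroids no longer move.
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Two obvious groups: three points near (0, 0) and three near (5, 5).
X = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
              [5.0, 5.0], [5.1, 4.9], [4.9, 5.2]])
labels, centroids = kmeans(X, k=2)
print(labels, centroids, sep="\n")
```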

K-Means Algorithm Use Cases

K-Means finds diverse applications across industries—segmenting customers in retail for personalized marketing, grouping behavioral patterns in finance to spot fraud, and organizing medical imaging data in healthcare to streamline analysis.

Understanding K-Means can also support predictive work. Unlike linear regression, K-Means is unsupervised: it finds structure without a target variable. Its cluster labels can still be used as inputs to later predictive models.

Brief Overview of DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

DBSCAN focuses on finding dense regions in a dataset. It is good at forming clusters of different shapes and sizes. Density-based clustering like DBSCAN is known for handling spatial data well. It also labels outliers as noise instead of forcing them into a cluster.

Understanding core points, border points, and noise points is key to implementing DBSCAN. A core point has at least a minimum number of points (MinPts, counting itself) within a radius epsilon; core points form the heart of a cluster. A border point has fewer neighbors than that but lies within epsilon of a core point, so it joins that core point's cluster. A noise point is neither, and it is not assigned to any cluster.
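The three point types can be computed directly from their definitions. The sketch below uses a hypothetical helper, `classify_points`, that computes brute-force pairwise distances. It counts a point as its own neighbor, which is the convention scikit-learn's `min_samples` follows. It only labels point types; it does not build the clusters themselves.

```python
import numpy as np

def classify_points(X, eps, min_samples):
    """Label each point 'core', 'border', or 'noise' using DBSCAN's definitions.

    A point counts itself as a neighbour (scikit-learn's convention).
    """
    # Pairwise Euclidean distances (fine for small illustrative datasets).
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    within_eps = dists <= eps
    # Core: at least min_samples points (itself included) within eps.
    is_core = within_eps.sum(axis=1) >= min_samples
    kinds = []
    for i in range(len(X)):
        if is_core[i]:
            kinds.append("core")
        elif np.any(within_eps[i] & is_core):
            # Border: not core itself, but inside some core point's eps-neighbourhood.
            kinds.append("border")
        else:
            kinds.append("noise")
    return kinds

# A tight square of four points, one point hanging off its corner, one far away.
X = np.array([[0.0, 0.0], [0.0, 0.3], [0.3, 0.0], [0.3, 0.3],
              [0.75, 0.3], [5.0, 5.0]])
print(classify_points(X, eps=0.5, min_samples=4))
# → ['core', 'core', 'core', 'core', 'border', 'noise']
```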

To get the most out of DBSCAN in Python, you need to understand its settings. Changing epsilon (eps) and MinPts (called min_samples in scikit-learn) can greatly change the clusters. Using DBSCAN in Python also makes it easy to combine with other data science tools.

DBSCAN is a great choice for those looking to group complex data. It’s flexible and works well with real-world data, even when it’s not perfectly organized.

Key Parameters in DBSCAN and K-Means

Good results from a clustering algorithm depend on its key parameters. DBSCAN and K-Means each have parameters that need careful tuning, and this tuning is essential to each model's performance.

DBSCAN Parameters: Epsilon and Min_samples

DBSCAN's success depends on its two main parameters: epsilon and min_samples. Epsilon sets the radius of the neighborhood around each point. Min_samples is the minimum number of points that must fall within that radius for the region to count as dense. Tuning these two values well is what lets DBSCAN discover meaningful clusters, especially in spatial data.

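Changing epsilon and min_samples affects how clusters form. The trends below are illustrative; the actual effect of a given value depends on the scale and density of your data:

| Epsilon (eps) | min_samples | Typical effect on clusters |
|---|---|---|
| 0.5 | 5 | Denser, smaller clusters |
| 1.0 | 5 | Broader clusters; nearby groups may merge, giving fewer clusters |
| 0.5 | 10 | Stricter density requirement: fewer, more distinct clusters and more points labeled noise |
| 1.0 | 10 | Broader coverage with a stricter density requirement: fewer, simpler clusters |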
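A small parameter sweep shows these trends on actual data. This sketch uses scikit-learn's synthetic `make_moons` dataset as a stand-in. Its points span only a few units, so useful eps values are smaller than those in the table. For each setting, it reports how many clusters and noise points DBSCAN produces; `summarize` is a hypothetical helper.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_moons

# Synthetic stand-in data: two interleaving half-moons spanning a few units.
X, _ = make_moons(n_samples=300, noise=0.08, random_state=0)

def summarize(eps, min_samples):
    """Return (number of clusters, number of noise points) for one setting."""
    labels = DBSCAN(eps=eps, min_samples=min_samples).fit(X).labels_
    n_clusters = len(set(labels)) - (1 if -1 in labels else 0)  # -1 is noise, not a cluster
    n_noise = int(np.sum(labels == -1))
    return n_clusters, n_noise

for eps in (0.1, 0.2, 0.5):
    for min_samples in (5, 10):
        n_clusters, n_noise = summarize(eps, min_samples)
        print(f"eps={eps:<4} min_samples={min_samples:<3} clusters={n_clusters:<3} noise={n_noise}")
```

At a fixed eps, raising min_samples can only shrink the set of core points, so the noise count never goes down. A very large eps collapses everything into a single cluster.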

K-Means Parameters: Number of Clusters

K-Means' main parameter is the number of clusters, k. Picking the right k requires knowledge of the data and of the clustering's purpose. The Elbow Method is one common way to choose k, making K-Means more useful on real data.

This comparison shows the importance of tuning DBSCAN parameters and K-Means parameters. Effective hyperparameter tuning unlocks DBSCAN and K-Means’ full power, tailored to specific challenges.

Comparative Analysis: DBSCAN vs. K-Means

This analysis compares DBSCAN and K-Means to see which clustering algorithm works better in which situations. Clustering performance matters to data scientists, who rely on these methods to find groups in data.

Comparing the two techniques reveals each one's strengths and weaknesses. DBSCAN can find clusters of any shape and handles outliers well. K-Means, on the other hand, is faster but works well only when clusters are roughly spherical. The choice between these algorithms depends on the data's structure and diversity.

- DBSCAN vs. K-Means:

  - DBSCAN is well suited to complex data with noise.

  - K-Means tends to cope better with high-dimensional spaces and runs fast on big datasets.

Choosing the right clustering algorithm depends on the data’s needs and nature.

| Feature | DBSCAN | K-Means |
|---|---|---|
| Cluster shape flexibility | High (any shape) | Low (roughly spherical) |
| Handling of outliers | Good | Poor |
| Scalability | Medium | High |
| Parameter sensitivity | High (eps, min_samples) | Medium (number of clusters, initialization) |

Knowing these differences helps you make better choices when facing a clustering problem.
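A quick way to see the cluster-shape difference is to run both algorithms on two interleaving half-moons. K-Means cannot separate this shape because its cluster boundaries are straight lines. This sketch uses synthetic data, and the eps and min_samples values are assumptions chosen for standardized features. Both results are scored with the adjusted Rand index (ARI), where 1.0 means perfect agreement with the true labels.

```python
from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import make_moons
from sklearn.metrics import adjusted_rand_score
from sklearn.preprocessing import StandardScaler

# Two non-spherical, interleaving clusters with known true labels.
X, y_true = make_moons(n_samples=500, noise=0.05, random_state=42)
X = StandardScaler().fit_transform(X)

kmeans_labels = KMeans(n_clusters=2, n_init=10, random_state=42).fit_predict(X)
dbscan_labels = DBSCAN(eps=0.3, min_samples=5).fit_predict(X)

print("K-Means ARI:", round(adjusted_rand_score(y_true, kmeans_labels), 3))
print("DBSCAN  ARI:", round(adjusted_rand_score(y_true, dbscan_labels), 3))
```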

DBSCAN in Action: A Step-by-Step Guide

Start your journey into DBSCAN clustering with this step-by-step guide. We'll cover the key steps for running DBSCAN with scikit-learn and show how to visualize the clustering results effectively.

DBSCAN Clustering Steps

The first step is to understand and prepare your data. Normalize or standardize your features so that no single feature dominates DBSCAN's distance calculations.

Next, pick the right values for DBSCAN’s main parameters: eps and min_samples. Eps is the radius around a point, and min_samples is how many points must be nearby for a point to be a core point.

After setting these parameters, use scikit-learn's DBSCAN to fit the model to your data. This step identifies core points, border points (points reachable from a core point), and noise points that don't belong to any cluster.
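The steps above might look like this with scikit-learn. The data here is synthetic (`make_blobs`), and the eps and min_samples values are assumptions for standardized features. The fitted model's `labels_` holds each point's cluster id (-1 for noise). Its `core_sample_indices_` lists the core points, from which border points can be derived.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_blobs
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data: three blobs (replace with your own feature matrix).
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.5, random_state=0)

# Step 1: standardize so every feature contributes comparably to distances.
X_scaled = StandardScaler().fit_transform(X)

# Steps 2-3: choose eps / min_samples (assumed values) and fit.
db = DBSCAN(eps=0.3, min_samples=5).fit(X_scaled)
labels = db.labels_                      # cluster id per point, -1 = noise

# Derive the three point types from the fitted model.
core_mask = np.zeros(len(X_scaled), dtype=bool)
core_mask[db.core_sample_indices_] = True
noise_mask = labels == -1
border_mask = ~core_mask & ~noise_mask   # in a cluster but not core

n_clusters = len(set(labels)) - (1 if -1 in labels else 0)
print(f"clusters={n_clusters}, core={core_mask.sum()}, "
      f"border={border_mask.sum()}, noise={noise_mask.sum()}")
```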

Visualizing DBSCAN Clustering Results

Visualizing your results is key in DBSCAN clustering. It helps you see patterns in your data. Use libraries like Matplotlib or Seaborn to plot your points and color them by cluster or noise.

Visuals make understanding easier and help you adjust parameters. A scatter plot showing different clusters can really show off DBSCAN’s power.
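Here is a minimal plotting sketch, assuming Matplotlib is installed. Clustered points are colored by cluster id, and noise points (label -1) are drawn separately in grey. The dataset and parameter values are illustrative assumptions. The `Agg` backend lets the code run without a display. To view the result, save it with `fig.savefig(...)`, or drop the `matplotlib.use("Agg")` line and call `plt.show()` in an interactive session.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_moons
from sklearn.preprocessing import StandardScaler

X, _ = make_moons(n_samples=300, noise=0.06, random_state=1)
X = StandardScaler().fit_transform(X)
labels = DBSCAN(eps=0.3, min_samples=5).fit_predict(X)

fig, ax = plt.subplots(figsize=(6, 4))
noise = labels == -1
# Clustered points, coloured by cluster id.
ax.scatter(X[~noise, 0], X[~noise, 1], c=labels[~noise], cmap="viridis", s=15)
# Noise points drawn separately in grey with an 'x' marker.
ax.scatter(X[noise, 0], X[noise, 1], c="lightgrey", marker="x", s=15, label="noise")
ax.set_title("DBSCAN clusters (noise in grey)")
ax.legend()
```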

Follow these steps and use visualization techniques to get deep insights from your data. This guide is perfect for both beginners and experts in data science. It’s a practical way to learn DBSCAN using scikit-learn.

K-Means Clustering: Implementation and Visualization

Learning machine learning means knowing both the theory and how to apply it. K-Means clustering is well suited to grouping similar data. This guide shows how to run K-Means clustering in Python with scikit-learn's KMeans. It also shares tips for better clustering visualizations.

Implementing K-Means in Python

To use K-Means well, work through it step by step. First, prepare your data by putting all features on the same scale. Then choose how many clusters (K) you want and create scikit-learn's KMeans estimator. You can also set how many times the algorithm is run with different starting centroids (n_init) and the maximum number of centroid updates per run (max_iter).
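Here is a minimal sketch of these steps with scikit-learn's `KMeans`. It runs on synthetic data from `make_blobs`; the dataset, K=4, and the random seeds are assumptions for illustration.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.preprocessing import StandardScaler

X, _ = make_blobs(n_samples=500, centers=4, cluster_std=0.8, random_state=7)
X_scaled = StandardScaler().fit_transform(X)   # put features on the same scale

km = KMeans(
    n_clusters=4,      # K, chosen up front
    n_init=10,         # independent runs with different starting centroids; best kept
    max_iter=300,      # max assignment/update iterations per run
    random_state=42,   # reproducible initialisation
)
labels = km.fit_predict(X_scaled)

print("inertia:", round(km.inertia_, 2))
print("iterations used:", km.n_iter_)
print("centroids:\n", km.cluster_centers_)
```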

Choosing the right number of clusters is key. The Elbow Method is a common way to find a good K. It plots the sum of squared distances from each point to its nearest centroid (the inertia) against K and picks the K where the curve bends. This balances cluster compactness against the number of clusters.
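The Elbow Method can be sketched by fitting K-Means for a range of K values and recording `inertia_`. That is scikit-learn's name for the sum of squared distances to the nearest centroid. The synthetic data and the range 1 to 8 are assumptions. With real data, you would plot the values and inspect the bend by eye.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=600, centers=4, cluster_std=0.7, random_state=3)

# Inertia for each candidate K (sum of squared distances to nearest centroid).
ks = range(1, 9)
inertias = [KMeans(n_clusters=k, n_init=10, random_state=0).fit(X).inertia_ for k in ks]

for k, inertia in zip(ks, inertias):
    print(f"k={k}: inertia={inertia:.1f}")
# Plot ks against inertias and look for the bend: past a good K, the drop flattens.
```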

Visualizing K-Means Clustering Output

Clustering visualization is important for understanding your data. Python's Matplotlib and Seaborn are well suited to this. After running K-Means, plot your data with a different color for each cluster. Then mark each cluster's centroid to show where the cluster is centered.

For a deeper look, try PCA to reduce data dimensions. This makes your data easier to see on a two-dimensional plot. It helps you see how data points cluster around the centroids.
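The next sketch combines the last two ideas. It clusters the four-feature Iris dataset bundled with scikit-learn, projects both the points and the centroids into two dimensions with the same PCA, and plots them. The choice of dataset and K=3 is an assumption for illustration, and Matplotlib is assumed to be installed. Projecting the centroids with the same fitted PCA keeps them in the same coordinates as the points.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; remove to show plots interactively
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X = StandardScaler().fit_transform(load_iris().data)   # 150 samples, 4 features
km = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)

# Project points AND centroids with the same PCA so they share one 2-D space.
pca = PCA(n_components=2).fit(X)
X_2d = pca.transform(X)
centers_2d = pca.transform(km.cluster_centers_)

fig, ax = plt.subplots(figsize=(6, 4))
ax.scatter(X_2d[:, 0], X_2d[:, 1], c=km.labels_, cmap="viridis", s=15)
ax.scatter(centers_2d[:, 0], centers_2d[:, 1], c="red", marker="X", s=200, label="centroids")
ax.set_xlabel("PC1")
ax.set_ylabel("PC2")
ax.legend()
```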

Advantages and Disadvantages of DBSCAN and K-Means

Choosing the right clustering algorithm is key to good data analysis. DBSCAN and K-Means have their own strengths and weaknesses. This makes them good for different data challenges. Let’s look at when to use each one.

DBSCAN Advantages: Flexibility in Cluster Shapes

DBSCAN's main strength is that it can find clusters of any shape. This is useful in fields like environmental studies and anomaly detection.

K-Means Advantages: Ease and Speed of Computation

K-Means is simple and fast, making it a good fit for big datasets. It works best with roughly round clusters, though, and you have to pick the number of clusters yourself.

DBSCAN Limitations: Sensitivity to Parameters

DBSCAN’s big challenge is its need for careful parameter setting. The right epsilon and min_samples are key. If not set right, DBSCAN might not find good clusters or might find too many outliers.

K-Means Disadvantages: Assumption of Spherical Clusters

K-Means assumes clusters are roughly round and similar in size. This assumption can distort results on real data. K-Means performs poorly on non-round clusters or when cluster sizes vary a lot. It is also a poor fit for categorical data, because means and Euclidean distances are not meaningful for categories.

| Feature | DBSCAN | K-Means |
|---|---|---|
| Cluster Shape | Flexible, any shape | Spherical |
| Handling of Outliers | Robust | Sensitive |
| Parameter Sensitivity | High (Epsilon, Min_samples) | Medium (Number of clusters) |
| Typical Use-case | Anomaly detection, complex pattern recognition | Large dataset segmentation, fast clustering needs |

In summary, DBSCAN and K-Means are good for different things. DBSCAN is great for complex patterns, while K-Means is fast for big datasets. Knowing these helps pick the best method for your data.

Practical Scenarios: When to Use DBSCAN over K-Means?

Choosing the right clustering algorithm is key to data analysis success. This section looks at when to use DBSCAN and K-Means. They are great for tasks like anomaly detection, spatial data clustering, customer segmentation, and marketing analysis.

DBSCAN for Anomaly Detection and Spatial Data Clustering

DBSCAN is well suited to finding outliers in data, because the points it labels as noise are natural candidates for anomalies. This makes it useful for cleaning data and catching unusual points, which could be errors, fraud, or security threats.
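Here is a minimal anomaly-detection sketch that fits DBSCAN and treats every point labeled -1 (noise) as a candidate anomaly. The data is synthetic, with three outliers injected deliberately, and eps and min_samples are assumptions tuned to this scale. Deciding whether a noise point is really an error or fraud still requires domain review.

```python
import numpy as np
from sklearn.cluster import DBSCAN

rng = np.random.default_rng(0)
# Synthetic "normal" readings around two operating points...
normal = np.vstack([
    rng.normal(loc=[0, 0], scale=0.3, size=(100, 2)),
    rng.normal(loc=[4, 4], scale=0.3, size=(100, 2)),
])
# ...plus three injected outliers (indices 200, 201, 202).
outliers = np.array([[2.0, 2.0], [8.0, -3.0], [-5.0, 6.0]])
X = np.vstack([normal, outliers])

labels = DBSCAN(eps=0.5, min_samples=5).fit_predict(X)
anomaly_idx = np.flatnonzero(labels == -1)   # DBSCAN marks noise with -1
print("flagged as anomalies:", anomaly_idx)
```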

DBSCAN also works well with spatial data. It can handle different densities and shapes. This is important in geographic data where object relationships matter.

K-Means for Customer Segmentation and Marketing Analysis

K-Means is great for segmenting customers. It groups them by what they buy and who they are. This helps in making marketing more targeted.
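The following sketch segments customers with K-Means. The customer table is entirely hypothetical: three synthetic groups described by annual spend, visits per month, and age, with K=3 assumed. The point is the workflow: scale the features, cluster, then profile each segment by its average raw values.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
# Hypothetical customer table: columns are [annual_spend, visits_per_month, age].
budget  = np.column_stack([rng.normal(300, 50, 100),   rng.normal(2, 0.5, 100), rng.normal(25, 3, 100)])
regular = np.column_stack([rng.normal(1200, 150, 100), rng.normal(6, 1, 100),   rng.normal(40, 5, 100)])
premium = np.column_stack([rng.normal(5000, 500, 50),  rng.normal(10, 2, 50),   rng.normal(50, 6, 50)])
customers = np.vstack([budget, regular, premium])

# Scale first; otherwise annual_spend (thousands) would dominate visits and age.
X = StandardScaler().fit_transform(customers)
segments = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)

# Profile each segment by its average raw feature values.
for s in range(3):
    mean_spend, mean_visits, mean_age = customers[segments == s].mean(axis=0)
    print(f"segment {s}: n={np.sum(segments == s):3d}, spend={mean_spend:7.0f}, "
          f"visits={mean_visits:4.1f}, age={mean_age:4.1f}")
```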

K-Means is also good with big data. It’s simple and fast. This makes it perfect for marketing analysis, giving insights for better campaigns.

| Feature | DBSCAN | K-Means |
|---|---|---|
| Best Use Case | Anomaly detection, spatial data clustering | Customer segmentation, marketing analysis |
| Data Shape Suitability | Irregular, varies | Spherical, evenly sized clusters |
| Handling Outliers | Excellent: identifies and isolates outliers | Poor: outliers can skew the mean |
| Scalability | Good with noise, challenging with large datasets | Excellent: efficient with large datasets |
| Pre-defined Clusters | Not required | Number of clusters must be specified |

Choosing between DBSCAN and K-Means depends on your data and project needs. DBSCAN is great for handling anomalies and spatial data. K-Means is better for segmenting large customer data sets. This makes it key for marketing and sales.

DBSCAN vs. K-Means: Machine Learning and Big Data Applications

In big data and machine learning, choosing the right clustering algorithm is key. This section looks at how DBSCAN and K-Means fit different big data settings.

DBSCAN for Big Data and IoT Applications

DBSCAN suits big data that contains complex, irregular clusters. It fits IoT applications, where data arrives continuously and in fine detail. It is also strong at finding patterns and spotting unusual readings across large IoT networks.

K-Means in Scalable Machine Learning Environments

K-Means is a top choice when you need to scale up. It's simple and fast, which makes it a good fit for big datasets and for tasks that need quick results.
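One way to push K-Means further on large data is scikit-learn's `MiniBatchKMeans`. This K-Means variant, not mentioned in the text above, updates centroids from small random batches instead of the full dataset. The sketch assumes synthetic data and arbitrary batch sizes. Its `partial_fit` call shows how data can be fed in chunks when it doesn't fit in memory. Mini-batch results are usually close to, but not identical to, those of full K-Means.

```python
import numpy as np
from sklearn.cluster import MiniBatchKMeans
from sklearn.datasets import make_blobs

# Larger synthetic dataset standing in for "big data".
X, _ = make_blobs(n_samples=100_000, centers=5, n_features=10, random_state=0)

# In-memory fit: each centroid update uses a random batch of 4096 rows.
mbk = MiniBatchKMeans(n_clusters=5, batch_size=4096, n_init=3, random_state=0)
labels = mbk.fit_predict(X)
print("centroids shape:", mbk.cluster_centers_.shape)

# Streaming variant: feed chunks with partial_fit when data doesn't fit in memory.
stream = MiniBatchKMeans(n_clusters=5, n_init=3, random_state=0)
for chunk in np.array_split(X, 20):
    stream.partial_fit(chunk)
```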

| Feature | DBSCAN | K-Means |
|---|---|---|
| Data Shape Handling | Handles complex shapes well | Limited to spherical clusters |
| Scalability | Scalable with efficient indexing | Highly scalable, better for very large datasets |
| Application | Suited for anomaly detection and spatial data | Ideal for market segmentation and large-scale clustering |
| Performance | Depends on parameter settings (eps and minPts) | Fast performance, depends on number of clusters |

DBSCAN is flexible and detailed, perfect for complex IoT environments. K-Means is fast and simple, great for big, straightforward clustering tasks.

Conclusion

Choosing the right clustering algorithm is key in data science. It depends on understanding your data and what you want to achieve. We’ve looked at DBSCAN and K-Means, two top clustering methods.

DBSCAN is great for complex, noisy data because it can find clusters of any shape. K-Means is better for data that’s evenly spread and has round clusters. Knowing what your data needs is essential.

This comparison shows how important it is to choose the right algorithm. While there's no single best choice, understanding each algorithm's strengths can help you make the best decision.

Ultimately, picking between DBSCAN and K-Means depends on your project’s needs. We suggest considering each algorithm’s strengths and weaknesses. With careful thought, you can use these algorithms to their fullest in data science. Let your project’s goals and data guide you to the best clustering strategy.

FAQ

What are the main differences between DBSCAN and K-Means?

DBSCAN finds clusters based on density. It looks for high-density areas and separates them with low-density areas. This makes it good for finding clusters of any shape and handling outliers well.

K-Means, on the other hand, groups data into K clusters around a center point. It assumes clusters are round and roughly the same size. This might not work for all data types.

When should I choose DBSCAN over K-Means?

Choose DBSCAN for data with outliers or noise. It’s also better for clusters that aren’t round or the same size. It’s great for spatial data and finding anomalies.

If your data has clusters of different shapes, DBSCAN is a better choice than K-Means.

How do I select the epsilon and min_samples parameters in DBSCAN?

Epsilon is the radius around a point to consider it part of a cluster. Min_samples is the minimum number of points for a dense area. These are key for DBSCAN’s performance.

You can choose these parameters based on domain knowledge, or use methods like grid search scored with clustering metrics.
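One widely used heuristic for epsilon is the sorted k-distance plot. For each point, compute the distance to its min_samples-th nearest neighbor, sort these distances, and look for the knee where they start rising sharply. The sketch below assumes synthetic standardized data. Its 95th-percentile `eps_guess` is only a crude numeric stand-in for reading the knee off a plot.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.neighbors import NearestNeighbors
from sklearn.preprocessing import StandardScaler

X, _ = make_moons(n_samples=400, noise=0.06, random_state=0)
X = StandardScaler().fit_transform(X)

min_samples = 5
# Querying the training set returns each point itself first (distance 0),
# so the last column is the min_samples-th neighbour counting the point itself,
# matching how scikit-learn's DBSCAN counts min_samples.
nn = NearestNeighbors(n_neighbors=min_samples).fit(X)
distances, _ = nn.kneighbors(X)
k_dist = np.sort(distances[:, -1])

# Plot k_dist and look for the knee; a high percentile is a rough substitute.
eps_guess = float(np.percentile(k_dist, 95))
print(f"suggested eps ~ {eps_guess:.3f}")
```

A point is a core point exactly when its k-distance is at most eps. Choosing eps at the 95th percentile therefore makes roughly 95% of the points core points.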

Can K-Means be used for non-spherical clusters?

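K-Means works best with spherical clusters. For non-spherical clusters, try DBSCAN or a K-Means variant such as kernel K-Means. Note that K-Means++ only improves how the initial centroids are chosen; it does not remove the spherical-cluster assumption.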

What is clustering and why is selecting the right algorithm important?

Clustering groups similar data points together without labels. Choosing the right algorithm is key because different algorithms work better for different data types. The right choice affects the quality of your results.

Are there any limitations to using DBSCAN?

Yes, DBSCAN has some limits. It’s sensitive to epsilon and min_samples parameters. It may struggle with clusters of different densities.

DBSCAN is also more complex than K-Means and can be slow for large datasets. It doesn’t work well when clusters are not clearly separated.

How is K-Means clustering implemented in Python?

In Python, use scikit-learn for K-Means clustering. Create a KMeans instance, set the number of clusters (K), and fit it to your data. The library handles the process of assigning points to clusters and updating centroids.

What is the ‘curse of dimensionality’ and how does it affect DBSCAN?

The 'curse of dimensionality' refers to problems that appear as the number of dimensions grows: data becomes sparse, and distances between points become increasingly similar. This affects DBSCAN because its notion of density relies on distances. High-dimensional data can make DBSCAN less effective unless you reduce dimensions first.
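The distance-concentration effect can be demonstrated numerically. This sketch uses a hypothetical helper, `distance_contrast`, on uniformly random points. It measures how much farther the farthest point is than the nearest one, as seen from a random query point. As dimensions grow, that relative contrast shrinks, which is why a single eps struggles to separate dense regions from sparse ones.

```python
import numpy as np

def distance_contrast(n_dims, n_points=500, seed=0):
    """(max - min) / min distance from one random query to random uniform points."""
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, n_dims))
    query = rng.random(n_dims)
    d = np.linalg.norm(points - query, axis=1)
    return (d.max() - d.min()) / d.min()

for dims in (2, 10, 100, 1000):
    print(f"{dims:5d} dims: relative contrast = {distance_contrast(dims):.2f}")
```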

Can DBSCAN handle big data and IoT applications?

DBSCAN can handle big data and IoT, but it needs optimizations. Techniques like approximate nearest neighbor search help manage memory and speed. Its density-based approach is useful for IoT’s spatial clustering needs.
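One memory-saving approach described in scikit-learn's documentation is to precompute a sparse radius-neighbors graph, which stores only the pairs within eps. The graph is then passed to DBSCAN with `metric="precomputed"`. Note that this uses an exact neighbor search with a KD-tree, not the approximate search mentioned above. The data and parameter values are assumptions for illustration.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_blobs
from sklearn.neighbors import NearestNeighbors

X, _ = make_blobs(n_samples=5_000, centers=4, cluster_std=0.6, random_state=0)
eps, min_samples = 0.3, 10

# Sparse graph holding only pairs within eps (the KD-tree performs the search).
nn = NearestNeighbors(radius=eps, algorithm="kd_tree").fit(X)
graph = nn.radius_neighbors_graph(X, mode="distance", sort_results=True)

labels_sparse = DBSCAN(eps=eps, min_samples=min_samples,
                       metric="precomputed").fit_predict(graph)

# The same clustering computed directly, for comparison.
labels_direct = DBSCAN(eps=eps, min_samples=min_samples,
                       algorithm="kd_tree").fit_predict(X)
print("identical labels:", np.array_equal(labels_sparse, labels_direct))
```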

What metrics are used to evaluate the performance of clustering algorithms?

Common metrics include the Silhouette Coefficient, the Calinski-Harabasz Index, and the Davies-Bouldin Index. They measure how tightly points are grouped within clusters and how well clusters are separated from one another. When ground-truth labels are available, (adjusted) mutual information scores compare the clustering against them.
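The following sketch computes these metrics with scikit-learn on a K-Means result over synthetic blobs; the data and K=3 are assumptions. The first three are internal metrics that need only the data and the labels, while adjusted mutual information needs ground-truth labels. When scoring DBSCAN, keep in mind that these functions treat the noise label -1 as one more cluster, so you may want to exclude noise points first.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import (
    adjusted_mutual_info_score,
    calinski_harabasz_score,
    davies_bouldin_score,
    silhouette_score,
)

X, y_true = make_blobs(n_samples=500, centers=3, cluster_std=1.0, random_state=1)
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)

# Internal metrics: need only the data and the predicted labels.
print("silhouette        :", round(silhouette_score(X, labels), 3))         # in [-1, 1], higher is better
print("Calinski-Harabasz :", round(calinski_harabasz_score(X, labels), 1))  # higher is better
print("Davies-Bouldin    :", round(davies_bouldin_score(X, labels), 3))     # >= 0, lower is better
# External metric: compares against known true labels.
print("adjusted MI       :", round(adjusted_mutual_info_score(y_true, labels), 3))
```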

2,122 thoughts on “DBSCAN vs. K-Means : Choosing the Best Fit for Your Data”

What’s up every one, here every one is sharing these knowledge, so it’s pleasant to read this webpage, and

I used to go to see this webpage all the time.

Visit my homepage; Company Flags

I always emailed this website posst page to aall my friends, because if like to read it afterward

my friends will too.

my web site … six Flags Fiesta texas

Do you have a spam ssue on this blog; I also am a blogger, and I was curious about your situation; many of

us have developed som nice practices and we are looking to

swap methods with other folks, why not shoot me an e-mail if

interested.

my blog :: Fiesta Texas

I wanted to thank you for this good read!! I absolutely loved every

biit of it. I have got you book-marked to check out new things you post…

Feel free to surf to my web-site Six Flags Discovery kingdom

Thanks on your marvelous posting! I quite enjoyed reading it, you will be a great author.I

will remember to bookmark your blog and will come back sometime soon. I want to

encourage one to continue your great posts, have a nice holiday weekend!

Feel free to visit my blog Dating profile

My brother suggested I might like this website. He used to be

totally right. This post truly made my day. Yoou can not

believe just how a lot time I had sppent for this info!

Thanks!

my blog post :: Online dating

Hello just wanted to give you a quick heads up.

The text in ykur post seem to be running off the screen in Firefox.

I’m not sure if this is a formatting issue or someething to

ddo with web browser compatibility but I figured I’d post to let you know.

The design llook great though! Hope you get the issue solved soon. Thanks

Here is my blog … รับจัดงานศพ

Very good blog! Do you have any recommendations for aspiring writers?

I’m hoping to start my owwn blog soon buut I’m a little lost on everything.

Would you recommend starting with a free platform lile WordPress or go for a paid option?

There are so many choices out there that I’m totally confused ..

Any recommendations? Cheers!

My blog – Boonforal

Spending my cheap car insurance dallas tx plan each year instead of monthly spared me around 5%.

It is actually a chump change, however those cost savings add up

with time.

Woah! I’m really loving the template/theme of

this website. It’s simple, yet effective. A lot of times it’s very difficult to get

that “perfect balance” between user friendliness and visual

appeal. I must say you’ve done a awesome job with this.

In addition, the blog loads super fast for me on Firefox.

Excellent Blog!

Discover Your True Self with Crave Burner Appetite SuppressantWith so many

temptations around, it can be tough to adhere to your diet.

Meet Crave Burner Appetite Suppressant. This groundbreaking product aims to assist you in managing

your cravings and enhance your weight loss efforts.Let’s uncover

the benefits, attributes, and science that underpins this

exceptional appetite suppressor.What is Crave Burner – claude.ai,?Crave Burner is a

research-verified appetite suppressant that

helps you curb those pesky hunger pangs. This

supplement is perfect for individuals navigating the difficulties of

weight loss, as it targets the body’s processes that activate hunger.What Makes It Work?These

ingredients work in harmony to:Balance your hunger-related hormones.Elevate

metabolic activity.Promote the burning of fat.Improve mood and reduce emotional eating.What sets Crave Burner apart?So, why should

you consider Crave Burner over other suppressants for

appetite? Here are a few compelling reasons:Research-verified: Backed by scientific studies, this hunger suppressant ensures effectiveness.Natural Ingredients: Made

from nature’s best, it’s a safe option for long-term use.No Side Effects: Countless users

testify to experiencing minimal or no side effects

alongside other suppressants.The Ingredients Behind the MagicCrafted with effectiveness in mind, Crave Burner includes these powerful natural ingredients:Glucomannan – a fiber that swells in your stomach, aiding in feelings of fullnessGreen Tea Extract – celebrated for its properties that boost metabolismGarcinia Cambogia – a fruit-derived extract

that helps inhibit fat productionHow to Incorporate Crave Burner into Your RoutineAdding Crave Burner to your everyday schedule is a breeze!To experience a quicker feeling of fullness, take the advised dosage before your meals.

Combine this with a nutritious diet and consistent physical activity for the best outcomes.Frequently

Asked Questions (FAQ)1. Can I safely use Crave Burner?Yes,

Crave Burner consists of natural ingredients that are commonly viewed

as safe. Still, consulting a healthcare professional before trying a

new supplement is essential, especially if you have health concerns.2.

When should I anticipate seeing results?Individual results will vary, yet many people mention that they experience fewer cravings within a week of regular use, along with increased

energy levels and enhanced mood.3. Am I able to take Crave Burner alongside other

medications?You should definitely consult your healthcare provider if you’re taking any other medications,

as they can offer personalized guidance considering your unique

medical history.Are men and women both able to use Crave Burner?Of course!

Crave Burner can be used by people of all genders looking to effectively manage their appetite.What makes Crave Burner unique compared

to other appetite suppressants?What makes Crave Burner exceptional is

its formulation grounded in research, emphasizing natural components to reduce side effects and enhance effectiveness.Do I need

to follow a strict diet while using Crave Burner?While Crave Burner is

effective at suppressing appetite, it’s still

beneficial to maintain a balanced diet and incorporate physical activity to

achieve your weight loss goals.Key TakeawaysCrave

Burner is a potent, research-verified appetite suppressant.Research has confirmed that Crave

Burner is a significant appetite suppressant.It works by regulating hunger hormones

and enhancing metabolic function.Crave Burner aids in regulating hunger hormones and boosting metabolic efficiency.The natural ingredients make

it a safe choice for long-term use.Crave Burner is deemed safe for long-term

use, thanks to its all-natural ingredients.Incorporating it into your diet

can greatly assist in managing cravings.Integrating Crave Burner into your meals can greatly support

craving control.ConclusionWith its natural ingredients and proven efficacy,

Crave Burner Appetite Suppressant is a game-changer for anyone grappling with cravings and weight management.The

combination of effective natural ingredients makes Crave

Burner Appetite Suppressant a significant breakthrough for anyone facing challenges with cravings and weight

management.By tackling the underlying causes of hunger, this supplement enables you to take control of your eating habits.Why hesitate?Kickstart

your journey with Crave Burner today and reshuffle your relationship with

food!

It’s hard to find well-informed people about this topic, but

you seem like you know what you’re talking about!

Thanks

I really like your blog.. very nice colors & theme.

Did you design this website yourself or did you hire someone to do it for you?

Plz answer back as I’m looking to design my own blog and would like to know where u got this from.

appreciate it

Sweet blog! I found it while surfing around on Yahoo

News. Do you have any tips on how to get listed in Yahoo News?

I’ve been trying for a while but I never seem to get there!

Cheers

Hello there! Quick question that’s completely

off topic. Do you know how to make your site mobile friendly?

My website looks weird when viewing from my iphone 4. I’m

trying to find a template or plugin that might be able to resolve this problem.

If you have any suggestions, please share. Many thanks!

Please let me know if you’re looking for a author for your

site. You have some really great articles and I feel I would be a good asset.

If you ever want to take some of the load off, I’d absolutely love to write some articles for your blog in exchange for a link back to mine.

Please blast me an e-mail if interested. Regards!

This site was… how do you say it? Relevant!! Finally I’ve found

something which helped me. Thank you!

Amazing issues here. I’m very glad to look your article.

Thanks a lot and I am having a look forward to contact you.

Will you kindly drop me a e-mail?

I’m amazed, I must say. Rarely do I encounter a blog that’s both equally educative and engaging,

and let me tell you, you’ve hit the nail on the head.

The problem is something too few men and women are speaking intelligently

about. Now i’m very happy I came across this in my hunt for something

relating to this.

Incredible points. Great arguments. Keep up the good effort.

บทความนี้ให้ข้อมูลมีประโยชน์มากครับ ถ้าใครกำลังมองหาร้านจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี ๆ ครับ

Also visit my page: ร้านพวงหรีดวัดสายไหม

ชอบแนวคิดที่แชร์ไว้ในบทความนี้ครับ ถ้าใครกำลังมองหาร้านจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี

ๆ ครับ

Feel free to visit mmy homepage – Ebony

ชอบแนวคิดที่แชร์ไว้ในบทความนี้ครับ ถ้าใครกำลังมองหาร้านจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี ๆ ครับ

Look into my site รับจัดดอกไม้หน้าศพ

Hi, i think that i saww you visited my weblog so i came to return the favor?.I’m attempting to find things to improve my site!I guess its ok to

use some of your ideas!!

my site :: ออกแบบโลโก้ ฮ วง จุ้ย ที่ไหน ดี

This design is incredible! You definitely know

how to keep a reader amused. Between your wit and your videos,

I was almost moved to start my own blog (well, almost…HaHa!) Wonderful job.

I really enjoyed what you had to say, and more than that,

how you presented it. Too cool!

This design is spectacular! You definitely know how to keep a reader entertained.

Between your wit and your videos, I was almost moved to start

my own blog (well, almost…HaHa!) Excellent job. I

really enjoyed what you had to say, and more than that, how you presented it.

Too cool!

It’s actually a cool and useful piece of information. I am glad that

you shared this useful info with us. Please stay us informed

like this. Thank you for sharing.

my webpage – รับออกแบบโลโก้ ตามหลักฮวงจุ้ย

fantastic submit, very informative. I wonder why the other specialists of this sector do not notice this.

You should continue your writing. I’m sure, you’ve

a great readers’ base already!

I really enjoy good glass oof wine. From a local vineyard or imported, winje always makes the moment better.

Anyone else heere love wine?

my web site: ร้าน ขาย ไวน์

ชอบแนวคิดที่แชร์ไว้ในบทความนี้ครับ ถ้าใครกำลังมองหาร้านจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี ๆ ครับ

My site Aorest

บทความนี้ให้ข้อมูลมีประโยชน์มากครับ ถ้าใครกำลังมองหาร้านจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี ๆ ครับ

Also visit my blog – พวงหรีดกรุงเทพ

ขอบคุณสำหรับบทความดี ๆ

ครับ ถ้าใครกำลังมองหาบริการจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี ๆ ครับ

Visit my web page; พวงหรีดดอกไม้สด

ขอบคุณสำหรับบทความดี ๆ ครับ ถ้าใครกำลังมองหาบริการจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี ๆ ครับ

Feel free to visit my webpage: พวงหรีด ดอกไม้สด

บทความนี้ให้ข้อมูลมีประโยชน์มากครับ ถ้าใครกำลังมองหาร้านจัดดอกไม้งานศพ ผมแนะนำลองดูร้านที่มีผลงานจริงและรีวิวดี

ๆ ครับ

Feel free to visit my webpage – Aorest

Heya just wanted to give you a quick heads up and let you know a few of the pictures aren’t loading properly.

I’m not sure why but I think its a linking issue.

I’ve tried it in two different web browsers and both show the same results.
